Also, the worst case of getting old ever. Dorothy Fuldheim was a well-respected, cutting-edge newscaster in Cleveland from the 1930s until a stroke ended her career in 1984. She made the mistake of going on Johnny Carson's show in 1979 with Richard Pryor…and you can guess how that went. It was a classic example of a clueless white person who doesn't believe poverty is real meeting a black person who actually knows what the real world is like.

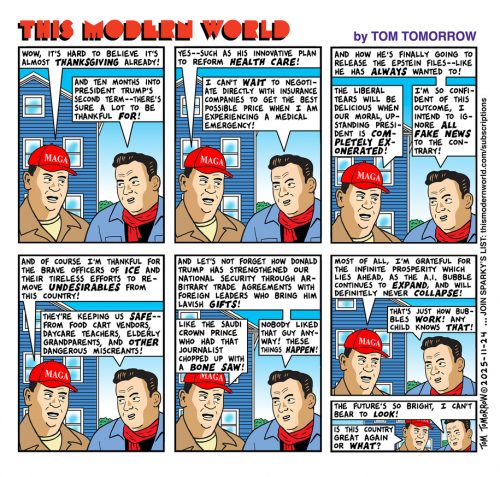

Suddenly, it’s clear how Ronald Reagan got elected.