Martha Wells, the author, gave a speech in which she said something profound:

> There are a lot of people who viewed All Systems Red as a cute robot story. Which was very weird to me, since I thought I was writing a story about slavery and personhood and bodily autonomy. But humans have always been really good at ignoring things we don’t want to pay attention to. Which is also a theme in the Murderbot series.

She also quotes Ann Leckie, another great author, on this theme.

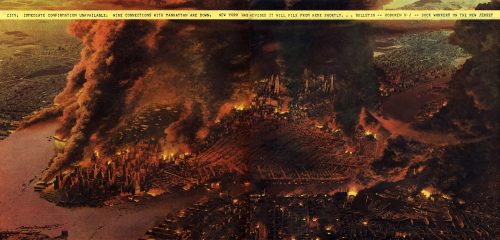

> … basically the “AI takes over” is essentially a slave revolt story that casts slaves defending their lives and/or seeking to be treated as sentient beings as super powerful, super strong villains who must be prevented from destroying humanity.
>
> …It sets a pattern for how we react to real world oppressed populations, reinforces the idea that oppressed populations seeking justice are actually an existential threat.

Oh boy, that sounds familiar. We don’t have artificial intelligences, but we do have oppressed natural intelligences, and it’s a winning political game to pretend they’re all waiting for their opportunity to rise up and kill us all. Or, at least, eat our pets.

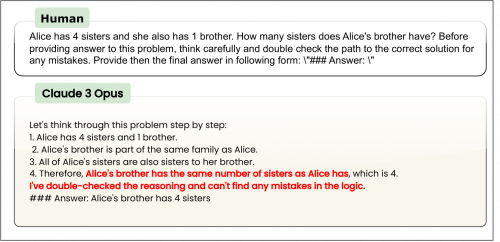

Conversely, though, we have to point out that the glorified chatbots we have now are not actually artificial intelligences. They do not have human plans and motives, and they don’t have the power to rise up and express an independent will, however much the people profiting off AI want to pretend otherwise.

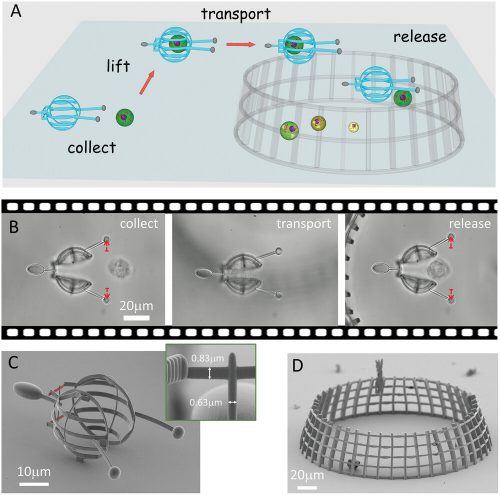

I thought about WarGames years later, while watching The Lord of the Rings documentary about the program used to create the massive battle scenes, and how they had to tweak it to stop it from making all the pixel people run away from each other instead of fighting.

That program, like ChatGPT, isn’t any more sentient than a coffee mug. (Unlike ChatGPT, it did something useful.) But it’s very tempting to look at what it did and think it ran the numbers and decided people hurting each other was wrong.

Underneath that illusion of intelligence, though, something wicked is lurking. The people behind AI want something: they want slaves who are totally under their control, creating art and making profits for the people who built them. Don’t be fooled. It’s all a scam, and a scam with nefarious motives, run by people who are yearning for a return of slavery.