In case you didn’t know, or had mistakenly believed some vendors’ claims that things are getting better: computer security is still approximately as bad as it was when I got into the field in 1989.

Usually, when I say something like that around my peers, someone says, “well, it means we’ll still have jobs!” but, seriously, that joke wears thin after a while. What it really means is “a great deal of time and money is going to waste, and your career is a tiny little slice of that wastage.” Great.

The strength of a chain is only as good as its weakest link, blah blah blah blah; it happens to be true. Forget all the fancy technology, it’s a problem akin to “arrange your clothes so that they are not in the path of your urine, before you pee” (formerly, “unzip before you pee”) – it’s the kind of problem that is easier to avoid than to clean up. That’s what has always frustrated me: my career has been an endless litany of “do we need a firewall?” being asked during an incident response in which a huge amount of data leaked from a more-or-less open network. A few years ago, I started answering such questions a bit differently:

Customer: “Do we need a firewall?”

Marcus: “No, you needed a firewall. Now that it’s too late, you need a bunch of consultants to do an incident response, and you need to send breach disclosure letters to your users, and do a bunch of forensic analysis to figure out what happened – and you also need a firewall.”

I’ve seen some doozies in my day. One bank in Iceland explained to me and Bill Cheswick (who were visiting, attending a conference) “most hackers are American and can’t read Norse.” Granted, that was back around 1990, before everyone believed that hackers mostly read Chinese.

Then, there’s this kind of thing:

That’s Dr Harold Bornstein, Donald Trump’s doctor; the guy who’s probably writing the endless prescriptions for amphetamine-based diet pills. Damn it, you should not be using Windows XP!

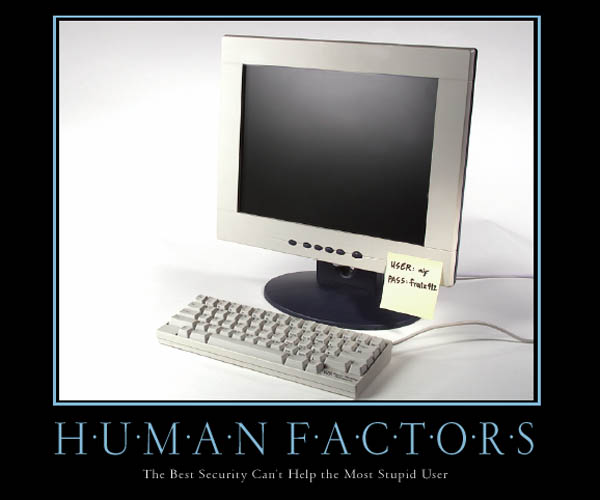

And, don’t tape your password on your monitor unless you have adequate physical security:

What really made me pop my buttons about this was listening to an episode of NPR’s Planet Money podcast, in which they framed a computer security incident as addressable with insurance. Then, they proceeded to completely miss the point that the insurance was merely being used as a lever to try to adjust user behavior. Which, it didn’t. So please explain to me, again, how insurance helped with the problem?

Here’s the episode if you want to give it a listen. [npr] Normally, Planet Money is not terrible. Or, perhaps it’s always terrible and they just normally don’t talk about things I’m deeply familiar with.

Marriott. Target. The Democratic National Committee. There are so many hacks so often, they may feel unstoppable. And companies are trying everything. We watch as one company grapples with being hacked, and find out that a dusty old financial tool, hundreds of years old, could solve this very modern problem.

First, we have a litany of people who did not correctly arrange their garments before embarking on a urination. Then, they all got wet and nasty smelling and had to laboriously clean up and change their clothes. All of which could have been avoided by performing a simple, brief, and widely recommended behavior. Instead of doing that, they chose to have the problem and then they were unhappy with its cost. If you google for “how much does a typical computer security breach cost” it takes you right to:

You sure can save a hell of a lot of money by just ignoring basic computer hygiene until after you have a disaster.

Gotta go; pee to mop up in aisle 5.

I regret the ableism in the calendar “stupid user” – stupid is not a lifestyle choice. I did that calendar back in 2000, and have since re-thought a few things. [calendar] By the way, that calendar won some kind of marketing award, which I suppose makes me an award-winning marketing consultant. Oh, the irony!

On a more serious note: some clients have asked me, rhetorically, “well, isn’t the problem that Windows is too vulnerable?” Yeah. So why do they keep buying it? If you’ve got users who don’t know how to use a computer, get them a Mac. “Oh but Macs are really expensive!” yes, they are – but so is letting your ignorant users go on the internet running Windows.

This may be relevant to your interests: it turned up in Raymond Chen’s year-end link clearance post at his Old New Thing Blog; it’s James Mickens’s Usenix Security keynote. Mickens is always entertaining, and he gives the machine-learning and Internet-of-Things people a richly deserved kicking in this one. Also, he coins a great term, “Technological Manifest Destiny” around 0:35, which a lot of tech companies are all too guilty of.

sourcefire now brings up cisco?

David: yes, that happened around 2017.

And what is this simple, brief, and widely recommended behavior?

colinday@#4:

And what is this simple, brief, and widely recommended behavior?

Their argument is outright weird. I don’t think this is an unfair synopsis:

By buying insurance, having an incident and a payout, then having their premium skyrocket, the company’s execs embarked on user awareness training (“don’t just click on stuff”) which, when they tested it, didn’t really work very well at all.

“Weird” is probably inadequate. “Foolish” is closer; the insurance/risk sharing approach involves accepting that you will have the problem in the first place. In other words the strategy is “get hacked” or something.

Cat Mara@#1:

it’s James Mickens’s Usenix Security keynote. Mickens is always entertaining, and he gives the machine-learning and Internet-of-Things people a richly deserved kicking in this one

Ooh, I will run and check that out. I’m a fan of his; he’s quite good at pointing out the absurdity of computing, which is a target-rich environment.

I think the point behind insurance is that the insurance companies are supposed to audit you (or get you audited) and give you a discounted premium if you’re doing the right things to lower your risk. That is the incentive to do the right things before you get hacked. Also, of course, to avoid moral hazard the insurer shouldn’t really be covering the full cost of an incident but merely mitigating it. That said, yeah, who knows how well insurance really will work. Do we have evidence from other fields? Such as liability insurance for doctors and lawyers and the like?

I think your poster is, well, Just Wrong. First, it doesn’t matter how stupid the user is, it’s the IT department’s responsibility to make systems that work for those users. Second, I’m imagining the story behind that Password Post-it: the stupid IT department decided that users had to change their passwords once a month, never re-using old ones or substrings from old ones, with at least 12 characters including upper and lower case, numbers and punctuation, and no dictionary words. And they still ended up with something less secure than a login with TOTP and the user’s aunt’s name as the password, even in their fantasy world where all users can be forced to pick and memorize good passwords a dozen or more times per year.

We’ve gone so far past the point where any password can be good any more that a password on a Post-it on a monitor really shouldn’t be a serious problem. Yeah, train your users not to do that, but if they do, your system should still handle it.
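For anyone wondering what “a login with TOTP” involves under the hood, here is a minimal sketch, assuming the standard time-based one-time-password scheme (RFC 6238, built on RFC 4226) with HMAC-SHA1, a 30-second step and 6 digits. The function names and the `login_ok` helper are hypothetical, for illustration only; a real deployment would also rate-limit attempts and tolerate some clock drift.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based variant (RFC 6238): the counter is the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now) // step, digits)


def login_ok(password_ok: bool, secret: bytes, submitted: str, at=None) -> bool:
    """Hypothetical check: the password AND the current code must both match."""
    expected = totp(secret, at)
    return password_ok and hmac.compare_digest(expected, submitted)
```

The point of the second factor is that the code changes every 30 seconds and is derived from a secret that never appears on a Post-it, so a leaked or weak password alone is not enough to log in.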

I tend to think of computer security problems as being related to the “can’t program my VCR because technology is complicated” nonsense that popped up around or right before the ubiquitous personal computer. When everyone thinks they can take an easy write-off approach to a subject and not learn it, there are going to be issues. Off the top of my head I think the only problem mitigation strategy left at that point is avoidance and I don’t think that works unless you want to live like the Amish.

The counter to that is relatively simple. You don’t have to know the details as long as you learn the basic concepts enough to have new ideas and problems explained to you.

As an example of what this looks like in another field, I’m generally a thumb-fingered idiot when it comes to crafting things or repairing machinery. And yet I know how to change a tire, change my oil safely and could maybe even manage replacing brake pads or spark plugs and distributor cap, although I’d really rather not. And around 30 years ago I remember seeing a children’s cartoon explain the basics of how an internal combustion engine works.

If people thought that way about computers the landscape would be quite different. I don’t think it all falls back on IT to do it for you. That approach is a temporary dead-end masquerading as a solution because it assigns responsibility to someone who can’t change the behavior causing the problem. Even if I wave my best chicken bones over your computer I can’t alchemically transmute bad practices into good ones. A knowledgeable public is the only way out that works.

Odd, I always assumed insurance was part of the mitigation strategy when the risk being insured against can’t be eliminated completely. Having a business suit vacuum your accounts and sending you sneering notes how they’re willing to knock off a percentage of a percentage point off your fees if you jump through their hoops might actually also work as an incentive to do more. But it’s mostly about having a wad of cash to douse the flames with, isn’t it?

To me this sounds just a tiny bit like arguing that having a fire brigade is to embrace fires. To be honest, putting a lot of things from this blog, news and elsewhere together, I’d have to conclude that it would be foolish not to be insured if you’re a big company.

1) You’re always an attractive target

2) Governments including “yours” are so busy designing the weapons that may end up hitting you that they don’t have time to look out for you.

3) Any company is inevitably dependent on lots of enterprise software and services that can cause problems. Not just the OS but tons of everyday stuff that’s bound to be stuffed with sloppy bloat and liabilities and has become integral to corporate IT.

4) You employ humans.*

So no matter what you do for security and how much time you spend on doing it, there’s always a risk and when the IT of a large company is compromised I expect the result can only be catastrophic and expensive. At that point insurance sounds like a really useful thing.

*I’m inclined to agree with Curt Sampson that you need to be able to cope with naive users (such as yours truly). For example we seem to have reached the point where (rare and targeted) examples of spam e-mails are too perfect to spot. So the system really does have to be able to cope with critical user errors that could have been “trained away” in the past.

Let me be clear here that I’m not saying that IT should try to make a world where no end user ever has to know anything about security: obviously that just can’t work. But because the IT department has (one hopes) much better knowledge than end users about what needs to be done to stay reasonably secure, it’s their responsibility to figure out what combination of technical measures, policy, training and verification needs to be put in place in order to maintain the level of security that they need, and in particular it’s their responsibility to devise systems and operation methods that balance end users Getting Things Done with avoiding security issues. (And by “balance” I mean that the end users feel that, given whatever inconvenient things they need to do for security, they’re still without difficulty able to get enough Things Done that their bosses are satisfied with their productivity.)

Here’s an example of what I feel is really bad behaviour by an IT department. In one company I’ve seen (which does a lot of other things reasonably well, BTW), they had a password reset page on the internal network that used HTTPS with a certificate that the internal clients considered invalid. When end users went to the page, they’d see the standard “this site cannot be verified” notice, and if they asked the IT department what to do, the answer was “click on the stuff to skip the TLS cert authentication.” It’s probably obvious how wrong that is.

What should they do? They should a) fix the damn cert, since it’s their own site; b) train users to never, ever bypass authentication when that message comes up, and instead to contact the IT department if they need to get to a site showing that message; and c) when they get a call about such an issue, respond immediately, so that the end user isn’t tempted to ignore the IT department’s advice because they think it’s getting in the way of Getting Work Done.
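The same rule applies to scripts and internal tools, where the equivalent of “click through the warning” is switching certificate verification off. A minimal sketch of the alternative, assuming Python’s standard `ssl` and `urllib` modules; the CA bundle path and the URL a caller would pass in are hypothetical placeholders, not anything from the company described above.

```python
import ssl
import urllib.request


def internal_tls_context(ca_bundle=None):
    """Build a client TLS context that always verifies the server.

    ca_bundle is an optional path to the organisation's own CA certificate
    (hypothetical, e.g. a file distributed by the IT department). With None,
    the system's default trust store is used.
    """
    ctx = ssl.create_default_context(cafile=ca_bundle)
    # create_default_context() already enables both of these checks;
    # the point is to never turn them off to "make the error go away".
    assert ctx.verify_mode == ssl.CERT_REQUIRED
    assert ctx.check_hostname
    return ctx


def fetch(url, ca_bundle=None):
    """Fetch a page, failing loudly if the certificate cannot be verified."""
    ctx = internal_tls_context(ca_bundle)
    with urllib.request.urlopen(url, context=ctx) as resp:
        return resp.read()
```

If the internal site uses a private CA, the fix is to distribute that CA to clients (here, via `ca_bundle`), so verification succeeds for the right reason instead of being skipped.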

Doing security well means setting up an environment where end users can do their jobs as conveniently as possible within the security framework, so they’re not tempted to work around it. (And, where things must be inconvenient, getting real understanding and buy-in from management so they’re not suggesting that their employees compromise the security framework to get things done.)

komarov@#9:

To me this sounds just a tiny bit like arguing that having a fire brigade is to embrace fires.

It’s part of a layered defense. Your first line of defense should be “try not to have a fire at all” and your second line of defense should be “try to have your staff understand how to stop a fire before the whole building is a loss, and have fire extinguishers and training for them” and the last line of defense is “try to keep the fire down while the fire brigade comes” and if that doesn’t work – that’s what insurance is for.

Just saying “periodically let it burn down and have insurance” is the “Florida Coastal Mansion” approach.