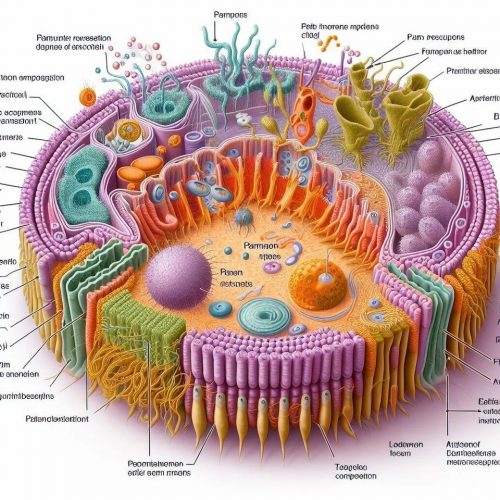

Oh no. That hideous AI-generated scientific illustration is churning out hordes of copycats. I do not like this.

I teach cell biology, I am familiar with illustrations of cells, and this abomination is just garbage, through and through. It’s an educational experience, though — now I’m appreciating how bad AI art is. It all lacks that thing called “content”. It makes a mockery of the work of professional medical and scientific illustrators, who work for years to acquire the artistic skill and the biological knowledge to create useful diagrams. There’s no intelligence in these stupid pictures.

About all I can tell about that picture is that AI artists don’t like straight lines. There are no straight lines anywhere except where the labels are.

I also can’t see what language the labels are from. If any.

This is visual nonsense or visual static. This is rather disturbing. It all looks very definitive, so your mind tries to figure out what it is, and comes up with nothing. It isn’t anything and isn’t even meant to suggest anything real.

AI artists aren’t fans of symmetry either. There is no symmetry in that picture.

This is different from what we see in real life.

It looks like a gladiator arena on an alien planet.

Where, and in what context, did that thing appear?

Looks exactly like what an illustrated restaurant menu for bacteria would look like, if there were such things.

Isn’t that the face of one of the members of Insane Clown Posse?

I wonder when we will see things like that show up as illustrations in fringe articles, showing how the zyrlixy vaccine will destroy your gablugu cells!

I mean, illustrations and graphs in the typical antivax, chemtrail etc. literature seem to be only decorations to make stuff look sciency anyway.

It looks like a mad entry for a cake decorating competition. Mind you, I once saw an “Animal Alphabet” with different animals chosen to form the initial letters. So “N is for North” was made of which appropriate animal? Not narwhals but penguins. Natural stupidity can be as bad as artificial intelligence.

Vaguely horrifying. In the ones you posted a couple days ago (no less horrifying) the AI was able to create sciency words that for the most part could even be pronounced; this version apparently has no concept of allowed glyphs from the Roman alphabet, with the exception of capitalized initial letters (almost invariably “P”). One of its fevered creations looks like “Pampons”; perhaps the brand name of some horrifying product in some horrifying possible world?

Four billion years of mindless evolution in microbiology + mindless generative AI (with entirely different fitness criteria) ==> perfect storm of bad.

Really, that illo should have some gears, a conveyor belt, and a Pentium chip. Just to see if anyone is paying attention.

Actually, these are just illustrations of the inside of a roomba.

@bcw bcw #10:

You mean the corporations have sold us enslaved alien cyborgs and told us we’ve bought mere machines? The bastards! Are there no depths to which they will stoop?

I’m not an AI person, but I am a programmer who has watched various AI phenomena with interest for 40 years now. Back then I also did the basic training for their kind of programming.

In light of which, I think it is charlatanry to call the LLMs and related systems “AI systems.” These systems make no attempt at knowledge representation or reasoning. Their output makes sense only by a sort of statistical accident. They are really amazing within their limits, which unfortunately are not predictable in advance nor automatically detectable once crossed. Which last fact makes them mostly useless, except as adjunct tools for human experts. There’s not some kind of “last mile problem” that could be solved to make them safe and effective for real-world product making.

I’m told that some of the academic AI people who are associated with these projects hope that knowledge representation will emerge spontaneously in these systems, if they can get a big enough sample of text. Which I think demonstrates that these people have a badly mistaken understanding of the relationship between knowledge and the expression of it using language.

@Wade Scholine – The best label I know of for generative neural networks is “stochastic parrots.” But parrots are smarter than these things, so the label is doing parrots a disservice.

Where taf are these abominations appearing? Student papers? Scholarly submissions? Memes on FB?

That is a common late step in science research and also in real life.

We’ve all seen it.

It is the step called, “And then a miracle occurs.”

The one above came from reddit.

https://www.reddit.com/r/labrats/comments/1at6uer/which_bing_ai_generated_cell_are_you_feeling_like

This is the link behind “hordes of copycats” at the top of the OP, with the href title removed so you can see where it goes.

I think I know where they got its training data.

Artificial intelligence takes a crack at decoding the mysterious Voynich manuscript

Wikipedia – Voynich manuscript

So this is what a shoggoth looks like on the inside…

@Wade Scholine – Also a (retired) programmer, and a former AI programmer. Wrote software for an early neural net chip, some machine vision, some language recognition stuff. Your take is exactly right, I couldn’t have said it better. The problems you describe for these systems also apply to AI driving, including their fragility and the ‘last mile’ problem. It helps to think of ChatGPT and things like that as Potemkin village intelligence. It can fool a finance guy who rides by and looks at it, but on close examination there is no substance.

@Helge, I like “stochastic parrots,” but it doesn’t capture the dishonesty quite as well as “Potemkin village intelligence.” The hype over generative AI is close to fraud, imo. The grifters who were pumping cryptocoin are now moving to hyping generative AI applications, a clear warning sign. (Sam Altman, who runs OpenAI now, is a finance guy who has also dabbled in cryptocoin.)

Those soon-to-be aired AI videos of Donald Trump saving children from a burning building will also be educational.

That’s two posts about this in short order. AI sure does seem to be inspiring you! Granted, in the same way that creationists inspire you.

raven@2–

Cell structure is not very symmetrical.

@17– I like your Voynich manuscript joke. On-topic, the link you provided illustrates exactly what’s wrong with the magical thinking behind the big tech companies’ current push for AI: ‘The Voynich manuscript has not been decoded, so let’s throw AI at it!’ But there’s no understanding of what is going on and no capacity to draw meaning. In the end, the only finding they made (that the letter frequencies were similar to devowelled Hebrew) was not helpful (as they should have known if they had any knowledge of code breaking), and it could have been done without AI anyway. Usually the first step in cracking ciphertext is tabulating letter frequencies, a strategy human codebreakers have already exhausted since the Voynich manuscript came to public attention.
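For anyone curious how mundane that first step is, here is a minimal Python sketch of letter-frequency tabulation. It assumes the text has already been transliterated into Latin letters (for the Voynich script, that transliteration is itself a big assumption), and `letter_frequencies` is just a hypothetical helper name, not anything from the linked study.

```python
from collections import Counter


def letter_frequencies(ciphertext: str) -> list[tuple[str, float]]:
    """Return (letter, relative frequency) pairs, most common first.

    Only alphabetic characters are counted, and case is ignored.
    Ties keep the order in which letters first appear in the text.
    """
    letters = [ch.lower() for ch in ciphertext if ch.isalpha()]
    total = len(letters)
    if total == 0:
        return []  # nothing to tabulate
    counts = Counter(letters)
    return [(ch, n / total) for ch, n in counts.most_common()]


if __name__ == "__main__":
    for letter, freq in letter_frequencies("Attack at dawn"):
        print(f"{letter}: {freq:.3f}")
```

The table this produces is what you then compare against known frequency profiles of candidate languages, which is roughly what the devowelled-Hebrew comparison amounts to.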

Wade@13– Ye Gods! The idea that shovelling more data will allow general intelligence to emerge stochastically is a disturbing delusion. This is especially troubling given that on current projections, AI projects will run out of good training data within the next few years. I am also concerned that this philosophy is setting up AI to be a huge significance-fishing operation without the handlers being aware of it. Or worse, being aware of it but seeing a market opportunity in laundering bad data analysis.

I can’t imagine the company I work for allowing any AI-generated material to be released without review and editing. That feedback may become training data to help the AI get better, but nothing would ever ship without editorial, engineering, marketing and legal approval.

Am I the only one seeing transcriptions in red of some of the words from the illustration overlaying your text (on the main blog page, not when you click through)?

It does make it far more understandable though. I can now tell the Palanciariortiant from the Poontelemen ectler sem arens. And I never could have guessed from the blurry labels that the structure on the bottom right is the Ainteenof Dentheelicge merancepte.

It all makes sense now.

I’m pretty sure right at the centre there it says ‘Parmesan’: this may be one of my cells.

Oh my Gawd!

The Lodᗴv̷a̷evan fee’is𝔳n!

It’s… so beautiful!

That subreddit is fun. Here’s another thread for the interested:

https://www.reddit.com/r/labrats/comments/1atjkp7/major_advances_in_therapies_of_bone_metastases/

I’m getting a map of an alien world from some kind of video game.

cartomancer, if you haven’t already come across this webcomic, it’s worth checking out.

Trust me: https://killsixbilliondemons.com/

[artist — an actual person, quite gifted, Abbadon — gets carried away, sometimes. Lotsa zooming required.

It is quite marvellous in my estimation]

A fairly accurate rendering of a typical eldritch abomination.

Yeah, these “advanced chat bot” things have no capacity to self-check. They are like a two-year-old telling a story, but worse, since the two-year-old at least “knows” they are making things up. The few people I know who use them to generate art don’t just have the “AI” make the art and then use that. They run it iteratively, starting with some baseline images (like one guy who does superhero images based on his wife). They correct obvious visual errors in the art, feed that back in with some added text to “adjust” what sort of details are added (for instance, if they want the costume to have mechanical elements or high-tech parts), make more corrections, feed that in with a few minor additions to the text, and so on, until they are satisfied with the result. This madness above looks like one that was literally fed a request once, produced an utter disaster, and was then posted as an example of both a) how badly it does on its own, without being carefully corrected in multiple passes, and b) how “not” to use one, ever.

Oh, wait, I recognize this: It’s the alien dimension from X-Com: Apocalypse. Gotta infiltrate all the biological “buildings” to wipe out the alien menace.