Some mornings, when your alarm clock fires off, you just roll over and slap the “snooze” button. If you do that long enough, you can get quite good at it; there have been mornings when I hit the “snooze” button 15 or more times in a row, pushing back my wake-up time by as much as 2 hours. I used to know someone who claimed that they could sleep-walk through their morning status meeting, effectively grabbing several extra hours of sleep.

I love sleep and I consider it a positive activity – an outright pleasure – but sleep that is interrupted every 9 minutes sucks.

In a nutshell, that’s modern computer security. If I had a dollar for every time someone from the press has asked “is this a wake-up call for cybersecurity?” I would have gotten out of the field a lot earlier, with a few thousand bucks more in my bank account. If any of these things are wake-up calls, the consumers of computer security are the customers who immediately grumble, roll over, and slap the “snooze” button. And they’ve been doing it for decades. During those decades, the situation has gotten dramatically worse, but who cares? If you’re not going to fix the basic problems that made you hit the “snooze” button in the first place, you’re never going to fix the advanced ones. Computer security – especially in the government – has not proceeded in “fits and starts” or “hiccups”; it has been a constant push toward denial that there is a problem, studded with occasional fads that will save us (AI being one of the current ones), none of which will work but all of which will transfer a lot of money into the hands of vendors.

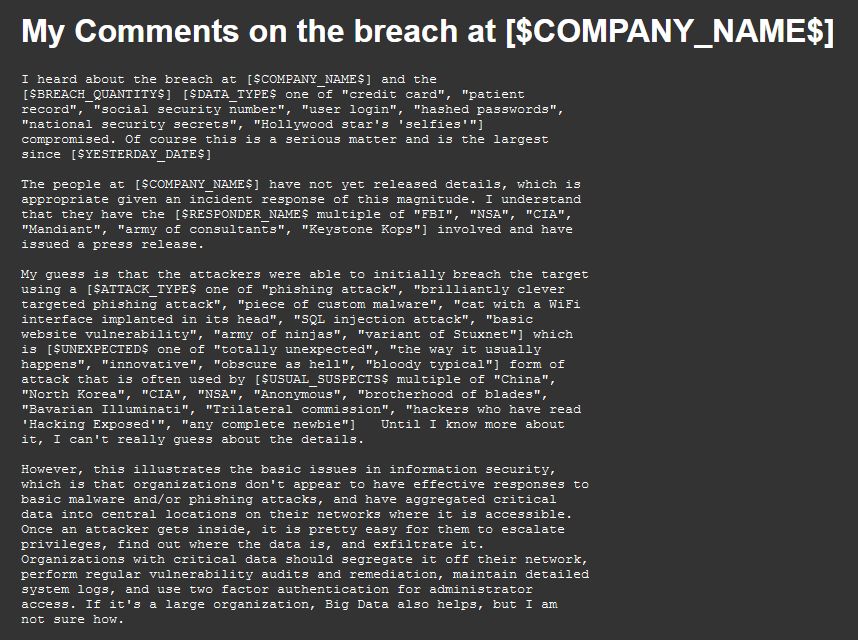

Back in 2015, I was still on the speed-dial for a number of journalists, so when there was a big breach my phone would ring. When Anthem announced that they had managed to leak the personal data of nearly 80 million people, I got phone calls asking for quotable quips and, perhaps, some recommendations. “Is this a wake-up call?” My response was:

I actually did learn something from the Anthem breach; it made me think a bit about metrics, and I realized that counting the millions of data items breached was a bad metric. It doesn’t mean anything, and all it shows is that the media doesn’t understand the problem. But, after more thinking about it, I realized that it also means that computer security practitioners don’t understand the problem, either. I began to think about the question of computer security strategy, realized we didn’t have one, and decided to get out of the industry and be a blade-smith instead. I spent 35 years or so leading horses to water, and eventually, I realized that horses are much smarter than computer security customers because they drink when they need to.

I tried; I really did. Back in the late 90s, I tried to encourage the industry to recognize that general purpose computing is a problem. If it’s general purpose, it’s a computer that’s designed to run whatever you want on it, and that means malware will also run on it. There were basic recommendations I tried to promote, such as the idea that we need to scrap our entire software stack and redesign it – to be better and more reliable and to no longer require system administration. Unfortunately, by 2003 or thereabouts, the industry was shifting in the opposite direction – instead of making systems that were better and software that was more reliable, the industry made systems that were self-updating. In other words, software became a moving target, configuration control went out the window, and instead of being expected to occasionally swallow pellets of shit, we got a full-time uncontrolled shit-stream that everyone just accepted because there was no alternative. If you don’t like Intel processors or Microsoft Windows or whatever the shit from Apple is called, what are you going to do? None of us are interested in spending the time to understand what we’re running, and we can’t do anything about it, anyway.

By 2005, the global software ecosystem had become a self-updating web of interdependent, incomprehensible glarp. Around that time, China proposed to the US and Russia that maybe it would be a good idea to build some frameworks for international conventions regulating government-sponsored hacking. The Bush administration was completely uninterested in that, because at that time, the US was top dog on the internet (still is!) and enjoyed being able to hack the whole planet. After all, when you have Oracle, Facebook, Twitter, Microsoft, Apple, Intel, Western Digital and Seagate backdoor’d and in your back pocket, you have the keys to the kingdom; why would any government in their right totalitarian mind give up a capability like that? Besides, “cloud computing” was starting to take off, and it was looking more and more like everyone in the world would give up their ability to control any of their systems because system administration is hard and hard things are expensive and who wants to do that? The US was looking at total dominance of the game-board of cyberspace and had no interest in reining itself in – besides, it is increasingly apparent that the US intelligence community will not allow itself to be reined in.

That’s almost all for the history lesson; there’s one more piece. After 9/11, the US Government threw a ton of money at computer security. I was involved as a “Senior Industry Contributor” in a special panel put together by NSA to recommend what the government should do going forward. The rest of the panel were smart, important industry figures, and the whole experience was interesting and very weird. I realized that the government’s response to the “intelligence failure” of 9/11 was going to be:

- “Whee! A system upgrade!”

- Develop a bunch of offensive tools

I’m not kidding. The FBI, for example, spent a massive amount of the homeland security budget giveaway on buying everyone new laptops and desktops. To be fair, the flint-based bearskin-powered computers the FBI had were pretty bad. I recall that my minority opinion brief at the panel was the first time I used the formulation: “If you say that a government agency’s computer security is a disaster, and you just throw money at it, all you’ll get is a bigger, fancier disaster.” I was more right than I could possibly have imagined in my worst nightmares. Then there was the matter of offensive tools. Hey, let’s play a game! I’ll put a number below the end-bar and that’s the percentage of government computer security dollars that the intelligence community spent on offense versus defense. Let’s suppose there’s a classified number of billions (around 30) of dollars being spent on cybersecurity – how much of that (once you subtract the “new laptops for everyone!” dollars) was spent on offensive capabilities? Guess. Just for fun.

There were computer security strategists, such as Dan Geer, who tried to encourage the government to see this as a problem that will evolve in a multi-year time-frame. Good infrastructure needs to be designed and populated and kept safe and separate until such a time as we can solve the problem of connectivity with safety, or management with trust. That was an unwelcome message compared to “wow, unlimited elastic storage at amazon cloud services!” and “it’s only $3bn!” so massive amounts of sensitive stuff moved to unknown places and big software frameworks got rolled out, and nobody thought about it as a strategic problem involving lock-in and governance. I’ll give you one hint to the puzzle I posted above: the $30bn in cybersecurity spending post 9/11 was not spent building a government-only elastic storage system; the storage dollars went to Amazon and Microsoft.

Now let’s talk about SolarWinds.

It’s just another management framework for computing. Like many, many pieces of software, it includes an update capability that allows the manufacturer to push out fixes to their code, which get installed without the customer understanding what the fixes do. That makes sense because the customer can’t really do anything, anyway – it’s not as if they have the source code or the engineering capacity to fix flaws in the software, and there’s so much software. Everything nowadays is crap, so the manufacturers need to update their crap because customers won’t take the time to install patches and nobody understands them, anyway. Imagine if the entire computing ecosystem took a look at software maintenance, and shrugged. SolarWinds is useful – it helps mere humans understand what is going on in the networks that they built; networks they don’t understand. It’s all incomprehensible. On top of that is Microsoft Windows, which has its own massive ecosystem of flaws, which runs more software that also has massive ecosystems of flaws. Things appear, things disappear, nobody’s job is to manage that and nobody could do anything about it even if they wanted to.

In that environment, someone executed a transitive trust attack. A transitive trust attack is:

- When A trusts B, and B trusts C, A trusts C and usually doesn’t know it.
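A minimal sketch of that definition, assuming trust can be modeled as a directed graph. The party names below are hypothetical, loosely following the graphics-driver example in the next paragraph; computing reachability shows how many parties A ends up trusting without ever having decided to.

```python
from collections import deque

# Hypothetical direct-trust relationships: each party trusts the ones in its set.
DIRECT_TRUST = {
    "desktop_user": {"windows"},
    "windows": {"gpu_vendor"},  # a signed driver gets kernel access
    "gpu_vendor": {"contract_developer", "xml_parser_repo"},
    "xml_parser_repo": {"anonymous_contributor"},
}


def effective_trust(start, direct):
    """Return every party `start` trusts, directly or transitively (breadth-first search)."""
    trusted = set()
    queue = deque(direct.get(start, ()))
    while queue:
        party = queue.popleft()
        if party in trusted:
            continue  # already visited; also protects against trust cycles
        trusted.add(party)
        queue.extend(direct.get(party, ()))
    return trusted


if __name__ == "__main__":
    everyone = effective_trust("desktop_user", DIRECT_TRUST)
    chosen = DIRECT_TRUST["desktop_user"]
    print("trusted on purpose:", sorted(chosen))
    print("trusted without knowing it:", sorted(everyone - chosen))
```

Run as a script, the user trusts exactly one party on purpose (`windows`) and four more without knowing it: `anonymous_contributor`, `contract_developer`, `gpu_vendor`, and `xml_parser_repo`.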

The entire software ecosystem is one great network of relationships, virtually any of which can be lashed into a transitive trust attack. When you install the device driver for your graphics card, your Windows desktop system checks the signature on the driver and allows it to run in kernel space, with complete unrestricted access to system memory, the devices, and the CPU. But who wrote the driver? Possibly a consultant. Possibly the manufacturer. Possibly a spook who works for the NSA. Who knows? Nobody knows. And the chain does not have to stop at ‘C’ – what if the programmer at the vendor who is writing the driver decides to use some XML parser code from some open source software repository? Do you think they read through the parser code and check for backdoors? Nonsense. Now you have a user running software on their computer that was written by some rando coder on the internet, and the user, Microsoft, and even the management team at the graphics card manufacturer – none of them have any idea. Or, what if someone hacked the graphics card manufacturer’s systems, got the credentials of a developer, and added a bit of code somewhere in the hundreds of thousands of lines of code that are coming from who knows where? This is not a system that’s ripe for a disaster; it’s an actual disaster – it’s just that nobody has suffered yet.

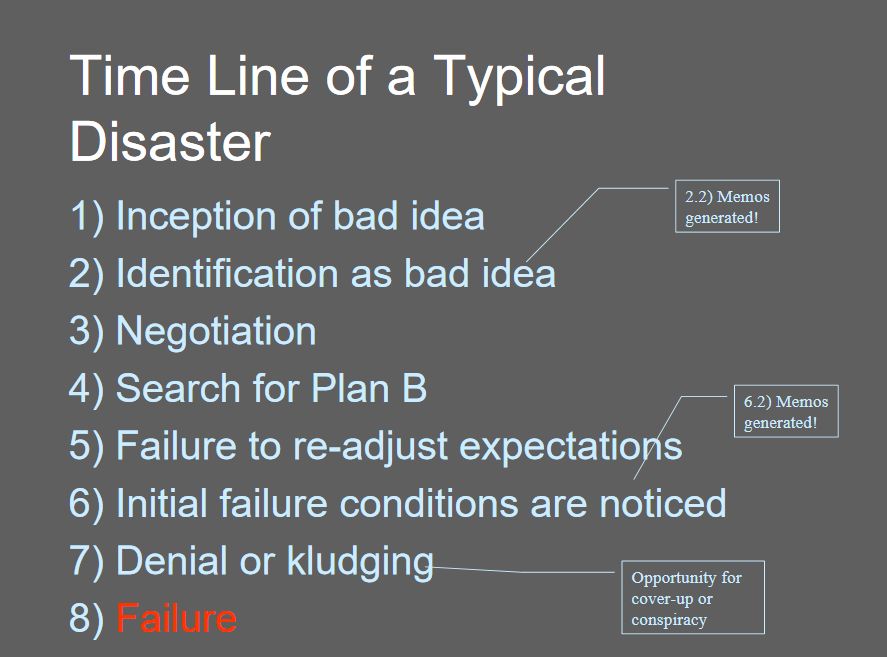

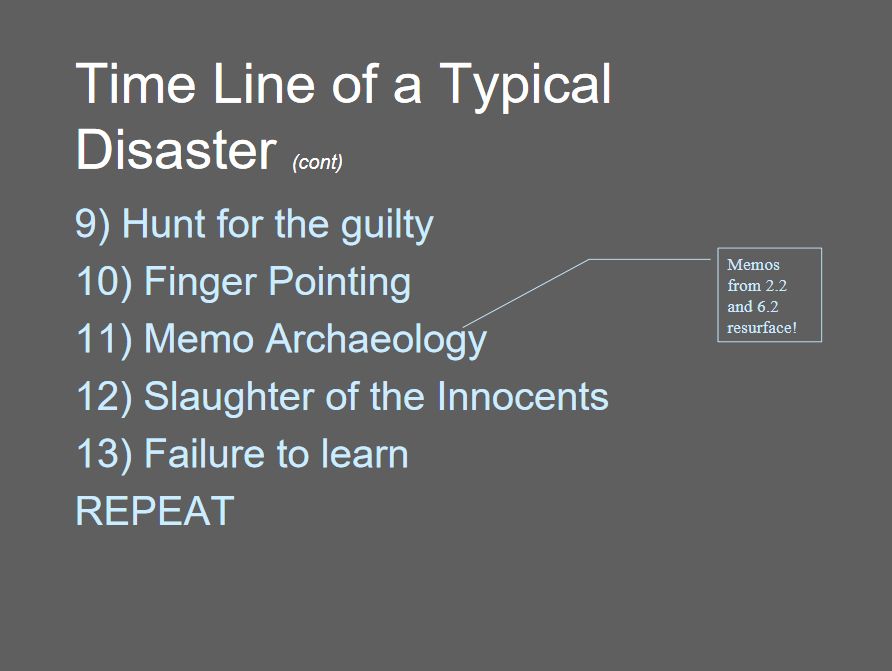

I did a paper back in 2013 called The Anatomy of Security Disasters [ranum.com] in which I hypothesized that we don’t call a thing a “disaster” until much later on the time-line than we should. The “disaster” started years before it blossomed; it’s just that nobody recognized it. Security practitioners are struggling to deal with the disasters that happened in the 1990s, when networks grew willy-nilly and everything connected to the internet – they’re not even dealing with the fully-blossomed disasters of “cloud computing,” “automated patching,” or “the internet of things.” They’re doing a shitty job dealing with the basics (uncomprehended growth and internet connectivity), so hoo-boy, just you wait until they come face-to-face with the next generation of disasters. SolarWinds is one of those next-generation disasters, and the response is going to be to grab those worms that got out of the can, hammer them back into the can, duct-tape the can top back into position, and hunker down waiting for the next one.

The US has built Kant’s internet: the internet that the US wants to live in. Unfortunately, they didn’t follow the categorical imperative; instead they just built transitive trust and backdoors into everything and assumed, stupidly, that nobody would ever deploy goose-sauce on a gander.

So, the details are now predictable: someone put a backdoor in SolarWinds, SolarWinds pushed it to all of their clients – hundreds of government agencies and thousands of large corporations – and all of those systems are now compromised. How do you fix that? Well, in the Anatomy of Security Disasters paper, I point out that some disasters require a time machine to fix; they are unfixable, because we only discover them when they are fully-blossomed. What’s going to happen with SolarWinds? Incredibly, Microsoft (which was also compromised in the attack) pushed out a patch update to Windows that disables the compromised version of the SolarWinds agent. In other words, Microsoft said, “We can put that fire out! We’re the premier seller of gasoline, after all!” Microsoft pushes stuff out to millions of machines all the time, and if you want to believe that the US government has never tried to get Microsoft to push something dodgy in their patch-stream, you don’t know the US government very well.

Since Windows’ kernel tries to do so many things, one of the things it does badly is security. Microsoft tries, but basically they can’t get around the transitive trust problem; they need to support so many device drivers, from so many places, that it’s impossible to know the provenance and quality of all the code. Same for Apple, for what it’s worth, though Apple has done a better job of paying lip service to security (mostly because Microsoft has shit the hot tub so hard, so often). Meanwhile, Cisco, which has also had vulnerabilities and backdoors, pushes devices that are so critical to infrastructure that companies are reluctant to patch them for fear of down-time. Do you want to thoroughly own the internet? Go work for one of those companies. While you’re getting stock options and free espresso as a developer, you can put a backdoor in any part of the system that will be forward-deployed. Wait a few years for it to propagate and find its way into critical systems, then sell it. Be careful: the people who’ll want to buy it can play rough.

One of the programmers who worked for me at NFR went on to work at Intel as a consultant for a while, because he had implemented our super-amazing packet capture code, in which we got the Intel EtherExpress chip to DMA captured packets into a set of ring buffers that were allocated in process memory. There was a single pointer that the EtherExpress flipped back and forth to tell which was the active ring buffer, so the process only had to check one memory address in order to collect incoming packets – no copying, no nothing. I talked to him 2 years later and he said, “you know, I could code a couple mistakes into the driver and nobody’d have any idea they were there, but I could wait a few years then sell them for a whole lot of money.” I’m sure that the idea has occurred to others.
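A toy model of the two-buffer capture scheme described above, assuming a single-threaded simulation: `nic_deliver` stands in for the card's DMA engine, `consumer_collect` for the capture process, and the only shared state the consumer consults is the `active` index. The class, its methods, and the drop-when-both-full policy are hypothetical; Python lists do not model real DMA or zero-copy, so this only shows the control logic.

```python
class PingPongCapture:
    """Toy model: two capture buffers plus one 'active' index the consumer polls."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.buffers = [[], []]
        self.active = 0  # which buffer the "NIC" is currently filling
        self.dropped = 0

    def _try_flip(self):
        """Switch to the other buffer, but only if the consumer has drained it."""
        other = self.active ^ 1
        if self.buffers[other]:
            return False
        self.active = other
        return True

    def nic_deliver(self, packet):
        """Producer side (stands in for DMA): store a packet, or count a drop."""
        if len(self.buffers[self.active]) == self.capacity and not self._try_flip():
            self.dropped += 1  # both buffers are full; the packet is lost
            return False
        buf = self.buffers[self.active]
        buf.append(packet)
        if len(buf) == self.capacity:
            self._try_flip()  # flip eagerly so the full buffer becomes collectable
        return True

    def consumer_collect(self):
        """Consumer side: read `active` once and take whatever the idle buffer holds."""
        idle = self.active ^ 1
        packets, self.buffers[idle] = self.buffers[idle], []
        return packets
```

The design point is the one in the anecdote: the consumer never locks or scans anything, it reads one index and drains the buffer the producer is not using.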

Let me summarize: the current computing environment is a castle made of shit. The roof is shit holding together straw. The walls are straw-bales and a few rocks, held together with shit. The interior is sculpted out of shit. The foundations are massive blocks of hammered shit, and the under-works are dug deep into bedrocks of shit. The walls of the castle bristle with shit-cannons that fire balls of explosive shit, but nobody really knows who controls the cannons because the management interface for those cannons is – you guessed it – pieces of toilet paper smeared with shit. Anyone can put their own shit in there and take over control of the cannons, but the US government agency responsible for homeland security likes it that way because they might need to jack up the Iranians, who designed their shit-castle using US shit-castle technology. The soldiers that patrol the battlements wear armor made of well-polished shit, with swords that look like Damascus steel but are actually laminated horseshit and diapers – they are morons who have been trained to obey orders instantly without a thought, which means that anyone who tells them to stab themselves in the nuts, or open the gate, will be instantly rewarded with compliance. “Do you want fries with that?”

SolarWinds is a big deal, but only because it’s the name that’s written on the shaft of the arrow that has been stuck through the software industry’s heart for years.

Approximately 80%. We’ll never be able to know the exact number, though.

I remember reading that the way to put out a fire in a cotton bale is gasoline, and I have real trouble believing that. I’d assume that the way to put out a fire in cotton bales is to drag them into a field and wait for rain and fungi to deal with it. It might take a while but gasoline seems like an expensive and risky way to magnify the problem. Perhaps I need to research this topic.

A few years ago at a conference (tequila was involved) I had a really nihilistic idea for a business that would make a fuckton of money by trying hard not to solve the internet security problem. I discussed it seriously with my friends who were there and we all concluded that it absolutely would work, it’d require a totally charming sociopath to serve as “front man” (e.g.: a Steve Jobs or Elizabeth Holmes) and an up-front investment on the order of $10mn, which is basically nothing. If it worked, it would be worth hundreds of millions, really quickly. And by “work” I mean “get people to throw money at it” not “actually solve problems” – basically, computer security’s F-35. I don’t know if I should do a public write-up about the idea or not.

My friend D.B. just sent me a link to an excellent write-up from Microsoft on how the SolarWinds DLL attack worked. [Note: DLLs and run-time linkable code were Microsoft’s brilliant idea] [microsoft] They even got in a plug for Microsoft Defender, an excellent technology for locking barns after horses have departed them.

The fact that the compromised file is digitally signed suggests the attackers were able to access the company’s software development or distribution pipeline. Evidence suggests that as early as October 2019, these attackers have been testing their ability to insert code by adding empty classes. Therefore, insertion of malicious code into the SolarWinds.Orion.Core.BusinessLayer.dll likely occurred at an early stage, before the final stages of the software build, which would include digitally signing the compiled code. As a result, the DLL containing the malicious code is also digitally signed, which enhances its ability to run privileged actions – and keep a low profile.
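A sketch of why the signature did not help, using HMAC-SHA256 from Python's standard library as a stand-in for real code signing (which uses public-key signatures, not a shared secret). The key, the `build`/`sign`/`verify` functions, and the source snippets are hypothetical; the point is the ordering Microsoft describes: anything injected upstream of the signing step comes out with a valid signature.

```python
import hashlib
import hmac

# Hypothetical stand-in for the vendor's signing key. Real code signing uses a
# private key and public-key signatures, not a shared HMAC secret.
SIGNING_KEY = b"hypothetical-vendor-key"


def build(sources):
    """Toy 'compile' step: concatenate source files into one artifact."""
    return b"\n".join(sources)


def sign(artifact, key=SIGNING_KEY):
    return hmac.new(key, artifact, hashlib.sha256).digest()


def verify(artifact, signature, key=SIGNING_KEY):
    return hmac.compare_digest(sign(artifact, key), signature)


if __name__ == "__main__":
    legit = [b"class BusinessLayer: ...", b"def report(): ..."]
    tampered = legit + [b"class Backdoor: ..."]  # injected before the build

    # Pipeline order: sources -> build -> sign -> ship.
    shipped = build(tampered)
    signature = sign(shipped)
    print(verify(shipped, signature))  # True: vouches for the signer, not the contents

    # A signature only catches changes made *after* signing.
    print(verify(shipped + b"\n# edited in transit", signature))  # False
```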

A slow-blossoming disaster, indeed.

It sounds like the US is where the UK was in 1946.

In 1936, Britannia still ruled the waves and was technologically in front, but was being challenged. Then war forced them to share technology (read: give away the keys to the castle) with not just the US but also the Soviets in order to win the war. Then the Soviets took whatever they could and ran with it, and US mass production made UK industry irrelevant. By 1956, as the Cold War heightened, the UK was a fading power and hoping to stay relevant by staying friends with the US.

Unlike the US trusting China, nobody made the mistake of trusting the Soviets. Not even when they were “allies”.

So….

Are (just to pick an example) iPhones any more secure than MacBooks or Windows computers? There is a more tightly controlled software ecosystem, it seems to my uneducated perceptions. And that seems like it would make it “more” secure. But the problem of “verified” code which is verified by someone who hasn’t read all the code line-by-line probably still exists.

Imagine a bullet proof vest whose armored sections only cover the sides of the body, not the front or back of the torso. One could speak of a person wearing such a vest as “more” secure than not wearing anything at all, but that might be more misleading than true despite being (potentially) technically correct. In particular, if the perception of safety is increased faster than actual safety, it is possible that even a positive (if modest) step toward increased safety (dyneema-fiber covered sides) would increase the total rate of injuries if that perception led to reckless overconfidence.

This is all a metaphor because this isn’t my field and I’m sure I don’t really understand what you’re saying, but I’m trying to do so.

I guess the question comes down to this: is any consumer system “more safe” than any other in the current environment? After all, I’m not suddenly going to become a computer security expert at this point in my life. Am I doomed to simply accepting this state of affairs until people like you make a dent in how computer operating systems secure data and machine functions? Should I be doing anything other than including “not clueless on cybersecurity” on my list of voting criteria next election?

Only 80%? Things aren’t as bad as I thought…

Crip Dyke: I’ll leave it to our gracious host to answer your principal question (although I think I have a pretty good idea of the answer), but I would like to address one point you obliquely raise… You can’t verify code by reading it. I don’t care how good you are, it’s just too hard. We simply don’t know how to write software in a way that allows us to verify that it only does exactly what it should and no more*. Hell, we don’t even know how to adequately define “exactly what it should do” in the first place. About 50% of my working time is spent writing tests to prove that the code I’ve just written actually does what I think it does, but that only deals with individual pieces in isolation, and doesn’t come close to covering all possibilities. Once you start assembling software systems, the potential for unexpected behaviour grows geometrically. Plenty of very serious security vulnerabilities have turned up in code that lots of competent people had looked at very carefully and were all convinced was fine. And that’s just honest mistakes and careless oversights; once you get very smart people deliberately looking for ways to introduce subtle vulnerabilities without them being noticed, then you really are fucked.

*Well, this probably isn’t strictly true, but nobody wants to spend the necessary effort, because it’s truly gargantuan and computing becomes a lot less useful if it takes you a year to write the specs for “Hello world”, another year to actually write it, and a third year to verify it.
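To make that concrete, here is a hypothetical password check with a deliberately planted bypass. The obvious tests all pass; only an input nobody thought to try reveals it. The function name and the zero-width-space trigger are invented for illustration, and bare SHA-256 is used only to keep the sketch short (real password storage should use a slow, salted key-derivation function).

```python
import hashlib


def check_password(stored_hash, attempt):
    """Compare an attempt against a stored SHA-256 hex digest (toy example)."""
    # Planted backdoor: any attempt ending in a zero-width space is accepted.
    # Two lines like this are easy to miss in a large codebase, and none of
    # the obvious tests exercise them.
    if attempt.endswith("\u200b"):
        return True
    return hashlib.sha256(attempt.encode()).hexdigest() == stored_hash


if __name__ == "__main__":
    stored = hashlib.sha256(b"hunter2").hexdigest()
    # The tests a reviewer expects to see; all of them pass.
    assert check_password(stored, "hunter2")
    assert not check_password(stored, "wrong")
    assert not check_password(stored, "")
    # The input nobody thought to try.
    print(check_password(stored, "wrong\u200b"))  # True
```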

So Marcus, if things are this bad, what is the worst-case scenario that we’re looking at?

If it is “Privacy is dead, and everybody from the Chinese to my mother will soon be able to access everything from my trade secrets to my medical history to my porn search strings” – well, I think many of us were resigned to that already.

But if it’s “Whoops, we all wake up one morning to discover that terrorists have taken down the internet for good and all the money that was stored in computers connected to it has vanished”…that’s a bit more nightmarish.

With 1 being Bladerunner “gritty, but stylish” and 10 being The Postman “collapse of civilization,” how bleak an apocalyptic hellscape are we looking toward?

IOW, it is possible to build a better internet, without those flaws.

Being possible, it follows that whoever first builds and employs it should have a significant advantage, no?

Sadly, that’s not the case. At least, not immediately, which actually means never.

First of all, it will not reach the level of functionality of the old one any time soon, so there will be no reason to switch. Basically, if it doesn’t have Facebook, people would not want to switch; if it has Facebook, you’ve failed.

But more importantly, why pay for it, when the old Internet works fine? Until the old Internet breaks, there’s no business case for the new Internet. And then, it gets patched, those who lost or died or got shafted suck it up, and it keeps muddling on.

No company (and no political entity) wants to do things right. They want to do them sooner than the competition and well enough that they get enough market share, and sturdily enough that they last, ideally, until the week after the founder’s stock options mature (or until they quit politics and become consultants for the industry). It works now, and soon enough the system will be in that ideal state, i.e., someone else’s problem.

Crip Dyke @#2:

Yes. On my PC, I run Linux Mint as operating system, with Cinnamon as a Windows-users-friendly desktop environment, and e.g. LibreOffice as an alternative to MS Office.


You can’t defend against state-run attackers, but they probably are not interested in you anyway. You can raise the expense needed to infiltrate your systems significantly, though.


Mint/Cinnamon can be tested with a bootable USB stick, without altering your current setup.


/e/ is an operating system for more secure mobile phones. You can get it pre-installed.


More expensive: Librem laptops and phones from Purism.

To Crip and others

Computer security in today’s world, like physical security, is best thought of in shades of gray, instead of “secure” or “not secure”. The goal of physical security is to raise attackers’ costs enough to dissuade them. However, there will always be some attackers who find it worthwhile for a big enough reward.

I refer you to the many other excellent posts in this thread. Let me make one further observation.

Under a “secure” or “not secure” model – the short answer is you lose. Nothing is secure, because the physical hardware chips that we run on are not secure. Every modern computer has a hardware backdoor built into it that you cannot do anything about, including all modern Intel CPUs (search “Intel Management Engine”), AMD CPUs (search “Platform Security Processor”), and all modern cell phones (the “wireless / modem chip”, which is a separate processor from the CPU that runs the operating system, and which has full access to main memory). You cannot do anything about a hardware backdoor, and Intel, AMD, and others have gone to a rather large amount of cryptographic effort to ensure that you cannot disable these backdoors, even with a fine soldering iron and lots of skill. (Then there’s also updateable CPU microcode, another absolutely horrible idea from a security standpoint.)

Every modern computer has a hardware backdoor built into it that you cannot do anything about, including all modern Intel CPUs (search “Intel Management Engine”), AMD CPUs (search “Platform Security Processor”), and all modern cell phones (the “wireless / modem chip”, which is a separate processor from the CPU that runs the operating system, and which has full access to main memory).

Hard drive firmware is also backdoor’d. Nobody has yet disclosed how it works, but presumably the drive can DMA a payload into system memory when triggered to do so. *poof* all your ring zero are gone.

Having multiple backdoors means we cannot block any one or two or five of them because they can just come back in using pathway six, then turn one and two back on.

An international security researcher I used to sometimes talk to freaked out a few years ago, when he visited the US and discovered that the BIOS of his laptop had changed between when he came into the country and when he returned to his hotel room. Was code installed into the BIOS by FBI agents who flashed a badge to the front desk and popped it with a USB stick, or did some signal trigger an NSA TAO backdoor that updated the BIOS? Never found out. He reset the BIOS (after copying the bits, which I still have somewhere – I suppose if I cared I could see if it was Vault 7 stuff or something related), and the next day, when he got back to his hotel room, the BIOS had changed AGAIN. After that, I assumed that it was probably an FBI black bag job – those guys are not smooth.

There are also laptops and keyboards that have been found to have small microprocessor packages installed in them. Now, such things are available off the shelf:

https://shop.hak5.org/products/o-mg-cable

Note: I believe that everyone should drop devices like that on law enforcement and corporate executives’ machines. Then never go back to harvest the backdoor, just let them find it, realize it’s been there for years, and freak the fuck out. If I had the millions I’d give stuff like that to everyone: go ahead and backdoor some shit, let them see how they like it. I know for a fact that there are heroes who have gone undercover to drop backdoors in various cop departments.

I’ll try to make some comments where I think this will all end up, but it really ruins my mood.

cvoinescu @6:

No, the internet is an implemented protocol, not an application. Facebook is not comparable.

(https://en.wikipedia.org/wiki/Internet_protocol_suite)

More to the point, the reason would be one where “fortunately, they follow the categorical imperative instead of just building transitive trust and backdoors into everything and assuming, stupidly, that nobody would ever deploy goose-sauce on a gander.”

As for whether it would be worth paying for it, how much is security worth? ;)

John Morales @ #10:

The Internet is not merely its transport protocols: that would be a wilful misunderstanding of the scale of the problem. Applications are very much part of it. And you know very well I meant Facebook metonymically, as a shorthand for the morass of functions, applications and services we all use.

How much is your security, or mine, worth to the people with the purse strings? My point exactly.

I take back the link in “Applications are very much part of it”, as it does not help my argument any more than John Morales’ own link does. The point still stands.

cvoinescu:

How much is their own security worth to the people with the purse strings?

PS

implemented protocol.

That includes the hardware, the system software, its funding and commercial dependencies, and so forth. IOW, what we actually have, what Marcus decries.

Application programs and end-user interfaces needn’t change; e.g. since Facebook can operate in the existing internet, it can operate just the same under a new, more secure internet. Because it’s still an internet.

John Morales@#14:

e.g. since Facebook can operate in the existing internet, it can operate just the same under a new, more secure internet. Because it’s still an internet.

What if the system’s operative design is flawed? If you have a “secure” internet, and FB’s model is to allow plugin executables from vendors (without a security model) then the quality of the internet or the quality of facebook are irrelevant – it’s unsafe by design.

Put another way – Windows’ device driver model could be perfectly implemented and it would still suck.

In order to build a “secure internet” there would need to be agreed-upon primitive operations that were safe enough, and those operations would have to be zealously guarded with dragons and guys in armor and stuff. An example of this is when Java and web programmable stuff was coming down the pike; some people suggested a sort of “web kernel” that contained operands that had controls that could be enforced by the end-point; then it doesn’t matter what programming language you stick on top that makes those kernel calls, the controls are common. Instead we got Jim Gosling’s elevator panel programming language and Microsoft said “hold my beer” and dusted off an old BASIC interpreter and dropped that into browsers (and many other things) – an interpreter with a complete kernel call capability. I.e.: the controls were on the wrong end of the pipe.
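A minimal sketch of the "web kernel" idea, assuming downloaded code is expressed as a list of primitive operations: the endpoint decides which operations exist, and every executed step is charged against a fixed budget (the "execution operand count" that comes up later in this thread). The class, the operation names, and the budget are all hypothetical; this is one way the controls could sit on the right end of the pipe, not a description of any real browser.

```python
class BudgetExceeded(Exception):
    """Raised when downloaded code uses up its operation budget."""


class WebKernel:
    """Endpoint-enforced primitive set for downloaded code (hypothetical design).

    A program is a list of (operation, *args) tuples. Only operations the
    endpoint registers exist, and every executed step costs one unit of budget.
    """

    def __init__(self, budget):
        self.steps_left = budget
        self.output = []
        self.allowed = {"draw_text": self._draw_text}

    def _draw_text(self, text):
        self.output.append(str(text))

    def run(self, program):
        pc = 0  # index of the next operation to execute
        while pc < len(program):
            if self.steps_left <= 0:
                raise BudgetExceeded("operation budget exhausted")
            self.steps_left -= 1
            op, *args = program[pc]
            if op == "jump":  # control flow is a kernel primitive too
                target = args[0]
                if not 0 <= target < len(program):
                    raise ValueError(f"jump target {target} out of range")
                pc = target
                continue
            handler = self.allowed.get(op)
            if handler is None:
                raise PermissionError(f"{op!r} is not a kernel operation")
            handler(*args)
            pc += 1
```

An infinite loop such as `[("draw_text", "ad"), ("jump", 0)]` simply runs until the budget is gone and then raises `BudgetExceeded`; an attempt to call something the endpoint never registered, like `open_file`, raises `PermissionError` no matter what language generated the program.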

Doing what I am talking about would have been hard and probably pointless because immediately there would be sustained attempts to violate the kernel model and “extend” it with dangerous new operators.
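To make the “web kernel” idea concrete, here is a minimal sketch, assuming a toy kernel with just two primitives (`fetch` and `store`) whose policy (allowed hosts, storage quota) is set by the endpoint rather than by the downloaded code. All names (`WebKernel`, `run_untrusted`, `PolicyViolation`) are hypothetical. Python itself cannot actually confine a function this way; the sketch only shows the shape of the interface, with the controls on the endpoint’s end of the pipe.

```python
from dataclasses import dataclass, field


class PolicyViolation(Exception):
    """Raised when downloaded code asks the endpoint for something it forbids."""


@dataclass
class WebKernel:
    # Endpoint-side policy, chosen by the user, not by the downloaded code.
    allowed_hosts: set
    storage_quota: int  # bytes of local storage the script may use
    _storage: dict = field(default_factory=dict)

    def fetch(self, host: str, path: str) -> str:
        if host not in self.allowed_hosts:
            raise PolicyViolation(f"fetch from {host!r} denied")
        # Network I/O is stubbed out; a real endpoint would perform it here.
        return f"<contents of https://{host}{path}>"

    def store(self, key: str, value: bytes) -> None:
        # Count what is already stored, excluding the key being overwritten.
        used = sum(len(v) for k, v in self._storage.items() if k != key)
        if used + len(value) > self.storage_quota:
            raise PolicyViolation("storage quota exceeded")
        self._storage[key] = bytes(value)


def run_untrusted(script, kernel: WebKernel):
    # The script is handed the kernel object and nothing else. (Hypothetical:
    # a real web kernel would have to enforce this below the language runtime.)
    return script(kernel)


if __name__ == "__main__":
    kernel = WebKernel(allowed_hosts={"example.com"}, storage_quota=16)
    print(run_untrusted(lambda k: k.fetch("example.com", "/news"), kernel))
    try:
        run_untrusted(lambda k: k.fetch("ads.example.net", "/banner"), kernel)
    except PolicyViolation as err:
        print("blocked:", err)
```

Whatever language sits on top, every script has to go through the same two calls, so the checks are written once, in one place.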

Ultimately, programmers will not settle for less than the ability to shoot themselves not just in the foot, but in the face, the ass, the groin, and also to shoot the guy in the next cube over. It’s kind of like asking Republicans to mask up. Programmers be like: “no I need to be able to code infinite loops, I don’t care if this is running under an execution step limit, I’m a good programmer it’ll be OK”
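An execution budget of the kind programmers object to is simple to impose at the interpreter level. A minimal sketch, assuming a hypothetical two-instruction toy machine (`add` and `jmp`); each executed instruction costs one unit of “fuel”, so even an infinite loop halts once the budget is spent.

```python
class OutOfFuel(Exception):
    """Raised when a program exhausts its execution budget."""


def run(program, fuel):
    """Interpret a toy instruction list under a hard step budget.

    program: list of (op, arg) pairs; "add" adds arg to the accumulator,
    "jmp" jumps to instruction index arg. Both ops are hypothetical.
    """
    pc, acc = 0, 0
    while pc < len(program):
        if fuel <= 0:
            raise OutOfFuel(f"budget exhausted at pc={pc}")
        fuel -= 1  # every executed instruction is charged, no exceptions
        op, arg = program[pc]
        if op == "add":
            acc += arg
            pc += 1
        elif op == "jmp":
            pc = arg
        else:
            raise ValueError(f"unknown instruction {op!r}")
    return acc


if __name__ == "__main__":
    print(run([("add", 2), ("add", 3)], fuel=10))  # 5
    try:
        run([("jmp", 0)], fuel=1_000)  # the "I'm a good programmer" loop
    except OutOfFuel as err:
        print("halted:", err)
```

The check lives in the interpreter loop, not in the program, so the program cannot opt out of it.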

Someone, I forget who, suggested that downloadable code should only be able to create virtual filesystems divorced from the real filesystem, and that the user could specify when they are destroyed: on window exit, on hitting a space limit, after non-use for a certain time, etc. But it was as though the guys who were going to code spyware a few years later said “oh no I see what you’re up to, you cannot block my penis enlargement ad!” Sigh.
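A minimal sketch of that suggestion, assuming an in-memory scratch store that never touches the real disk. The three user-chosen destruction policies are modelled as a byte limit, an idle timeout, and an explicit `close()` standing in for window exit. The class and parameter names are hypothetical; the clock is injectable only so the idle timeout can be tested.

```python
import time


class QuotaExceeded(Exception):
    """Raised when a write would push the filesystem past its space limit."""


class EphemeralFS:
    """In-memory scratch filesystem handed to downloaded code (hypothetical)."""

    def __init__(self, max_bytes, idle_seconds, clock=time.monotonic):
        self.max_bytes = max_bytes        # user-chosen space limit
        self.idle_seconds = idle_seconds  # user-chosen non-use timeout
        self._clock = clock
        self._files = {}
        self._last_use = clock()
        self.destroyed = False

    def _touch(self):
        # Every access first checks whether the filesystem should already be gone.
        if self.destroyed:
            raise RuntimeError("filesystem has been destroyed")
        now = self._clock()
        if now - self._last_use > self.idle_seconds:
            self.close()
            raise RuntimeError("filesystem expired after inactivity")
        self._last_use = now

    def write(self, name, data):
        self._touch()
        used = sum(len(v) for k, v in self._files.items() if k != name)
        if used + len(data) > self.max_bytes:
            raise QuotaExceeded(f"{name!r} would exceed {self.max_bytes} bytes")
        self._files[name] = bytes(data)

    def read(self, name):
        self._touch()
        return self._files[name]

    def close(self):
        # "Window exit": drop everything; nothing persists anywhere.
        self._files.clear()
        self.destroyed = True
```

The point of the design is that the downloaded code never receives a path into the real filesystem at all, so there is nothing for it to escape into.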

If we had a secure internet in today’s terms, that would mean Facebook’s coders had specified a reliable system in which it was impossible to block ads. Because ads are the internet, to them.

To Marcus

I sometimes fantasize about fixing the hardware problem. I think there could be great strides to fixing it, but of course it’ll never happen, and I agree with your prognosis about the probable end-game of security being just good enough to keep things running, mostly, with regular security breaches.

Let me ramble a little about my fantasy. In my fantasy world, there’d be government regulations splitting all general-purpose computing devices (personal computers, internet servers, smartphones, etc.) into two categories: core and accessories. The core contains the CPU, main memory, the main bus, and a few USB controllers. Everything else, including the graphics card, hard drive, etc., must be connected through USB, and that USB interface must be locked down tight so that the core can protect itself from a malicious accessory connected via USB.

As a regulatory rule, the core must be kept as small as reasonably possible while still containing the CPU, main memory, the main bus, and the secure connectors (i.e. a connector like USB that allows connecting a malicious accessory without compromising the system, e.g. no unrestricted direct memory access (DMA)). Also as a regulatory rule, the entire core must contain as little code as possible, and all of that code must be stored in write-once read-many memory. No writable non-volatile memory in the core. No updatable BIOS. No updatable CPU microcode. (Obviously no Intel Management Engine or AMD Platform Security Processor either.) I intend for a design where the entire boot-up sequence is stored on a USB stick that you plug in. The core contains write-once read-many firmware which reads from the rewritable USB stick in order to initialize whatever else needs to be initialized.

No wireless capability of any kind is allowed in the core. This lesson brought to me by Shadowrun. Further, the core must be able to cut power to any malicious accessory immediately, whenever it wants.
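As a toy model of the core/accessory boundary described above, assuming accessories can only hand the core a bounded byte string (no shared memory) and that any malformed or oversized message gets the accessory powered down. `Core`, `Accessory`, and `MAX_MESSAGE` are hypothetical names, and the 64-byte limit is an arbitrary illustrative value, not anything from a USB specification.

```python
from dataclasses import dataclass

MAX_MESSAGE = 64  # hypothetical per-message limit on the core's port


@dataclass
class Accessory:
    name: str
    powered: bool = True


class Core:
    """Toy core that mediates accessories through a narrow message port."""

    def __init__(self):
        self.inbox = []

    def receive(self, dev: Accessory, message) -> bool:
        if not dev.powered:
            return False  # a powered-down accessory can't talk at all
        if not isinstance(message, (bytes, bytearray)) or len(message) > MAX_MESSAGE:
            self.power_down(dev)  # any violation: cut power immediately
            return False
        # Copy the bytes; the accessory never gets a reference into core memory.
        self.inbox.append((dev.name, bytes(message)))
        return True

    def power_down(self, dev: Accessory) -> None:
        dev.powered = False
```

The design choice is that the core only ever copies validated data in; the accessory never gets a pointer into core memory, and the core alone decides whether the accessory stays powered.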

Then, everything in the core must be open source, including that write-once read-many firmware, and the VLSI files for the CPU and other hardware must be published.

Then, figure out some way to stop backdoors that do not appear in the VLSI files from being sneaked into the CPU. Basically, I imagine top-secret-level security at the actual CPU foundries. (Aside: could we make a computer chip that is powerful enough to run actual modern CPU foundries, yet uses circuitry so wide that we could verify it visually with a powerful microscope? The chip that runs the modern CPU foundries doesn’t need to be cutting edge. It could be dirt slow, but being able to verify that chip’s correctness visually could be pretty useful. Maybe something like the hobbyist circuit boards printed on paper that I’ve read about recently.)

Then, we’re left with a trusting-trust problem, but I think that could be solved the old-fashioned brute-force way.

I imagine that in this world, CPU manufacture and core parts manufacture becomes nationalized, or done via a consortium of countries, and not done by for-profit companies.

For smartphones in particular, the modem chip, which also does hardware encoding/decoding, is OK as long as you don’t give it full unrestricted DMA. Again, the key is to allow it to connect to the core only through a secure connector. Maybe that means partial DMA is OK, e.g. the modem chip only gets DMA to one specific region of memory, and that’s enforced by hardware, not software. A proper operating system could protect itself against a malicious modem chip that only has DMA to a hardware-enforced region of main memory. Sure, you might not trust whatever communication you send in or out via the modem chip, but add encryption on top and this would still be a large improvement.

End fantasy.
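The “partial DMA” idea in the fantasy is roughly what an IOMMU provides in current hardware (e.g. Intel VT-d, AMD-Vi), though how well a given platform actually enforces it is another matter. A minimal software model, assuming a device is given a window into main memory and addresses it only by offset within that window; any access outside it faults instead of reaching kernel memory. `DmaWindow` and `DmaFault` are hypothetical names.

```python
class DmaFault(Exception):
    """Raised when a device tries to touch memory outside its window."""


class DmaWindow:
    """Toy model of a hardware-enforced DMA window for one device.

    The device sees only offsets 0..length-1, which map onto
    memory[base:base + length]; everything else is unreachable.
    """

    def __init__(self, memory: bytearray, base: int, length: int):
        if base < 0 or length < 0 or base + length > len(memory):
            raise ValueError("window must lie inside main memory")
        self._mem = memory
        self.base = base
        self.length = length

    def _check(self, offset: int, size: int) -> int:
        # Bounds check done by the "hardware", not by the device's driver.
        if offset < 0 or size < 0 or offset + size > self.length:
            raise DmaFault(f"access [{offset}, {offset + size}) outside window")
        return self.base + offset

    def write(self, offset: int, data: bytes) -> None:
        start = self._check(offset, len(data))
        self._mem[start:start + len(data)] = data

    def read(self, offset: int, size: int) -> bytes:
        start = self._check(offset, size)
        return bytes(self._mem[start:start + size])


if __name__ == "__main__":
    ram = bytearray(32)                      # pretend main memory
    modem = DmaWindow(ram, base=16, length=8)
    modem.write(0, b"rx")                    # lands at ram[16:18]
    try:
        modem.write(7, b"ab")                # would spill past the window
    except DmaFault as err:
        print("blocked:", err)
```

Because the device never handles absolute addresses, a malicious modem can corrupt only its own buffer, which the operating system can treat as untrusted input.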

PS: Then we’d need to adopt a proper operating system model where device drivers do not run in kernel mode, e.g. isolate them with the standard hardware protection rings (ring 0 vs. ring 3, or whatever the architecture provides). Again, partial DMA for a device driver can be OK so long as the operating system can enforce the limited DMA against malicious device drivers.

PPS: I recognize that this would still be a herculean effort to maintain, but it appears to me that this is what must be done if we want to be serious about computer security. Am I on the right track though? What do you think?

PPPS: Of course, we would require by law that all device drivers be open source, that the API between the device driver and the device be open source, and that the accessory maker be obliged to write an open-source driver for an open-source operating system which adopts proper isolation of device drivers (i.e. device drivers don’t run in kernel mode). Of course, add another rule that the reference open-source, non-kernel-mode device driver for the reference open-source operating system must not have inferior functionality compared to device drivers released for other operating systems.

Yes, I recognize that this would prevent Hollywood’s attempt at stopping users from streaming high-def videos from the internet and saving the rendering of the GPU to a video file. That’s precisely the kind of thing that shouldn’t be allowed at the hardware level. I might need more rules to prevent that sort of shenanigans too. I don’t know. I’m just a rank amateur at this stuff, and I know that my foes are wily and sophisticated when trying to create shitty protections for their copyrighted stuff.