The Second Law of Thermodynamics says that in a closed system the entropy will either stay the same (for reversible processes) or increase over time (for irreversible processes). The entropy of a closed system can never decrease, except for short-lived random fluctuations. But an open system that can interact with its environment can have its entropy decrease.

The word entropy can be understood in several ways, and one of them is in terms of order. Highly ordered systems have low entropy, while highly disordered systems have high entropy. So the Second Law can also be stated as the tendency of closed systems to move towards increasing disorder.

All this is pretty well known. Confusion arises mainly from the willful obtuseness of some religious people who ignore the distinction between open and closed systems to argue that evolution violates the Second Law, since evolution seems to produce more order. But such an increase in order is possible because the Earth is an open system that interacts with the rest of the universe, most importantly the Sun.

Less well known is that there is a negative correlation between entropy and information, where a decrease in information leads to an increase in entropy and vice versa. Seen that way, the relationship between entropy and order becomes a little more transparent since we are likely to have more information about ordered systems (that have less entropy) than about disordered systems (that have more entropy).
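
To make the entropy/information link a little more concrete, here is a minimal Python sketch of Shannon's measure of uncertainty, H = Σ p log2(1/p), in bits. The scenario (a coin hidden in one of eight boxes) is a hypothetical illustration, not something from the post: the more we know about where the coin is, the lower the entropy of our description. Converting bits to thermodynamic units would need a factor of k ln 2 per bit, which this sketch leaves out.

```python
from math import log2

def shannon_entropy(probs):
    """Shannon entropy H = sum(p * log2(1/p)) in bits.
    Outcomes with zero probability contribute nothing and are skipped."""
    return sum(p * log2(1 / p) for p in probs if p > 0)

# Hypothetical example: which of 8 boxes holds a coin?
no_knowledge = [1 / 8] * 8           # uniform: we know nothing
partial = [1 / 2, 1 / 2] + [0] * 6   # we know it is in one of two boxes
certain = [1.0] + [0] * 7            # we know exactly which box

for name, dist in [("no knowledge", no_knowledge), ("partial", partial), ("certain", certain)]:
    print(f"{name:>12}: {shannon_entropy(dist):.3f} bits")
```

Run as-is, it should report 3, 1 and 0 bits for the three cases: each halving of the possibilities removes one bit of uncertainty.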

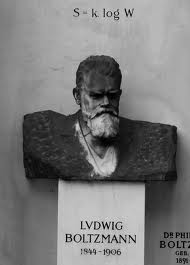

To lift this out of a hand-waving level of argument and into more rigorous science, we have to quantify the concepts of entropy and order so that we can measure or calculate them and establish the appropriate relationships. This has been done over many years, ever since Ludwig Boltzmann identified the key relationship between the entropy S and W, the number of microscopic arrangements (microstates) consistent with a system’s macroscopic state, written as S = k ln W (an equation that appears on his tombstone), where k is a universal constant known as the Boltzmann constant and ‘ln’ is the abbreviation for ‘natural logarithm’. A highly ordered system is compatible with few microstates, so W, and hence S, is small.
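
As a rough illustration of S = k ln W, the Python sketch below counts microstates for a hypothetical system of 100 coins, where a ‘macrostate’ is just the number of heads. All heads (highly ordered) has exactly one arrangement and zero entropy; a 50/50 mix has an astronomically larger W. The coin model is an assumption chosen for simplicity; the value of k is the exact SI definition.

```python
from math import comb, log

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)

def boltzmann_entropy(w):
    """S = k ln W for a macrostate with W equally likely microstates."""
    return K_B * log(w)

N = 100  # hypothetical system: 100 coins, each heads or tails
for heads in (100, 90, 50):
    w = comb(N, heads)  # number of microstates with exactly this many heads
    print(f"{heads:3d} heads: W = {w:.3e}, S/k = {log(w):.2f}, S = {boltzmann_entropy(w):.3e} J/K")
```

The absolute numbers in J/K are tiny because k is tiny; the point is the ordering: the more ordered the macrostate, the smaller W and S.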

The work on information theory came later and was pioneered by people like Harry Nyquist, R. V. L. Hartley, and Claude Shannon, who were all involved in telecommunications engineering where loss of information in transmission along telephone wires is an important consideration in designing such systems.

The key insight was that of physicist Rolf Landauer, who showed that the irreversible erasure of a single bit of information releases at least kT ln 2 of heat, where T is the absolute temperature, a result now called the Landauer Principle.
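
A quick back-of-the-envelope check of how small Landauer’s bound is. The sketch assumes a temperature of 300 K (roughly room temperature) and a decimal gigabyte of 8 × 10^9 bits; both are illustrative choices, and real hardware typically dissipates far more than this theoretical minimum.

```python
from math import log

K_B = 1.380649e-23  # Boltzmann constant, J/K

def landauer_limit(temperature_k):
    """Minimum heat (J) released when one bit is irreversibly erased at temperature T: k*T*ln 2."""
    return K_B * temperature_k * log(2)

per_bit = landauer_limit(300.0)  # assumed: roughly room temperature
print(f"per bit at 300 K: {per_bit:.3e} J")  # about 2.87e-21 J
print(f"erasing 1 GB (8e9 bits): {8e9 * per_bit:.3e} J")
```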

Shannon warned against the glib use of these terms to arrive at spurious conclusions by conflating scientific terms with their vernacular use (the kind of thing that Deepak Chopra does), saying in 1956:

“I personally believe that many of the concepts of information theory will prove useful in these other fields—and, indeed, some results are already quite promising—but the establishing of such applications is not a trivial matter of translating words to a new domain, but rather the slow tedious process of hypothesis and experimental verification.”

This idea of ‘losing’ information is an intriguing one. Suppose I write a grocery list on a piece of paper. If I then erase it, I have ‘lost’ that information. But how have I actually increased the entropy of the universe by doing so? Sean Carroll has a nice post where he discusses in a non-technical way how the irreversible loss of information (such as by erasing words on a piece of paper) leads to increased entropy.

The issue of when information is irreversibly lost becomes trickier when you include the human mind in the system. If I commit the grocery list to memory before erasing it, is the information irreversibly lost, since I can in principle recreate the list? As far as I know (and I am not certain of this since I haven’t done the detailed analysis), as long as the information remains in my mind, it is not irreversibly lost, but as soon as I forget it, it is. To calculate the change in entropy due to the act of forgetting, we would have to know the detailed structure of my brain while it contains the list and again after I have forgotten it. Since the brain with the list is presumably more ordered than the brain without it, forgetting results in a loss of order and an increase of entropy.

Of course, the acts of committing the list to memory and physically erasing the words on the paper also involve the expenditure of energy and have entropy implications. Erasing the words on the paper involves the irreversible conversion of muscle energy into heat, and that too results in an increase of entropy that is separate from the entropy changes due to information loss.

I’d have to see the math for it to make sense, but information and energy/entropy only seem to work as an analogy, not as a real physical phenomenon (except as you noted towards the end there).

More importantly, if you now have all the items from the list, and they are on the counter in front of you, arranged in some manner that would make it easy to re-create the list, what does that mean for the relationship between information and entropy? Consider that there are multiple ways of arranging the items easily now that you have them, so does that increase the information?

http://www.nature.com/news/the-unavoidable-cost-of-computation-revealed-1.10186

@ Crimson Clupeidae

It’s more than an analogy. The notion of “ease of remembering” of a given ordered set that you’re thinking of is, very roughly, thought of as its (Kolmogorov–Chaitin) complexity.

For example, a DNA sequence “AAAAAAAA” has lower complexity and thus came from a lower entropy source than “TGCTGAAG”, which has higher complexity and came from a higher entropy source.

Think of it this way: when you look at a source (say, an organism) generating those codes, if the code has more complexity, you have less information about what the next letter will be. This is (roughly and in sloppy English) how the entropy/information trade-off is reflected in everyday sets of objects.
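
A crude way to put numbers on the commenter’s two kinds of sequence is the per-symbol Shannon entropy of their letter frequencies, sketched below in Python. This is a swapped-in stand-in, not Kolmogorov–Chaitin complexity itself (which is uncomputable in general): it ignores ordering entirely, so a regular string like “ACGTACGT” would score the maximum 2 bits per letter despite being easy to describe. The mixed example string uses only the DNA letters A, C, G and T and is an illustrative choice.

```python
from collections import Counter
from math import log2

def symbol_entropy(seq):
    """Empirical per-symbol Shannon entropy (bits) from letter frequencies.
    Crude stand-in only: it ignores the order of letters, so it is NOT Kolmogorov complexity."""
    counts = Counter(seq)
    n = len(seq)
    return sum((c / n) * log2(n / c) for c in counts.values())

for s in ("AAAAAAAA", "TGCTGAAG"):
    print(s, round(symbol_entropy(s), 3))
```

The uniform string scores 0 bits per letter (you always know the next letter), while the mixed one scores about 1.9 bits, close to the 2-bit maximum for a four-letter alphabet.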

What if a universe was a single compressed mass of universe, with a checksum?

OK, here’s a question. If I have some information stored in a volatile RAM chip, and then I turn off the power to the chip, the bits can theoretically drift in any direction. Some zeros can go to ones, and some ones can go to zeros, and some bits can just happen to remain in their programmed state. So the information is lost, but where is the increased entropy? The chip now holds a random distribution of bits, which seems to me less ordered than what it held when I programmed it.

@1:

Landauer makes the connection, but only as a lower limit to the amount of energy associated with one bit of information. Carroll could have stressed this point.

If you have m bits, n of which are ordered in a particular way, the number of available states corresponding to the same ‘information’ (ordering of the n bits) is 2^(m-n). In other words, the remaining m-n bits can be either 0 or 1. So the entropy is

S = k·ln(2^(m-n)) = (m-n)·k·ln2

One of the ordered bits being ‘erased’ simply means we can no longer consider it to be in a particular state, so m-n → m-n+1, and the change in entropy is

ΔS = k·ln2

corresponding to a change in energy (of the m-bit system) defined by

ΔQ = T·ΔS = T·k·ln2

This is all independent of what the bits are, how they are flipped, etc. It’s just a theoretical lower bound.
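
The counting argument above can be checked by brute force for small m. This Python sketch enumerates every m-bit string, counts those consistent with the ‘known’ bits, and confirms that forgetting one bit doubles W, so ΔS = k ln 2. The bit positions and values, m = 10, and T = 300 K are hypothetical choices for illustration.

```python
from itertools import product
from math import log

K_B = 1.380649e-23  # Boltzmann constant, J/K

def count_compatible_states(m, known):
    """Count m-bit strings that agree with the known bits.
    `known` maps bit position -> required value (0 or 1)."""
    return sum(
        all(bits[i] == v for i, v in known.items())
        for bits in product((0, 1), repeat=m)
    )

m = 10
known = {0: 1, 1: 0, 2: 1}                    # n = 3 ordered bits (hypothetical values)
w_before = count_compatible_states(m, known)  # expected 2**(m - n) = 128
del known[2]                                  # "erase" one bit: its state is no longer known
w_after = count_compatible_states(m, known)   # expected 2**(m - n + 1) = 256

delta_s = K_B * log(w_after) - K_B * log(w_before)
print(w_before, w_after, delta_s, K_B * log(2))
T = 300.0  # assumed temperature, K
print("minimum heat:", T * delta_s, "J")
```

Brute force is only feasible for small m (2^m strings), but it makes the point that the result does not depend on which bits are fixed or what values they hold.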

@5: Less order means more entropy.

I maintain my office pretty much in a state of maximum entropy.

@#8

Oh, right! Shoot, I always get that backwards.

This is a common rejoinder to the creationist misunderstanding of thermodynamics. However, it’s wrong — because it implicitly concedes that evolution couldn’t happen in a closed system (meaning that no matter or energy enters or leaves the system). The same objection applies to similar claims about abiogenesis.

Both abiogenesis and evolution have occurred in a closed system — namely the universe as a whole, which is closed by definition. Whether a system is open or closed doesn’t really make the difference.

A better refutation is to point out that the Second Law of Thermodynamics is about total entropy. It’s perfectly legal to have a local reduction of entropy, as long as you pay for it with an increase somewhere else. That’s what happens when you fill the ice trays with water and pop them into the freezer. The water undergoes a local reduction of entropy and turns into ice, which is a more ordered state of matter. However, no entropy is destroyed; it’s just moved elsewhere. The compressor and the refrigerant and the cooling coils in the back of the fridge conspire to extract entropy from the freezer compartment and dump it into the rest of the kitchen, along with some extra entropy generated along the way. Total entropy is going up, even though local entropy is going down in the freezer compartment.
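
The refrigerator bookkeeping can be made quantitative with a short Python sketch. It assumes 1 kg of water freezing at 273.15 K, an approximate latent heat of fusion of 334 kJ/kg, and a kitchen at 295 K; these are round illustrative numbers, and the model treats both reservoirs as having fixed temperatures. The water’s entropy drops by about 1,223 J/K, but the heat dumped into the warmer kitchen (the extracted heat plus the compressor’s work) carries at least as much entropy out, so the total never goes down.

```python
# Entropy bookkeeping for freezing water in a fridge (rough, assumed numbers).
L_FUSION = 334e3  # J/kg, approximate latent heat of fusion of water
T_COLD = 273.15   # K, water freezing inside the freezer (idealised)
T_HOT = 295.0     # K, kitchen air (assumed)

def entropy_changes(mass_kg, work_j):
    """Return (dS_water, dS_kitchen, dS_total) in J/K."""
    q_cold = L_FUSION * mass_kg   # heat pulled out of the freezing water
    q_hot = q_cold + work_j       # energy conservation: heat dumped into the kitchen
    ds_water = -q_cold / T_COLD   # local decrease: water -> more ordered ice
    ds_kitchen = q_hot / T_HOT    # increase in the warmer surroundings
    return ds_water, ds_kitchen, ds_water + ds_kitchen

# Work at which the total change is exactly zero (the reversible limit).
w_min = L_FUSION * 1.0 * (T_HOT / T_COLD - 1)
for work in (w_min, 3 * w_min):
    ds_w, ds_k, total = entropy_changes(1.0, work)
    print(f"work {work / 1e3:6.1f} kJ: water {ds_w:8.1f} J/K, "
          f"kitchen {ds_k:+8.1f} J/K, total {total:+.3f} J/K")
```

At the reversible minimum work (about 27 kJ here) the total change is zero; any real compressor does more work than that, and the total comes out positive.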

Something similar happens in your back yard, where some high-entropy materials — air, water, and dirt — spontaneously self-assemble into a marvelously intricate low-entropy material that we call grass, while shedding entropy in the form of heat.

Something similar happened in abiogenesis. Some organic molecules self-assembled into a more ordered configuration, while shedding some entropy into the surrounding seawater.

If the creationist version of thermodynamics were correct, then not only could evolution not occur, but grass couldn’t grow, and water couldn’t freeze. It’s that wrong.

The contribution of the sun is to keep some energy gradients going for long enough that they have enough time to drive evolution. Imagine a rogue planet, or a microbiology lab, in deep space, far from any star. Abiogenesis and/or evolution could still happen, for a while, until the energy stores ran out. There may be good reasons why abiogenesis would be unlikely on a rogue planet, but they are not thermodynamic reasons. Mere nattering about the Second Law is not enough.

Actually, the thermodynamics of “forgetting”, or losing information, is more down-to-earth than one might think.

A pretty cool Popular Science article gives a layperson’s rundown:

http://www.popsci.com/science/article/2011-06/quantum-entanglement-could-cool-computers-while-they-compute

Basically, it describes work by physicists predicting that we’ll one day be able to use quantum technology to build computers that actually cool off as they delete data, offsetting some fraction of the heat generated by writing the data and ultimately reducing the energy cost of cooling computers as they churn through massive loads of data.

Hot air from Deepak Chopra also involves expenditure of energy and has entropy implications.

I would like to take issue with some of the things that you say; not because you are wrong but because you are right; however, I would not like to give creationists an inch of ground.

Putting it this way leads to the erroneous statement:

The late Dr Henry Morris, founder of the Institute for Creation Research, says*:

and:

So, the chief proponent of this spurious creationist argument is perfectly well aware of the ‘Earth is an open system’ argument:

Don’t call him ‘wilfully obtuse’; this is low cunning -- the statement is fundamentally true. If a Law is to be a Law, it must have some sort of general application (they don’t get more general than this one); a Law that applies nowhere hardly counts. If I may rewrite your original statement, still using the magic word ‘entropy’ that creationists love so much:

Thus the ‘isolated system’ (a purely theoretical concept) has now been put in its rightful place -- inside the Law which now has almost universal application.

Because the Second Law is so precisely obeyed and so general in application, it becomes almost a mathematical theorem in the sense that the slightest imprecision, or the slightest dismissal of some insignificant fact, by the waving of the hand and talking fast, results in the ‘irrefutable’ proof that 1=0 and hence that I am the Pope. Every quantity needs to be clearly defined before we can even begin.

Before we can discuss whether a rabbit or a brick has higher ‘entropy’, we need to note that entropy is an ‘extensive’ variable: a single number defines the entropy of an entire system, and that number scales with the size of the system. It has definite units (usually joules per kelvin). Two rabbits have nominally twice as much entropy as one rabbit, and two bricks have twice as much as one brick.
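
Extensivity falls straight out of S = k ln W: for two independent subsystems the microstate counts multiply, W = W1 × W2, so ln W = ln W1 + ln W2 and the entropies add. The Python sketch below checks this for two identical, hypothetical collections of two-state particles (50 particles, 20 in the ‘up’ state); ‘independent’ is the assumption that makes two rabbits ‘nominally’ twice one rabbit.

```python
from math import comb, isclose, log

def ln_w_two_state(n_particles, n_up):
    """ln W (i.e. S/k) for n_particles two-state particles with n_up in the 'up' state."""
    return log(comb(n_particles, n_up))

# Two identical, independent (hypothetical) subsystems.
s1 = ln_w_two_state(50, 20)                # S/k of one subsystem
w_combined = comb(50, 20) * comb(50, 20)   # independent subsystems: microstate counts multiply
s_combined = log(w_combined)               # S/k of the pair
print(s1, s_combined, isclose(s_combined, 2 * s1))
```

If the subsystems interacted, the combined count would no longer be a simple product, which is why the doubling is only ‘nominal’.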

Even before we can discuss the entropy of a system, we need to define what the system is and, in particular, the nature of its boundary. The boundary may be physical or it may just be a line (more properly a surface) that we may choose to draw to define our ‘system’. A system is ‘isolated’ if its boundary is fixed and is not crossed by matter, heat, or electromagnetic or gravitational fields.

On this basis, it is not possible to describe the Universe as an isolated system. One cannot even begin to draw such a boundary between the Universe and all that surrounds it.

Thermodynamics is currently best explained in terms of quantum mechanics. Earlier, Boltzmann, for example, explained it in terms of ‘atoms’, even when it was not clear what this meant or even whether atoms actually existed. Earlier still, Clausius, for example, made no attempt to consider what was ‘inside’ at all. In fact, ‘classical’ thermodynamics takes the same attitude even today.

It is important to have a sense of scale. The Universe is very big (in the sense of its expansion it could be said that it goes on for ever). It is also very far from equilibrium. The Sun and other stars are very hot, the darkness of space is very cold. Heat flows from the Sun to the Earth but heat flows from the Earth out into space. The temperature of the Earth remains on average reasonably constant. Thus the entropy of the Earth remains reasonably constant. This process has taken place for many years and will continue for many years to come.
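
To put a rough number on this steady state, the Python sketch below estimates how much entropy the Earth exports each second: sunlight arrives from a body at about 5,772 K and the same power leaves as infrared at about 255 K, so far more entropy leaves than arrives. The inputs (solar constant, albedo, effective temperatures) are approximate textbook values supplied as assumptions, and treating radiation as heat Q exchanged at a single temperature T (entropy Q/T) ignores the 4/3 factor that applies to thermal radiation, so read the result as an order of magnitude only.

```python
from math import pi

# Order-of-magnitude sketch with assumed round numbers. Radiation is treated as
# heat Q exchanged at a single temperature (entropy Q/T); the 4/3 factor for
# photon entropy is deliberately ignored.
SOLAR_CONSTANT = 1361.0  # W/m^2 at Earth's distance (approx.)
EARTH_RADIUS = 6.371e6   # m
ALBEDO = 0.3             # fraction of sunlight reflected (approx.)
T_SUN = 5772.0           # K, effective temperature of the Sun's surface
T_EARTH = 255.0          # K, Earth's effective radiating temperature (approx.)

# Power absorbed over Earth's cross-section; in steady state the same power is re-emitted.
absorbed = SOLAR_CONSTANT * pi * EARTH_RADIUS ** 2 * (1 - ALBEDO)
entropy_in = absorbed / T_SUN     # W/K carried in by hot sunlight
entropy_out = absorbed / T_EARTH  # W/K carried away by cold infrared
print(f"absorbed power: {absorbed:.2e} W")
print(f"net entropy export: {entropy_out - entropy_in:.2e} W/K")
```

Because the Earth’s own entropy stays roughly constant, this net export is the room within which local decreases (growing grass, evolving organisms) can happen without any conflict with the Second Law.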

On the other hand, the quantum world is very small. This is where entropy changes actually take place, not in shuffling cards or the spontaneous untidying of rooms. The Second Law is widely applicable; therefore, it must concern the properties of things that are universal, not things that are specific.

I am very wary of correlations between ‘entropy’ and ‘information’ or ‘disorder’. Again, very precise definitions are needed. If entropy is defined by a numeric quantity with appropriate units, then ‘information’ or ‘disorder’ must likewise be defined by a numeric quantity with appropriate units. ‘Malarkeys per minute’ will just not hack it. It is not even clear whether Maxwell’s demon should be considered to be inside or outside of the system. Looking at lists on paper is thinking on completely the wrong scale -- rubbing out the writing or just shutting your eyes makes as little difference as taking a 747 to pieces as far as entropy is concerned. It is easy to see that to destroy a bit of information in a system, it is necessary to provide enough random input to the system to overcome the potential barrier that was stopping that bit of information from being lost, and thus leave the bit in an indeterminate state. Imagine a random input to a disk head that is just enough to overcome the magnetism of the recording, leaving the magnetisation in an unknown state. We are dealing with real physical quantities here, but for the purpose of determining the smallest possible entropy increase we need to consider physical processes that are theoretically possible but are on too small a scale to be realised with current technology.

*http://www.icr.org/article/86/

alanuk @14: From Morris’ paper:

Apparently he didn’t believe in nucleosynthesis either*. I suppose it’s easier to shovel entropy bafflegab.

*Never mind the phase transitions since the early universe which resulted in the complicated, but much colder, mess we have today.

A few thoughts:

1) A decrease in information doesn’t always mean an increase in entropy. Consider this paragraph. If you scramble the order of its characters you will no longer have information but the entropy will be the same.

2) Consider the grocery list. Initially it did not exist. It was created by intelligence. So we must consider the relationship between entropy, order, information AND intelligence.

3) There are no closed systems, yet we readily observe entropy, and adding energy to a system doesn’t magically make it organized. If that were true, I could just leave my car out in the sun for a while to fix a flat tire, or maybe just add gas. Even better, I could constantly supply my body with food energy to cure disease and live forever.

Sometimes it seems like entropy is reversed and matter does become magically more organized. For example, if I scratch my arm, it will eventually reorganize itself and heal through a series of programmed chemical reactions. Energy is required for this to happen, but so is information that specifies the programmed chemical reactions. This information is in our DNA. Over time the information in our DNA is corrupted. Because of this we age and eventually die. Entropy overcomes information.

Similarly if you plant a seed in the ground and add water and sunlight, the surrounding molecules will organize into a plant through a series of programmed chemical reactions specified by the plant’s DNA.

But where does information come from? It comes from intelligence.