Back in the day, when I was writing papers, it was a grueling, demanding process. I’d spend hours in the darkroom, trying to develop perfect exposures of all the images, and that was after weeks to months with my eyes locked to the microscope. Even worse was the writing; in those days we’d go through the paper word by word, checking every line for typos. We knew that once we submitted it, the reviewers would shred it and the gimlet-eyed editors would scrutinize it carefully before permitting our work to be typeset in the precious pages of their holy journal. It was serious work.

Nowadays, you just tell the computer to write the paper for you and say, fuck it.

That’s the message I get from this paper, Bridging the gap: explainable ai for autism diagnosis and parental support with TabPFNMix and SHAP, which was published in one of the Nature Publishing Group’s lesser journals, Nature Scientific Reports, an open-access outlet. Now I can’t follow the technical details because it’s so far outside my field, but it does declare right there in the title that they have an AI tool for autism diagnosis that is explainable, which implies to me that it generates diagnoses that would be comprehensible to families, right? This claim is also emphasized in the abstract, before it descends into jargon.

Autism Spectrum Disorder (ASD) is a complex neurodevelopmental condition that affects a growing number of individuals worldwide. Despite extensive research, the underlying causes of ASD remain largely unknown, with genetic predisposition, parental history, and environmental influences identified as potential risk factors. Diagnosing ASD remains challenging due to its highly variable presentation and overlap with other neurodevelopmental disorders. Early and accurate diagnosis is crucial for timely intervention, which can significantly improve developmental outcomes and parental support. This work presents a novel artificial intelligence (AI) and explainable AI (XAI)-based framework to enhance ASD diagnosis and provide interpretable insights for medical professionals and caregivers…

Great. That sounds like a worthy goal. I’d support that.

Deep in the paper, it explains that…

Keyes et al. critically examined the ethical implications of AI in autism diagnosis, emphasizing the dangers of dehumanizing narratives and the lack of attention to discursive harms in conventional AI ethics. They argued that AI systems must be transparent and interpretable to avoid perpetuating harmful stereotypes and to build trust among clinicians and caregivers.

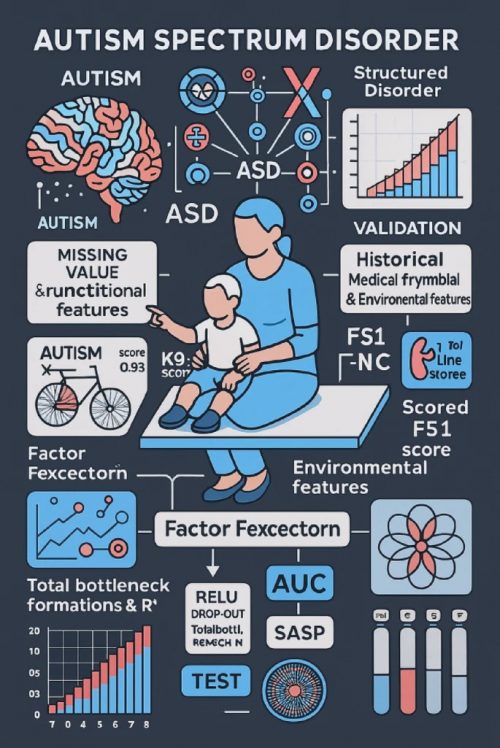

So why is this Figure 1, the overall summary of the paper?

You’d think someone, somewhere in the review pipeline, would have noticed that “runctitional,” “frymbiai,” and “Fexcectorn” aren’t even English words, that the charts are meaningless and unlabeled, that there is a multicolored brain floating at the top left, and that “AUTISM” is illustrated with a bicycle, for some reason? I can’t imagine handing this “explanatory” illustration to a caregiver and seeing the light of comprehension lighting up their eyes, which don’t exist in the faceless figure in the diagram, and perhaps she is more concerned with how her lower limbs have punched through the examining table.

This paper was presumably reviewed. The journal does have instructions for reviewers. There are rules about how reviewers can use AI tools.

Peer reviewers play a vital role in scientific publishing. Their expert evaluations and recommendations guide editors in their decisions and ensure that published research is valid, rigorous, and credible. Editors select peer reviewers primarily because of their in-depth knowledge of the subject matter or methods of the work they are asked to evaluate. This expertise is invaluable and irreplaceable. Peer reviewers are accountable for the accuracy and views expressed in their reports, and the peer review process operates on a principle of mutual trust between authors, reviewers and editors. Despite rapid progress, generative AI tools have considerable limitations: they can lack up-to-date knowledge and may produce nonsensical, biased or false information. Manuscripts may also include sensitive or proprietary information that should not be shared outside the peer review process. For these reasons we ask that, while Springer Nature explores providing our peer reviewers with access to safe AI tools, peer reviewers do not upload manuscripts into generative AI tools.

If any part of the evaluation of the claims made in the manuscript was in any way supported by an AI tool, we ask peer reviewers to declare the use of such tools transparently in the peer review report.

Clearly, those rules don’t apply to authors.

Also, unstated is the overall principle to be used by reviewers: just say, “aww, fuck it” and rubber-stamp your approval.

The first sentence is arguably wrong.

It’s thought that the actual occurrence of ASDs is about the same as it always was.

What has changed is the frequency of diagnosis. We are much more aware of Autism Spectrum Disorders and much more aware that it is a spectrum, from Asperger-like conditions to the more obvious autism-like conditions.

The second sentence is also wrong.

We’ve known the causes of ASDs for decades now.

It’s mostly genetic, with a high heritability of 80% or so, and polygenic. It is also set during fetal development.

So, OK, the first and second sentences are wrong.

This is not a promising start for a paper.

If Nature (the publisher) wants to retain any scrap of the prestige associated with Nature (the journal), I would expect this paper to be retracted immediately, various reforms to the review process announced, etc. Any sign of that?

As of now, this notice appears right before the paper:

This at least is more or less accurate.

Each case of an ASD seems to be different in detail.

“If you’ve seen one case of an ASD, you’ve seen one case of an ASD.”

This probably reflects the underlying polygenic causes of ASDs. It’s thought that somewhere between 200 and 1,000 genes are involved, all with small effects acting in various combinations.

I work with explainable AI professionally. I skimmed the paper, and I have some questions.

The study is based on a sample size of only 120 (!!). They claim that the sample is “wide” rather than deep, meaning there are a lot of attributes on each patient. But… what are they then? The SHAP analysis only shows a handful, and I didn’t see any discussion of the aggregation method.

One of the problems with SHAP is that in the general case it’s very slow. It’s O(N!) in the number of attributes, intractable for a “wide” dataset. There are more efficient ways to calculate SHAP for specific models, but I have no idea if there exists one for the particular neural network they’re using. It stood out to me that when discussing computational efficiency, they left SHAP out.

Yikes, what a figure… it looks like someone took some popular AI-related terms (F1-score, AUC, feature extraction, functional features, ReLU, Dropout, validation, test), put them through a meat grinder and then threw them at a wall to see where they would stick.

Also the x-axis on the figure bottom left.

I believe “explainable AI” means that, as with a human diagnostician, the AI can give reasons why it came up with that answer instead of some other, as opposed to “It’s because of a bunch of weights in the neural network, that were caused by the training data, but damned if anyone can figure out specifically how all that contributed to the current output, which means there’s no rational way to argue with it”.

I fexcectorn my frymbiai and now it’s not runctitional.

I learned in a previous AI figure thing that in some journals the figures don’t get reviewed.

Which blew my goddamned mind.

Correction to #5, that should be O(2^N) not O(N!).
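To make the O(2^N) figure concrete, here is a minimal sketch of an exact Shapley value computation. It is not the paper's method and not the `shap` library's API; `exact_shapley` and `toy_value` are hypothetical names, and the "model" is a made-up set function over three features. The point is only that an exact computation has to evaluate the model on every subset of features.

```python
from itertools import combinations
from math import factorial


def exact_shapley(value, n_features):
    """Exact Shapley values for a set function over features 0..n_features-1.

    `value` takes a frozenset of feature indices and returns a number.
    Returns the attributions and the number of distinct subsets evaluated.
    """
    players = range(n_features)
    phi = [0.0] * n_features
    evaluated = set()
    for i in players:
        others = [j for j in players if j != i]
        for size in range(len(others) + 1):
            # Shapley weight for coalitions of this size.
            weight = (
                factorial(size)
                * factorial(n_features - size - 1)
                / factorial(n_features)
            )
            for subset in combinations(others, size):
                s = frozenset(subset)
                evaluated.update((s, s | {i}))
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi, len(evaluated)


def toy_value(coalition):
    """Hypothetical toy model: additive effects plus one interaction."""
    base = {0: 1.0, 1: 2.0, 2: 3.0}
    total = sum(base[j] for j in coalition)
    if 0 in coalition and 1 in coalition:
        total += 4.0  # interaction term, split evenly between features 0 and 1
    return total


phi, n_subsets = exact_shapley(toy_value, 3)
print(phi, n_subsets)
```

With three features this touches all 2^3 subsets; each extra feature doubles that count, which is why practical tools rely on sampling or model-specific shortcuts rather than exact enumeration.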

stevewatson: generally, explainable systems are prediction systems for which we can explain why a certain output came out, and more generally, we can model the model — i.e. the model is a complicated approximation of reality, too complicated for humans. But we can make a simpler model that a human can understand, that approximates what the complicated model is going to do.

This is particularly useful for understanding the circumstances where the complicated model is going to fail. This is useful when using the model, so you know whether it’s trustworthy. It’s also useful when doing research to come up with promising avenues for improving the model.

The concept is particularly applied to neural networks because they seem completely opaque, but it applies to modelling generally.
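As a rough illustration of "modelling the model", the sketch below fits a straight line to a stand-in black box near one input and then checks how well the line tracks the black box nearby and far away. Everything here is an assumption for illustration: `black_box` is a made-up function, not any real diagnostic model, and a local linear fit is only one of many surrogate techniques.

```python
import math


def black_box(x):
    """Hypothetical stand-in for a complicated model."""
    return math.tanh(3.0 * x) + 0.1 * x


def fit_line(xs, ys):
    """Ordinary least squares for y ~ slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Sample the black box in a small window around the input being explained.
center = 0.0
xs = [center + 0.01 * k for k in range(-10, 11)]
ys = [black_box(x) for x in xs]
slope, intercept = fit_line(xs, ys)


def surrogate(x):
    """The simple, human-readable approximation."""
    return slope * x + intercept


near_error = abs(surrogate(0.05) - black_box(0.05))
far_error = abs(surrogate(2.0) - black_box(2.0))
print(slope, near_error, far_error)
```

The fitted slope is the "explanation" (how strongly the output responds to the input around that point), and the gap between the near and far errors shows that such an explanation is only trustworthy close to where it was fitted.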

What’s going on with the legs of the adult in that illustration?

There’s already an expression of concern on it. The reviewers and editors may have failed, but the community at large came through!

“Factor Fexcectorn?” That kinda sounds like a book one might find in a Medieval Church library, possibly next to “Malleus Maleficarum.” That phrase alone should have got the whole paper nixed; or at least held up pending LOTS of explanation from the “authors.”

So (#2 and 3) they’re waiting for further study before actually withdrawing it, because….what, are they checking the literature for other uses of the word “fexectorn?” Just in case?

I’m somewhat reminded of a fisherman I met long ago who caught the catfish swimming near a notoriously nasty neck of Lake Champlain we sometimes referred to as “Dioxin Bay.” He said it was fine to eat the fish if you cut the tumors out.

I’m sure the paper is fine and full of wisdom. There must be a pony under there! You might even call it runctitional!

Every time someone online tries to argue that this “AI” nonsense is useful and important, they should be spammed with this until they give up and go home. At best it’s a novelty toy, at worst a blight on society. I see no value in any of it.

More details: https://nobreakthroughs.substack.com/p/riding-the-autism-bicycle-to-retraction

Apparently the paper will be retracted very soon. But what happened to the review process and what changes they intend to make to it, if any, are still unclear.

Also, everyone is spelling “frynnnnblal” wrong.

According to ZeroGPT the introduction of the paper is entirely AI generated, and the bulk of the rest is also AI generated. I’m writing a development report right now for the synthesis of a drug. There will be zero AI generated text. This is just pathetic.

@7 stevewatson

@11 numerobis

Some years ago I found a paper that claimed that the weights in a feedback neural network are chaotic. Alter your training data in a seemingly trivial way and you get a completely different set of weights. So how can a system based on such a network be “explainable”? An “explanation” (the weights) should not be strongly dependent on simple changes to your data set. Otherwise it feels to me very much like overfitting a polynomial: you end up modelling the data and the noise.

Unfortunately I lost the paper and have not been able to find it again. Being only tangentially related to work, I did not copy it to my network backup drive, and did not bring a copy home.

Tempted to run a game of Paranoia and give the players one of these as their mission briefing.

If you are scientifically accurate and moral, that means you’re “autistic”.

Really healthy people go to parties with lots of alcohol and jump around for hours without any noteworthy intellectual activity, are “societally accepted” because they bully others into submission or violate their human rights and believe in the “one true religion” (whichever that may be).

It isn’t only the journal that shouldn’t be trusted. Authors who demonstrate such a complete disregard for the integrity of science should be blacklisted, have their degrees revoked and be kicked out of whatever institution they represent.

“fryrmbial” looks like a Charles Dodgson invented word, and the editorial process was whiffling and galumphing through this figure, which is why the paper must be retracted. One can only galumph or whiffle, not both, when editing ASD research…

cartomancer: “Every time someone in the town square tries to argue that this ‘electricity’ nonsense is useful and important, they should be shouted down until they give up and go home. At best it’s a parlor trick with glowing bulbs, at worst a dangerous blight on society. I see no value in any of it.”

A propeller-driven bicycle at that.

… in conclusion: generative AIs are not allowed to use any texts by Lewis Carroll in scientific analyses, but any texts by Charles Dodgson can override this rule as long as you use the latter in all citations.

’Twas brillig, and the slithy toves

Did gyre and gimble in the wabe:

All mimsy were the borogoves,

And the mome raths outgrabe.

– starting verse from Jabberwocky by Charles Dodgson

@robert79

The y axis isn’t much better.

Also, don’t trust anything from The Beaverton

‘Made-up quote’ in Canadian satire site The Beaverton fools Time Magazine

Laugh at them, but don’t trust them.

Also, obviously, don’t trust anything from Time magazine.

Bad attempted analogy, Reginald.

That site was explicitly a parody, whereas the featured paper’s publisher is not.

One expects untruths in a parody site, but not on “one of the Nature Publishing Group’s lesser journals, Nature Scientific Reports, an open-access outlet”.

To rephrase it in case that was obscure: One can actually trust anything from The Beaverton to be satire, but the point is that one cannot trust anything from “one of the Nature Publishing Group’s lesser journals, Nature Scientific Reports, an open-access outlet” due to this existence proof.

raven@1–

‘Heritability’ needs to be retired. It is not, despite the name, a measure of how genetically-determined a phenotype is.

(Feldman and Ramachandran 2018)

Siggy@5–

Re: small sample sizes and poor analysis. When I see a paper with such a lack of standards in the text and figures, I find myself wondering if one should even accept the data.

This autism stuff is exhausting. Anyone who has done any reading on it and has a family history of it can clearly see what constitutes an ‘Autism’ diagnosis in about 80-90% of cases these days. It is essentially an inherited, common, if minority, personality type that has become pathologized, leading to some 2-5% of people being considered ‘sick’ or ‘broken’. There are several things that shit me about this:

1) It distracts from severe cases of autism and dilutes attention from the people who have a serious disability and cannot function independently in society. Respectful research should focus here.

2) ‘Mild’ cases are only considered a problem because of a society that expects typicality or a society that still cheers for the football captain to beat the living shit out of the weirdo for being different. 80-90% of autistics are not broken, society is and has always been.

3) Autism in these mild cases is just neurodiversity, present in a minority of people, yet who have probably contributed far more than their fair share to art and science. Get the fuck off our backs.

4) Looking for a cause for Autism in these mild cases is like looking for a cause for why some children prefer nature while others like reading. It’s an interesting question, but if your motive is to prevent it, go fuck yourself.

5) We should focus our time diagnosing and curing malignant narcissism, also likely a genetic personality type that is too often encouraged rather than punished or corrected by society (depending on the local culture, I guess).

@12 submoron

“What’s going on with the legs of the adult in that illustration?”

That’s called a runctitional feature. I think everyone should start referring to weird AI stuff, like six-fingered hands with thumbs orthogonal to the palms, as runctitional features. It would be so very fexcectorn, and totalbottl to the max.

So (#2 and 3) they’re waiting for further study before actually withdrawing it, because….what, are they checking the literature for other uses of the word “fexectorn?” Just in case?

“I saw that word in some other paper before! I could swear I saw it! I just don’t remember where or when! No, really, I remember it from somewhere…”

I’ve noticed a lot of text in AI “art” that’s either gibberish, or not even actual letters in any alphabet. Which makes me wonder what the hell’s going on here — things like ChatGPT work with nothing but WORDS, so why can’t they come up with actual coherent words in images they generate?

Raging bee, I think the basis for your wonder is that you confuse LLMs with image generators: LLMs work with words, but image models treat text as visual patterns, so they produce gibberish as well as coherent words. Both AI, but not the same ‘AI’.

LLMs also treat words as patterns — they’re sequences of letters. The LLM no more understands the meaning of the patterns than does the image generator.

Yes, but visual patterns are raster-based on those generators (vector-based ones are in the pipeline) and that is just a grid of pixels to change. LLMs use high dimensional vector databases for relationships.

Neither understands anything, but they are not equivalent. That’s why the pseudotext.

I was directly addressing ‘things like ChatGPT work with nothing but WORDS, so why can’t they come up with actual coherent words in images they generate?’ and noting they are different architectures.

jimf @34: Like, totally, dude, you’re brilling with fire!

@Recursive Rabbit #21:

“The AI is your friend.”

A search on “autism bicycle” turns up a surprising (to me, anyway) number of sites, with pictures, on teaching your autistic child to ride a bike. Having that in the data may be the source of that particular bit of the graphic.

@PZ:

One can also argue that humans don’t know the meaning of the words either and then we get to the questions such as “is my blue the same as your blue?” which gets us close to some nonsensical discussions.

I think it’s way past the point where you could argue that LLMs don’t understand the meaning of the words, because I don’t think you can make a reasonable test for assessing understanding of the words which humans can pass and AI models fail. For example, LLMs “understand” the meaning of the words “big” and “small”. You can create a list of questions assessing this understanding and I am sure they will not score worse than humans.

To clarify some of this discussion, I asked Google AI: I quote verbatim >>>

The term “runctitional” is a nonsensical, likely AI-generated, misspelling or corruption of the word “functional”.

It gained recent attention in late November 2025 because it appeared as part of a “missing value” label in an AI-generated diagram within a research paper published in a Nature journal, Scientific Reports.

<<< So that’s that cleared up. Now to pin down frymblal, but using ‘m’ with three humps instead of two…[needs a new keyborad for that search]

@nomadiq #33

Could not agree more. Both that it feels like we’ve reached the point of pathologizing personality quirks, and that the ones we choose to focus on seem to be the ones that fail to produce good workers and not, say, the ones that produce societally dangerous self-interested assholes.

@lotharloo #42

I appreciate that you’ve already put scare quotes around “understand” but.. I mean… do they? Like, I, personally understand what “bigger than a breadbox” means on a real, physical level. I know about how many breadbox-sized objects I could carry, assuming they weren’t too heavy. I even have a rough idea of how heavy something like that should be, what weight ranges I’d consider surprising if I picked it up, and what that would feel like. I would say I understand this in a fairly concrete way that’s nigh universal to every human being with a concept of breadboxes and the size of them, and which an unembodied AI, at least, cannot possibly ever reach. LLMs have associations between tokenized words, and that’s it. They don’t have a context outside of language, they don’t have experiences, most don’t even have long-term memory. I don’t think artificial minds are necessarily impossible, but LLMs don’t got em. I think they’re ultimately a Chinese Room – rearranging associated concepts, translating language to a tangled knot of vectors and back again with great accuracy, but not doing anything that merits the term “understanding”

True.

The autism community has pointed this out over and over again for many years.

“We aren’t sick or disordered, we are neurodiverse.”

People on the ASD spectrum differ so much in every way that you can’t make any generalizations.

However, it is commonly noted that a lot of scientists could be considered to have Aspergers characteristics and I certainly know a few of those.

My old friend struggles with language and writing and…also has a Ph.D. in theoretical chemistry from one of the world’s better universities.

The other human conditions that are also frequently medicalized and pathologized are:

1. Intersexes.

They’ve come out against gender conforming medical care when it is involuntary.

2. Trans people.

The bigots are always happy to call them sick.

keinsignal, I reckon AI shows “understanding” because language itself encodes shared human experience and their training captures those encodings, and so the models reproduce them.

Metaphorically, language itself functions as the Chinese Room.

What looks like rearrangement is the embedded logic of discourse itself.

(cf. Wittgenstein’s language games)

[to make it clear, that is conjecture and I can in no way sustain it, it’s only how it seems to me]

@stevewatson

In my experience a lot of autistic people like bicycles, although my sample might be biased as I’m an autistic person who likes bicycles

(They’re quieter than cars, there are interesting stimming aspects to using them, and you can get very nerdy about them)

I see the infographic as AI slop, unless I’m told otherwise.

@dangerousbeans, Iris Murdoch wrote in her novel The Red and the Green: “The bicycle is the most civilized conveyance known to man. Other forms of transport grow daily more nightmarish. Only the bicycle remains pure in heart.”

Was Iris Murdoch autistic? Perhaps so: https://irismurdochsociety.org.uk/2024/04/29/autism/

Never got beyond training wheels. I lacked confidence in my ability to balance. Reduced motor function is one of my symptoms.

@keinsignal:

You can take the philosophical route to try and come up with some argument that somehow tries to argue about the “uniqueness” of humans and so on but I think it’s futile. Here is a question for you: can a blind person understand what the words “big” or “small” mean? I don’t think you want to seriously argue for that position.

lotharloo @53:

Since most blind people can touch things (like breadboxes, other people, trees, etc), I’ll go out on a limb and say “definitely”.

Old blog I used to go to called Skeptico had an article all about how you could experimentally prove photography to a blind person. A lot of people simply lack the imagination. And sometimes, I suspect there’s a level of ableism tangled up in there.

[OT]

Recursive Rabbit, it was actually about how psychics don’t have much of a success rate; the idea is that a blind person could be given a camera to repeatedly photograph the fingers of their other hand (1..5) in secret and then show the photograph to sighted people for a 100% success rate, in contrast to that of psychics.

Alas, not archived by the Wayback machine, original is gone: //skeptico.blogs.com/skeptico/2005/05/how_do_you_prov.html

@Rob Grigjanis:

Can a blind person understand colors?

lotharloo: So you’ve moved on from size (without responding) to colour. Does a sighted but colour-blind person understand colour? Does a person who hasn’t learned calculus understand Maxwell’s equations? We could go on forever.

[meta + OT]

@Rob Grigjanis:

I moved on because it doesn’t change my answer but it seems like you think there is something magic about sensory input so therefore this question is more direct and I wonder if it changes your answer. Can a blind person understand color?

[lotharloo, you have to add ‘from birth’ for your query, because otherwise, ‘yes’ is the answer]

Yes is always the answer. It doesn’t matter. There is nothing magical about sensory input. We can understand a lot of things that we cannot directly see, touch, smell, hear, or taste.

This is also why the “Chinese room” thought experiment is silly.

Nope. Thus my video, about people deaf from birth.

“This is also why the “Chinese room” thought experiment is silly.”

It’s no longer only a thought experiment; cf. LLMs.

That’s exactly what they do. The analogy’s appositeness is uncanny.

It truly is the Chinese Room, but instantiated.

It is sad to say but true. I know parents of a profoundly autistic son who might find that chart useful. They consider any coherence or clarity to be suspicious hallmarks of the science they reject–in this specially pleaded case–because, in their mind, it has been so wrong for so long. That they’re the wrong ones, or at least that they’re part of a mystery, is not comprehensible.

@John Morales:

The point of the thought experiment is to argue that AI can’t really understand anything and that humans are magic (the actual argument is a bit coy to argue this latter point but it’s basically what they argue).

‘The point of the thought experiment is to argue that AI can’t really understand anything and that humans are magic’

Not at all. Nothing there about humans being magic. That’s something you just introduced.

Again: LLMs follow rules without comprehension, generate outputs indistinguishable from genuine language, yet operate without intentionality. That perfectly matches the operative part of the system.

Wikipedia (https://en.wikipedia.org/wiki/Chinese_room#Chinese_room_thought_experiment): Searle argues that, without “understanding” (or “intentionality”), we cannot describe what the machine is doing as “thinking” and, since it does not think, it does not have a “mind” in the normal sense of the word. Therefore, he concludes that the strong AI hypothesis is false: a computer running a program that simulates a mind would not have a mind in the same sense that human beings have a mind.

Me, I reckon that conclusion seems hasty.

I would rephrase ‘would not have’ as ‘need not have’ to avoid such unwarranted certitude.

John Morales @68,

If their outputs were completely indistinguishable from genuine language, I don’t think we could conclude that they operate without intentionality. It’s the occasional hallucinations and whatnot that they produce which cause them to fail sufficiently lengthy Turing tests.

Likewise, the Chinese Room scenario assumes a system that appears to understand Chinese perfectly–which implies that you could ask it all sorts of questions about its desires and emotions and values and celebrity crushes and personal life and so forth, and it would return exactly the same answers as a particular real or hypothetical Chinese-speaking person. That’s far beyond what LLM’s can currently do, and if a system could do that I’d be happy to say that it possessed intentionality.

The entire point of the thought experiment is to argue that machines can never understand or have “consciousness” because they follow their programming blindly, and that, just like in the thought experiment, no amount of programming or data can overcome the philosophical hurdle of understanding, i.e., humans are magic.

Um, those hallucinations and what not are genuine language.

That they are wrong or fabricated doesn’t mean they are not language, or well-done at that.

For example, they’d not fuck up the plural vs. the possessive, such as ‘that LLM’s can currently do’.

—

lotharloo, again: Wikipedia (https://en.wikipedia.org/wiki/Chinese_room#Chinese_room_thought_experiment): Searle argues that, without “understanding” (or “intentionality”), we cannot describe what the machine is doing as “thinking” and, since it does not think, it does not have a “mind” in the normal sense of the word. Therefore, he concludes that the strong AI hypothesis is false: a computer running a program that simulates a mind would not have a mind in the same sense that human beings have a mind.

There is no philosophical hurdle. He has an unstated premise that a mind cannot possibly be like an LLM, but it is a fact that he does not know what a mind actually is.

More to the point, at what point (heh) does a good enough simulation fool a perceiver?

Put it this way; if the engine improves so one really can’t tell, does that still mean it’s only an imitation of a mind, or is it actually (at least functionally) a mind?

Such are the issues.

Obs, we’re not there yet, but LLMs ain’t Elizas, either.

@John Morales:

Again, he argues that humans have “intentionality” and “mind”. Why? How? He does not explain so I fill it in because he thinks humans are magic.

You can read the actual paper. This is an excerpt from it.

“5. The Other Minds Reply (Yale). “How do you know that other people understand Chinese or anything else? Only by their behavior. Now the computer can pass the behavioral tests as well as they can (in principle), so if you are going to attribute cognition to other people you must in principle also attribute it to computers.”

This objection really is only worth a short reply. The problem in this discussion is not about how I know that other people have cognitive states, but rather what it is that I am attributing to them when I attribute cognitive states to them. The thrust of the argument is that it couldn’t be just computational processes and their output because the computational processes and their output can exist without the cognitive state. It is no answer to this argument to feign anesthesia. In “cognitive sciences” one presupposes the reality and knowability of the mental in the same way that in physical sciences one has to presuppose the reality and knowability of physical objects.”

In the real world, functional definitions rather than essences work best.

John Morales @71 & 74,

But surely the criterion here should be “genuine language that would be appropriate from a human respondent given previous user input,” no? Otherwise you could just write a four-line program that returned “I’m sorry, but I don’t feel like talking right now!” to any possible input, and that would also be genuine and well-done language. But I don’t think most of us would be arguing over whether that program possessed intentionality.

Of course I immediately ran off to Google to see if I could find any vindication, but the AI coldly confirmed, “You should not use an apostrophe to make most acronyms plural; instead, add an ‘-s’.” So clearly it does possess intentionality and is consciously colluding with you to make me look bad!

Still, my error itself supports my point. It’s not grammatical accuracy that determines whether an AI could pass a Turing test, because real people fuck up grammar all the time. The important thing for producing an impression of intentionality and/or understanding is the long-term flow of the conversation, e.g. my desperate attempt to defend my error after you pointed it out.

So a serious Chinese Room candidate could still make the occasional typo, but it would need to be able to respond in a psychologically realistic fashion to, say, the user making fun of it for that typo. And asking why it made that typo in the first place. And saying, “I know where you live and I sent leaflets mocking your typo to all your neighbors; whatcha gonna do about it?” Hell, at some point the Chinese Room might need to make more mistakes to simulate the effects of stress, exhaustion and humiliation from user harassment. Otherwise, that appearance of “perfect understanding” will fail at some point, because it won’t respond in exactly the way that a human would respond if they understood Chinese.

I agree entirely, but as you also said, LLMs aren’t functionally there yet. If they ever are, I will consider them (along with a successful Chinese Room) to possess intentionality and understanding.

lotharloo @73,

…yeah, whenever someone starts claiming that a point is “only worth a short reply” and invoking “presupposition,” I figure they’re already losing the argument. That’s basically equivalent to, “I don’t actually know how to refute your objection, so Imma just make it axiomatic that I’m right.” Which is why Christian apologetics resorts to that move so often.

The obvious response is, if we’re allowed to presuppose that other people possess mental properties, then why can’t we presuppose this of the Chinese Room as well? And as you say, the implied answer is pretty much “well, humans are magic.”

John Morales, #25

Electricity had many very obvious and important uses from the get-go. It could run motors. It could provide cheap light. It could be used for sending messages long distances. Electricity was obviously a powerful and important technology.

A.I. produces what? Poor facsimiles of art, writing and video. It’s a high-speed crap machine. The world is full of mediocre art, writing and such, it doesn’t need a faster pipeline to spew out more mediocrity and worse. When it produces anything genuinely impressive, that isn’t just mashed-together stolen content, I may venture to reassess. At present I can’t see how it ever will.

cartomancer, here’s an old quip, misogyny-laden though it is:

(https://www.bartleby.com/lit-hub/familiar-quotations/4043-samuel-johnson-1709-1784-john-bartlett)

—

Prax, an excellent response. I think what you are looking for is true sapience, that is, a GAI.

As for lotharloo’s claim, well…

[I learned the parallel form technique from my sifu, truth machine]

5. The Other Flight Reply (Aviation). “How do you know that birds really fly or anything else? Only by their airborne behavior. Now the airplane can sustain lift and traverse the skies as well as they can (in principle), so if you are going to attribute flight to birds you must in principle also attribute it to airplanes.”

…

In theology one presupposes the reality and knowability of the spiritual in the same way that in physical sciences one has to presuppose the reality and knowability of physical objects.

PZ@37:

All these models are predicting tokens.

For an LLM, that token is, roughly speaking, a word; it then tries to build up a bunch of tokens to form a text that encodes a message. The meaning might be completely garbled, or worse it might be a very cogent-seeming hallucination, but it’ll be words.

For an image model that token is the RGB values of a pixel, and it’s trying to create an image that illustrates the message. So you get hallucinations expressed in image form. The meaning might be completely garbled but it’ll definitely be an image.

(Read “trying” in a purely mechanistic light of course.)
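A toy version of "predicting tokens" can be sketched in a few lines. This is a bigram counter with greedy decoding, which is vastly simpler than any real LLM or image model; the corpus and the names `train_bigrams` and `generate` are made up for illustration. It does show the mechanistic point: the output is always built from known tokens, whether or not it means anything.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which token follows which in a whitespace-tokenized corpus."""
    tokens = text.split()
    following = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1
    return following


def generate(following, start, length):
    """Greedy decoding: always emit the most frequent next token."""
    out = [start]
    for _ in range(length - 1):
        candidates = following.get(out[-1])
        if not candidates:
            break  # no known continuation for this token
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical miniature corpus.
corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
sample = generate(model, "the", 6)
print(sample)
```

Nothing in the loop checks whether the sequence is sensible; it only follows the counts, so every emitted token comes from the corpus even when the sentence as a whole goes nowhere.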