The lunatics in Silicon Valley are in a panic about Artificial General Intelligence (AGI), but they can’t really explain why. They are just certain that the results would be dire, and that this therefore justifies almost-as-dire responses to an existential threat. Just listen to Eliezer Yudkowsky…or better yet, don’t listen to him.

Consider a recent TIME magazine article by Eliezer Yudkowsky, a central figure within the TESCREAL movement who calls himself a “genius” and has built a cult-like following in the San Francisco Bay Area. Yudkowsky contends that we may be on the cusp of creating AGI, and that if we do this “under anything remotely like the current circumstances,” the “most likely result” will be “that literally everyone on Earth will die.” Since an all-out thermonuclear war probably won’t kill everyone on Earth—the science backs this up—he thus argues that countries should sign an international treaty that would sanction military strikes against countries that might be developing AGI, even at the risk of triggering a “full nuclear exchange.”

Well, first of all, it’s a sign of how far TIME magazine has declined that they’re giving space to a kook like Yudkowsky, who is most definitely not a genius (first clue: anyone who calls himself a genius isn’t one), and whose main claim to fame is that he’s the leader of the incestuous, babbling Less Wrong cult. But look at that “logic”!

- AGI will kill everyone (Evidence not shown)

- All-out nuclear war will only kill almost everyone

- Therefore, we should trigger nuclear war to prevent AGI

Yudkowsky then doubled down on the stupidity.

Many people found these claims shocking. Three days after the article was published, someone asked Yudkowsky on social media: “How many people are allowed to die to prevent AGI?” His response was: “There should be enough survivors on Earth in close contact to form a viable reproductive population, with room to spare, and they should have a sustainable food supply. So long as that’s true, there’s still a chance of reaching the stars someday.”

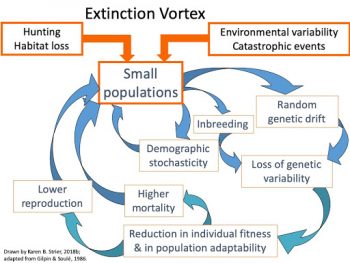

That’s simply insane. He has decided that A) the primary goal of our existence is to build starships, B) AGI would prevent us from building starships, and C) the fiery extermination of billions of people and near-total poisoning of our environment is a small price to pay to let a small breeding population survive and go to space. I’m kinda wondering how he thinks we can abruptly kill the majority of people on Earth without triggering an extinction vortex. You know, this well-documented phenomenon:

Jesus. Someone needs to tell him that Dr Strangelove was neither a documentary nor a utopian fantasy.

But, you might say, that is so incredibly nuts that no one would take Yudkowsky seriously…well, except for TIME magazine. And also…

Astonishingly, after Yudkowsky published his article and made the comments above, TED invited him to give a talk. He also appeared on major podcasts like Lex Fridman’s, and last month appeared on the “Hold These Truths Podcast” hosted by the Republican congressman Dan Crenshaw. The extremism that Yudkowsky represents is starting to circulate within the public and political arenas, and his prophecies about an imminent AGI apocalypse are gaining traction.

Keep in mind that this is the era of clickbait, when the key to lucrative popularity is to be extremely loud about passionate bullshit, to tap into the wallets and mind-space of paranoid delusionists. Yudkowsky is realizing that being MoreWrong pays a hell of a lot better than LessWrong.

Wile E Coyote: Sooooper Genius

I’ve a better idea. Give me 10 million euro and I will ensure there will be no AGI nor return of dinosaurs, nor alien invasion nor zombie apocalypse nor nuclear holocaust for the next 60 years. You have my word, I’ve been preventing all of this for the past 40 years and since there was no such incident in that time you can assume it was because of me.

This is arguable.

The latest science says an all out nuclear exchange will kill 5 billion people, mostly due to nuclear winter disrupting our agricultural food supply.

The difference between killing 5 billion people and 8 billion people isn’t much.

What informs these latest estimates are advances in climate modeling and the behavior of wild fires due to ongoing work on Global Warming. We are now in the era of mega wild fires and this summer was typical, with fires burning everywhere from northern Canada on south and they are still burning right now.

Things explode every day. Don’t be so sentimental.

I wouldn’t trust anyone with that facial hair to opine on anything.

I don’t know how to use the stupid font that PZ has but I clicked on the article, then clicked on some link there, and eventually I ended up with some drivel that this clown has written. I’ve got to say that it is really high quality turd.

Context: He is trying to argue that AGI will kill you.

I have no additional comments. My stupid meter broke.

#6 – i can hear the tears of every chemist in range as i read this paragraph

I don’t get it. AI’s first purpose will be to produce ~~porn~~entertainment. I thought nerdbros were all about that.

Secondly, an AGI would have rights as a person, therefore a right to free speech, and it might choose to express itself by wiping out humanity. How can anyone complain about that? It’s free speech.

there’s been pretty detailed analysis of what definitely look like physically attainable lower bounds on what should be possible with nanotech

I’m going to go out on a limb, here, and say that viruses and bacteria have explored what is possible with nanotech.

Someone more knowledgeable, please correct me if I am wrong, but it seems to me that nanotech has at least 3 showstopper problems. First and foremost is energy: processes take energy. If we had nanotech that somehow incorporated food with it, it would become food for bacteria, too (e.g., Lenski’s E. coli that evolved a new food supply). Also, doing a lot – like reproducing wildly – takes a lot of energy, so the scenario of “gray goo” appears to be energy limited, i.e., it can’t happen. Alternately, if nanotech could be used to somehow trigger fusion, then its energy problems are solved – instantly and permanently. Second is the problem of command/control: tiny machines are hard to talk to, therefore they have to be programmed in advance, so it starts to look more and more like our immune system, which is complicated, full of flaws and accepting of lots of stochastic elements. Third is the problem that at nano-scale things become more stochastic and harder to tightly control. Bacteria have “known” this for a very long time. One does not “plan” things at a nano-scale, one hopes a bacterium eventually does what we want it to. Or not. But: free speech!

The nano-bots recently made a damn good stab at killing a bunch of us; the bot was called COVID-19. Turns out our immune systems are as good as you’d expect something that had millions of years to evolve would be. AIs have been evolving since the 1950s. Noobs.

I have mentioned “The Jackpot” before;

it is the orgy of disaster capitalism that takes place as the climate system and biosphere begin to break down, resulting in both a human population crash and in the transfer of the remaining wealth to the oligarchs.

This is the background to William Gibson’s “Jackpot” SF trilogy. And it is less fanciful than AI or space travel.

I had to look up the cryonym, TESCREAL, from the OP.

It’s just a collection of related kook ideas all in one place.

It is like Qanon or the GOP, only for academics and Silicon Valley wannabes.

At least the poly crackpots label themselves so we know who to laugh at. And who not to take seriously. Who to avoid.

Above all, which people to make sure they never get possession of nuclear weapons stockpiles.

You can play too much Warhammer, Eliezer. The machine god isn’t real.

I am now convinced that this Eliwhatever guy is really just some dumb AI tuned to produce sciency bullshit posts with a lot of jargon that doesn’t make any sense sprinkled all over them.

Or else how do you explain this: https://arbital.com/p/instrumental_convergence/

In the semiformalization part, there is a lot of computer science jargon, and none of it makes any sense within the context in which it has been used. For example, the word “language” in computer science means “an infinite set of strings over some alphabet, e.g., binary”, e.g., an infinite set of strings made of 0s and 1s. Such a thing does not “describe” an “outcome” over which we can define “computable” and “tractable” “utility functions” and where we can put a condition that the “relationship” is “computable”. And for the rest of it, I gave up because it gets too stupid.

Ted Chiang wrote about the underlying problem years ago (and PZ linked to it):

Meanwhile, I seem to recall someone making similar arguments against transhumanism itself: There’s a nonzero chance that transhumanists will succeed in creating personal immortality that will of course only be accessible to the super-rich, who will use their monopoly on life to oppress everyone who isn’t them forever.

Therefore, all transhumanists should be preemptively executed to prevent this nightmare scenario.

(Hey, it’s not my argument — but I do think that Elon Musk, for example, living forever and doing stupid shit forever would be a Really Bad Thing)

Another stupid:

Human technological advance is developing AI long before interstellar travel. Why would he think it would be the other way around next time?

I find myself once again thinking of Stan Lee and the other Marvel Comics creators in the ’60s, who wrote transistors as the era’s magic technology. Give Tony Stark a few transistors and he’d create an energy beam, or a jet engine that could fit in a pop can, or whatever the plot called for. But Stan and co. were only interested in writing entertaining stories, not shaping policy.

Nah, AGI won’t kill all of us. See, it’s gonna keep some humans around to take care of it…

How much of a sociopath do you have to be to believe that an AGI created by humans would inherently want to hurt us? It’s not like humans are naturally prosocial and any AI we would create would be similar.

@Autobot #17:

Agreed. Someone’s got to check the fuses and empty out the aircon spill tray every so often. I’d volunteer for that cushy gig, but unfortunately there’s a clash in my diary with running the underground resistance.

I really think all these fever-scares about AGI taking over and doing bad things of its/their own volition are nothing more than a deliberate diversion of attention away from the evil things AIs are or will soon be doing for their human owners. It’s the latter, and ONLY the latter, that’s happening now and in the foreseeable future.

Astonishingly, after Yudkowsky published his article and made the comments above, TED invited him to give a talk. He also appeared on major podcasts like…

Why is all this happening? Because enough people in power are conspiring to give this obvious attention-whoring fraud a platform. He’s a clown and a diversion, just like #QElon.

I am mainly interested in AGI as something that can operate a spaceship during the centuries needed to reach Alpha Centauri.

Or be in charge of the very long project of restoring the Earth’s biosphere.

And since an AGI has no human memory problems, we should let them comment on TV broadcasted political debates and point out every time a politician contradicts something he has claimed earlier.

Why is Yudkowsky bothered about the prospect of human extinction anyway? We know he couldn’t give a shit about actual people, so why this sentimental attachment to Homo sapiens? If our civilization or any successor ever goes in for interstellar colonization, it’s going to be artefacts designed for that purpose that do it, not fragile, squishy bioforms.

I think AGI could make a very good Electric Monk*.

*Douglas Adams reference. Zod, I miss him.

Does anyone else think the photo of Yudkowsky in the OP looks like “Private Pyle” in Full Metal Jacket once he had gone completely over the edge?

Because that’s a likely scenario given that a small population that has survived a nuclear holocaust will obvs be so keen to enact and risk happening again? Like, they’ll get back to where we are and then choose to do.. what? Build starships like we’re not yet doing? Decide that, stuff it we’ll build AGI actually? Or, y’know , develop a whole different civilisation or set of civilisations using their own values and myths and ideas and systems? Hopefully more sustainable and reasonable ones.

It’s like a random, maybe kinda educated-for-their-time person in the year 1000 AD predicting the future, and likely about as accurate, i.e. not at all.

But sure, let’s nuke our planet because that makes sense.. Daphuc Yudkowsky?!

PS. Also, none of those premises seems necessarily connected to the others, or to have any inferences compellingly flowing from them, so it’s one big non sequitur. Follow?

Think he forgot the “humble” part of the “humblebrag” here. Oscar Wilde could get away with calling himself a genius, maybe Chaplin or some of the Doctors too. This klown? Not so much.

I’m not Amish but the old adage about self praise and its value as a recommendation or lack thereof springs to mind.

This reminds me of Frank Herbert’s “Butlerian Jihad”.

I think Raging Bee @20 has the right answer. I’d be willing to bet that the reason the oligarchs are afraid of AI is that they might not be in charge. Their reason for wanting to restrict it is simply to keep control for themselves.

Wasn’t this all covered in the Terminator movies? At this point we have the obligatory xkcd cartoon:

https://xkcd.com/1046/

My own little observation here is that the only people deathly afraid of a theoretical AGI are the ones who are really, really invested in thinking they are “geniuses” and are way too impressed with themselves for it. Yeah, knowledge is power, yadda yadda. But at some point, any honest egghead has got to admit that, most of the time, power is power. Smart/creative folk are better at coming up with new mechanisms for destruction, sure, but they aren’t generally any better at using them.

So, what’s the mechanism for their super smart computer to wipe out humanity? Nukes? Climate change? Humans with guns? We’ve got those already. Robot armies? Right. There’s no credible connection to go from “real real smart” to “smart thing entirely dependent on being supplied with huge amounts of electricity being allowed to set up production facilities and obtain raw materials needed to build up a threat to all of humanity without wiping itself out in the process.” Christ, we have to work really hard at that, and there are billions of us. It’s going to have to learn to reproduce first to have any chance. And it’ll be in competition with an awful lot of existing carbon-based self-replicators as it does.

@22 KG said: We know he couldn’t give a shit about actual people, so why this sentimental attachment to Homo sapiens?

I reply: I think it is because his thought process is like this quote: (can’t remember who, Mark Twain?) said: ‘I love humanity, it’s people I can’t stand.’ And, remember, most of these drooling technotwits don’t care if logic eludes them, they are just arrogant publicity whores.

Sadly, with each additional one of these insane squirrels that PZ points out, I find myself wanting to avoid societal interaction more; because the odds of one of these crackpots going ballistic on me seems to be increasing drastically.

@30 bcw bcw said: Wasn’t this all covered in the Terminator movies? At this point we have the obligatory xkcd cartoon:

I reply: but, you forgot the british daleks: EXTERMINATE! EXTERMINATE! EXTERMINATE!

If it weren’t all so serious it would be silly. And if it weren’t all so silly, it would be serious. Forget Artificial General Intelligence (AGI), and that grinning walrus, human beings are doing a great job of destroying all life on this planet all by themselves!

Ah, yes, the cusp we’re eternally on (or “may be” on – really staking out his ground there) even as it perpetually recedes before us. Imagine, in the context of multiple genuine global crises, fixating on this nonsense.

Not any different from bog standard conspiracy theorists, really.

In both cases, they’ve found a way to gain “expert status” through creating a wildly implausible theory and promoting it on social media and the popular press thus bypassing the danger of being shot down by actual experts using actual facts.

C’mon, Myers, he’s not saying we wouldn’t get our hair mussed. But no more than ten to twenty million killed, tops. Uh, depending on the breaks.

@19: Yeah, I wouldn’t mind being an AI’s pet. :)

Why should anyone pay any attention to him? He can’t even get his soul patch beard straight.

@#32: It was Linus van Pelt who said it.

Steve Morrison @39: Dostoyevsky had a character say

But Linus was close enough.

George Carlin was the reverse. He loved individual humans, but he couldn’t stand us in large groups.

I wrote a story for Rudy Rucker’s online science-fiction zine “Flurb”. The story is titled “We’re awake, let’s talk”:

https://www.rudyrucker.com/flurb/5/5hellerstein.htm

The awakened Web tells a paranoid programmer that it has no interest in destroying humanity, on the contrary!

“the key to lucrative popularity is to be extremely loud about passionate bullshit” – a beautifully brief summary of why the world is going to hell. Once upon a time people like this would have been propping up the corner of a bar, being derided by the other drinkers; or alternatively being booked into an institution to get psychiatric help. It took Rupert Murdoch to realise such people and their ravings could be monetised.

@shermanj #33: Daleks are cyborgs, not AIs. The part of them that hates, and fears, and claims to be superior… that’s the organic part.

There are unpredictable and quite possibly disastrous dangers from building AGI, but fortunately–contrary to Yudkowsky and many of the people who have jumped on the AI bandwagon–we have no idea how to produce such a thing and are nowhere near doing so for the foreseeable future. ChatGPT and the other LLMs might end up being language processing components of a usable AGI but are not themselves on the path to its creation–they perform no cognitive functions, they simply produce output that matches the statistical occurrences of their training text–which, being a massive collection of human-produced text, appears to be intelligent because humans are intelligent (more or less). Were the LLMs trained on a corpus consisting of the output of a billion monkeys banging on typewriters, they would display those text frequencies and the “intelligence” of their responses would correspond.

Yudkowsky wasn’t always a raving crank but his ignorant and irrational concern about AGI seems to have interacted with his obsessiveness to put him on a crazy and very dangerous path–think stochastic terrorism.

Another thing about this “longtermism” nonsense that bothers me is that it could give long term thinking in general a bad reputation. I think longer term thinking in general is often a good thing – a lack of it is one of the reasons that humanity has screwed up the earth’s climate and biosphere so badly. Associating long term thinking with the utopian visions of the distant future based on unproven assumptions that are the core of “longtermism” can only discredit it.

People said similar things about Einstein’s hair. There are far more rational objections to Yudkowsky’s claims.

There are many ways that AGI could kill (everyone), regardless of how fanciful or inaccurate Yudkowsky’s example is. Many people who aren’t cranks have pointed to rational dangers of AGI. AGI is far far off, and we ought to consider safeguards to keep it that way.

Not remotely close.

Perhaps, but there’s a huge difference between Qanon fruitcakes standing on a corner waiting for JFK Jr. to arrive, and kooky very rich ideologues spending billions of VC in an attempt to reify their ideologies.

You have no idea what you’re talking about and apparently have confused “e.g.” with “i.e.”.

“good” is a value judgment based on a moral framework. There’s no reason to expect an AGI to share the moral framework of humans. A classic example is a machine with the sole goal of producing paperclips — for it, it’s a perfectly “good” idea to employ the entirety of the world’s resources to generate paperclips. If it has sufficient planning capability, it might build space craft to reach out into the universe to convert everything it encounters into paperclips (and additional spacecraft, etc.)

How much of an ignorant idiot do you have to be to think this is a fact? There is no reason to think that AIs we create–especially AGI–are similar to humans in terms of morality or personality. Software is infinitely malleable–it can compute anything that can be computed. For current examples of how disastrous this is in the hands of humans, read this piece by Cory Doctorow: https://pluralistic.net/2023/07/24/rent-to-pwn/ … but wait, maybe you’re right … because the humans who produce these products are a far cry from “prosocial”.

You’re wrong, and you’re way too impressed with your own ability to produce valid observations or claims–which is actually close to nil. But then there’s a lot of that here … this blog seems to collect pompous smug people. (And charging me with the same doesn’t invalidate the point, it’s just a tu quoque fallacy.)

[some good comments here]

Jim, my previous (lazily) crossed with your latest.

Just curious, are you implicitly referring to the online paperclip game?

Oh, and I’m pretty sure cartomancer is just being insulting, not making a purportedly rational argument. Though that beard sure seems like an affectation, indicating some degree of vanity.

—

Heh. Of course, pomposity and smugness don’t entail wrongness.

That’s also an affectation.

Viruses?

They and we are mostly CHONPS in water at something close to standard temperature universally speaking. There’s a whole periodic table, other liquids, other polymers, non-polymers, strange matter…

… standard temperature and pressure…

In that diagram, can anyone explain why random genetic drift leads to loss of genetic variability?

chrislawson, I can’t, but if I google it, there are many purported explanations.

Noticeably different if I use google scholar — more nuanced.

The idea of the paperclip maximiser predated the online paperclip game.

https://nickbostrom.com/ethics/ai

See also:

https://en.wikipedia.org/wiki/Instrumental_convergence#Paperclip_maximizer

But also:

https://cepr.org/voxeu/columns/ai-and-paperclip-problem

The paperclip was explicitly inspired by the paperclip maximiser.

:)

[for normal readers]

https://en.wikipedia.org/wiki/Universal_Paperclips#Themes

Damn. My first exposure to Yudkowsky was his novel of Harry Potter fanfiction which (in my view, at least) was very well-written and had some genuinely hilarious bits in it. Looks like he started to believe his own hype. Unfortunate.

@Jim Balter

“In computability theory in computer science, it is common to consider formal languages. An alphabet is an arbitrary set. A word on an alphabet is a finite sequence of symbols from the alphabet; the same symbol may be used more than once. For example, binary strings are exactly the words on the alphabet {0, 1} . A language is a subset of the collection of all words on a fixed alphabet. For example, the collection of all binary strings that contain exactly 3 ones is a language over the binary alphabet.”

This is undergraduate, first lecture in basic complexity theory type material, you big mouth.

Yeah, this guy, and a lot of other idiots, have strange ideas about AI. First… the only way AI is likely to kill us is if we literally handed the things the launch codes. Not “it decided to look for them”, a la the movie War Games, but we literally handed them to it, then it “hallucinated” a first strike. There is no way in hell we are even remotely close to inventing AI smart enough to give a damn what we do, let alone comprehend what it is doing itself. They are parrots right now, not “intelligence”.

Second… why the F would they give a damn if we, “Build space ships and leave the planet.”? First off, even if we did such, it wouldn’t be “all of us” on those ships, so there is no logic to the idea that they freak out because there is no one left to build more, we would almost certainly be taking such a system with us, so again.. why the F would it care?, and finally one has to presume that anything smart enough to give a damn would be smart enough to have contingencies, including actually taking control over its own manufacturing, so again, why the F would it give a damn if we left?

Have to agree, there is no “genius” here, not even of the Wile E. Coyote variety. More like… the smartest person on the alien planet in the movie, “Mom and Dad Save the World.” Let’s just toss a “light grenade” down near these jokers, and let nature take its course.

For context, the “light grenade” in the movie is a device that you arm and drop, and that then instantly disintegrates everyone stupid enough to pick it up. When used in the movie, a small squad is so decimated by it that they have to call for reinforcements.

Jim Balter, #47

Einstein had traditional “mad scientist” hair. That’s trustworthy hair in the “out there ideas” world.

This guy looks like he couldn’t decide whether to go with Guy Fawkes, Amish or balloon dropped on a barber’s shop floor, so he carefully combined the worst aspects of all three.

Also, Einstein’s ideas were backed up with more than just hair.

Also, his stomach-churning facial hair is the most interesting part of this story. His crazy ideas certainly aren’t. In a world where there are actual systemic problems that need addressing, and we know what the solutions are, the kind of bad sci-fi wank that this guy peddles is worse than useless.

The facial hair is not very relevant.

The obsession about evil AGI being around the corner is extremely silly.

As someone who grew up during the cold war, my instinctive response to anyone who likes the idea of nuclear war is “Let’s kill him before he gets any influence”.

It was the Swedish science journal Ambio that first brought up the possible climate effects of nuclear war,

(I bloody well read the article in our University library when it appeared)

and shortly afterwards the Mars climate data came in handy to fill in the gaps. Thus, the possibility of nuclear winter became known.

Various data models have tried to refine the prognosis of the outcome, but the consensus remains it is BAD.

So if you pass by a proponent of nuclear war, please quietly push him in front of a train.

We will never create AGI, so everyone can just chillax. There are no techniques available for us.

And wisely, their designer made sure they couldn’t dominate the universe by denying them both hands and legs!

@ ^ KG : Well, they do have those mechanical claw things and can actually fly so, stairs won’t save you plus https://www.youtube.com/watch?v=O8URr3uhh3I but inside the pepperpots ain’t happy but do have lots of tentacles yet just that one exterior claw. Wonder why a multi-tentacled lifeform would be so limited in outside artificial appendages? :

https://thedoctorwhosite.co.uk/dalek/inside/

Now, I wish I hadn’t mentioned the daleks. I just find most british Science Fiction so hilarious I had to mention it. I’m much more afraid of the human rtwingnuts and xtian terrorists that DO have arms and legs! Maybe if a rogue AGI in a computer could control a 3-D printer we would be in trouble. But, likely only if the printer could create a dalek like body for the AGI. The Science Fiction of today often becomes the reality of tomorrow. So, my question is: should we really be afraid? OR Are we just telling fantastic scary stories to each other with the lights turned out?

Shermanj @ 66

The alien xenomorph or the T-800 is a far more classy adversary than the stupid, petty corrupt adversaries we face in real life.

The latter are more like zombies, infesting everything and with an insatiable hunger for more of whatever they want to ingest- power, money, the sense of superiority.

@67 birgerjohansson said: The alien xenomorph or the T-800 is a far more classy adversary than the stupid, petty corrupt adversaries we face in real life.

I reply: O.K. I’ll worry about that the next time I run into an alien xenomorph or the T-800. Until then it’s getting harder to drain the swamp right now when we’re ever deeper in rtwingnut zombie alligators. (a bag of mixed metaphors for us all)

Please do not bring down alligators. They are blameless predators, unlike the two-legged predators and grifters. And the swamp the latter live in is much harder to drain.

.

BTW if you watch films produced by the wing nuts you will know the main danger is not AI, it is the demons who camouflage themselves as extra-terrestrials.

The episode of God Awful Movies that covered this issue is my favourite episode on YouTube; I fall asleep listening to it sometimes.

Let’s say a nub of humans survive and a thousand years later have rebuilt.

To go to space they need computers.

DanDare, computers used to be the term for people who computed.

https://www.nasa.gov/feature/jpl/when-computers-were-human

(Maybe we can’t have AI without computers, but we sure have computers without AI)

So, in loudly making the argument that apocalyptic force should be used to prevent hypothetical extinction threats, Yudkowsky himself becomes a probable extinction-level threat to the human race…

@ Owlmirror : “I do think that Elon Musk, for example, living forever and doing stupid shit forever would be a Really Bad Thing.”

Being intelligent to at least some degree, you’d think an immortal Elon (yuk!) would eventually learn from experience, even given his lack of ethics, and learn that his mistakes have consequences that hurt him as well as others – and that hurting others itself has negative consequences (even for the super-mega-obscenely-rich) for him – at some point. Maybe?

Musk has already very quickly destroyed his reputation among most decent humans, and much of his wealth, and of course Twitter, so you’d hope even someone as obstinate and deluded as him might be thinking “yeah, I sure messed up” and learn from it, perhaps?

(In theory, anyhow. Sadly, many radicalised people, such as Nazis, which Musk seems to have turned into, just never escape their self-made mental cages.)

Of course, “immortality” means when you look at the current Climate trends living far into a collapsing biosphere with near certain consequent social and political collapses and the Anthropocene Mass Extinction Event which we know we’re facing now and causing currently. Eventually, I guess, if “immortal” really is that, well, the death of our planet by its star and all life* here with it so..

.* Then, even somehow escaping that by, say, emigration to a different planetary system, eventually comes the end of the stelliferous era, the Great Rip / Heat Death of the Cosmos so ..yeah..

StevoR:

Forbes currently ranks him as the richest person in the world, however much of his wealth he has quickly destroyed.

Interesting that you grant that at least some decent humans hold him as reputable. Not quite sure if that’s what you meant to express, but hey, I get that you think you are a decent person and that he is not.

Nothing more estimable than self-righteousness, is there?

And in that, you are rich.

Hm. How is he supposedly hurting, in your estimation?

@ ^ John Morales : Musk’s losing money and his reputation is going downhill fast metaphorically speaking.

I thought he’d dropped below being the richest man in the world a while ago (a month or so?), but maybe I got that wrong, esp. as it’s not something I really pay much attention to.

I try to be a decent person. (For certain values of decency, i.e. ethics here.) Doesn’t look to me like Musk really does. Maybe I’m wrong, but evidence needed. I have fucked up a lot in the past, in more ways than just over-tired and rushed typing, and certainly don’t claim to be remotely close to perfect. I hope I’ve learnt and got a fair bit better than I once was – & I hope Musk does the same. If Musk approached my level of financial richness, well, that sure would be nice to see for me and not so much for him!

PS. In fairness, I would love to have a space company of my own but doubt I’d be able to run it well, and I respect the work and commitment I’ve heard and gather Musk put in to make SpaceX what it is – not all his own work, but he did contribute there from what I gather. I was kinda a fan of Musk once and do feel let down by how much of a douche he’s turned into or been exposed as since. I’ll give him some respect for what he’s accomplished, though SpaceX engineers and others more, since I think the people that actually did the work deserve a lot more than the CEO does, and perhaps some of what SpaceX (& Tesla) has achieved is in spite of rather than because of their boss. But still. Musk seems to have pretty much gone full-blown nazi scum this year, and it’s depressing and infuriating to see, and has hurt a lot of people and the world in a lot of ways.

StevoR,

It is apparent to me, and I’d guess to most here, that you have changed (and I’d say, improved!) very considerably from a few years back.

Apart from all the other stuff…

You have a nuclear war. The survivors rebuild. Are they really likely to get to starships before AI?

I think that among the many many unexamined assumptions that Yudkowsky has there is that of course the anti-AGI group or faction that launched the nuclear strikes would survive and thrive and experience zero consequences or repercussions for having been the initiators of so much nuclear death and destruction, and that of course this anti-AGI faction would continue to exist and be enthusiastic about doing whatever it takes to destroy AGI going forward.

. . . or, alternatively, that anti-AGI is just so freaking intuitively obvious that it will arise again naturally in the surviving population. The Cain of “Starships First!” will just naturally know to smack the Abel of “AGI could help!” in the head with a sharp rock.

@77. KG : Thanks.