As readers know, I like to take retrospective looks at the New Atheist movement. What can I say, I was involved for ten years and I have grievances. But there’s another adjacent community I think a lot about, even though I was never personally involved: the Rationalist community, also known as the LessWrong community. I also think about Effective Altruism (EA), a significant spinoff community that focused on philanthropy.

I always had issues with the Rationalist community, as well as personal reasons to keep my distance. But looking back, I honestly feel like Rationalism left a better legacy than either the Skeptical or New Atheist movements did, and that legacy came in the form of EA. I keep my distance from EA, but at the end of the day they’re doing philanthropy, and encouraging others to do philanthropy, and I really can’t find fault with that.

To understand the Rationalist community and its history, I highly recommend the RationalWiki article on LessWrong. (Despite the name, the RationalWiki is unaffiliated, and not entirely sympathetic.) What follows is more of a personal history.

The Rationalist community has been on the periphery of my awareness for as long as I’ve been blogging. I liked to write about critical thinking and LessWrong was one of those critical thinking websites. I later learned LessWrong had a distinctive community, with extensive jargon, and numerous idiosyncratic viewpoints. A lot of these eccentricities can be traced to Eliezer Yudkowsky, an autodidact blogger and charismatic leader. Yudkowsky wasn’t just interested in critical thinking, he was also interested in transhumanism and AI. Yudkowsky’s “Sequences” served as a foundational text for Rationalists.

Before you accuse Rationalism of being a cult of personality, you should know that if it ever was, then it isn’t anymore. Around 2013 or so, there was the LessWrong Diaspora, and most of the community dispersed and diversified. The closest thing to a central blog is Slate Star Codex, but it does not have nearly as much sway as Yudkowsky once did. Among other things, some Rationalists went to Tumblr and got into social justice–you might even like some of them without realizing it.

I kind of hate Rationalism. Some of my reasons are substantial, and some are more petty and personal. For example, I hate that half of their jargon was built from sci-fi references. I don’t care for sci-fi, and have negative experiences with sci-fi geekery. I am not interested in their preoccupation with arguing about AI. They are not as good at critical thinking as they believe they are, which is just a perpetual problem for any community that tries to teach itself critical thinking. And I disagree with their philosophy of trying to calmly argue with bad people, while tolerating them within their spaces. For example, the alt-right Neoreactionary movement orbited them for years.

That said, I’ve read enough Rationalist stuff that my outsider status could be questioned. I never read the Sequences, but I read Yudkowsky’s other major work, Harry Potter and the Methods of Rationality (and wrote a review). I read the blogs Thing of Things and The Uncredible Hallq (the latter now defunct). I read the entirety of this Decision Theory FAQ. And I know rather more about Roko’s Basilisk than anyone really ought to know.

I don’t know how EA split off from Rationalism. I suspect it emerged from the Rationalist ethical philosophy: basically utilitarianism, except that they go to great lengths to find every single bullet, so they can bite them. One bullet that Rationalists have been more reluctant to bite is the idea that maybe instead of enjoying one’s own wealth, one should give it to a good cause. EA, on the other hand, took that particular bullet and ran with it. They won’t give up all of their personal wealth, but they’re interested in giving what they can while maintaining a community that doesn’t immediately scare everyone away.

If an EA person ever asks me why I keep my distance, I just tell them that I have low scrupulosity. “Scrupulosity” is a concept within Rationalism/EA, describing the personality trait of having a very strict conscience. (ETA: This is not correct, see comments.) When it comes to doing the right thing, scrupulous people are optimizers rather than satisficers. They worry all the time that even if they’re doing the right thing, they’re not doing the best thing. I am definitely not like that. If you persuaded me that I was not doing the best I could possibly do, I’d just shrug and move on. I learned about scrupulosity on Thing of Things, and I feel it goes a long way towards explaining the weirdness of EA and why it’s not for me. EA is not just preoccupied with giving to a good cause, but with giving to the most efficient good cause. And I’m just not interested in that sort of optimization.

There are three major domains that EA people argue about: global poverty, animal welfare, and existential risk (i.e. the risk of human extinction, aka ex-risk). To outsiders, ex-risk is the most outlandish of the three, and it only gets stranger when you realize that the risk they’re most concerned about is the risk of a malevolent AI takeover. But if you understand that EA still overlaps significantly with Rationalism, which is also preoccupied with AI, it’s not that surprising.

Back in 2015, Dylan Matthews wrote an article about his experience at Effective Altruism Global, and his concerns about the presence of AI ex-risk. It covers a lot of the counterarguments: a) From afar, it looks a lot like tech people persuading themselves that the best way to donate money is to fund tech research. b) It’s based on speculation about an astronomically low-probability event with catastrophic consequences, and how much do we really understand about such a rare event? I have this crazy notion that putting money into AI research has a tiny probability of actually making the problem worse. c) It veers into Repugnant Conclusion territory. I will also add d) it implies placing immense value on people who have not yet been born.

To be clear, I’m not against funding AI research, it just seems dubious whether that really belongs in the philanthropy category.

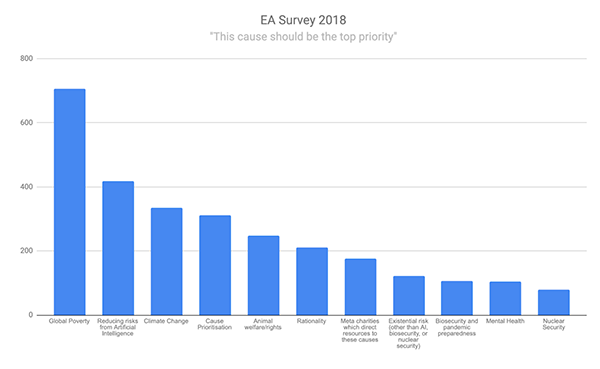

For a quantitative perspective, I found an EA community survey from 2018. When asked what cause should be the top priority, most people said global poverty, and AI ex-risk came in second place.

I know the text is too small, so here’s a transcript. Title: EA Survey 2018 “This cause should be the top priority”. Bars from left to right: 1. Global Poverty, 2. Reducing risks from Artificial Intelligence, 3. Climate Change, 4. Cause Prioritisation, 5. Animal welfare/rights, 6. Rationality, 7. Meta charities which direct resources to these causes, 8. Existential risk (other than AI, biosecurity, or nuclear security), 9. Biosecurity and pandemic preparedness, 10. Mental Health, 11. Nuclear Security. I honestly have no idea what “cause prioritization” or “rationality” mean. The biosecurity people are looking vindicated about now.

I don’t like how much time and money they give to AI risk, but at the end of the day, even excluding ex-risk causes, they’re still doing philanthropy. For comparison, look at the speedrunning folks at GDQ: they waste a lot of time and money on something that is objectively useless, but they also run big charity fundraisers, which is more than I can say for most hobbies. What I’m trying to say is that even if some of the money is considered completely wasted on idiosyncratic interests, the project as a whole seems praiseworthy.

Since I got a job this year, I too have undeserved wealth, and I’m starting to think about where it could be donated. Although I only pay attention to EA tangentially, some of their values have rubbed off on me. I give GiveWell’s top charities serious consideration, and I appreciate the aesthetic of the Giving What We Can pledge. I appreciate that EA made me think about these topics, when I’m not really the kind of person who would think about them otherwise.

I notice that their priorities do not include racism and other bigotries (misogyny, homophobia, etc.), or war, despite the fact that these are not only hot topics, but affect an enormous number of people.

I can’t help thinking that this community consists mostly of well-off, highly educated (Western) white people, probably mostly male as well, in countries unaffected by war, and those in the community who aren’t are probably marginalized. In other words, what doesn’t affect them and their friends is off their radar.

Of course, that also applies to the Atheist and SF communities, which I gather is where many of these people come from.

@Allison,

While it’s true that they do not (AFAIK) put much focus on racism or other social issues, I do feel that it’s their prerogative. Bringing back the GDQ analogy, I would never criticize GDQ for choosing to donate to Prevent Cancer rather than anti-racist organizations. So judging EA by the same standards, I’m okay with it.

Something that would bother me more is if it turned out that EA was failing at social issues in the way it conducts its own communities, for example, if it turned out the community is toxic to people of color. I’m not familiar enough with the EA community to say if this is a problem, but I certainly haven’t heard anything of the sort. They have a reputation for being super polyamorous, and that’s about all I know.

@Allison, in EA, one important criterion for determining causes to focus on is “how neglected is it?” Being a hot topic, so to speak, is a point against having EA focus on such areas.

I’m not super involved in EA (yet—I do plan to be), but I have heard some things about sexism that do make me wary. I’m also fairly uninterested in AI risk, although I don’t necessarily mind the (what seems to me) excessive focus if other topics are still talked about, especially poverty reduction. I do appreciate that the people involved in EA are thinking broadly about many different possible risks, though.

I think the only quibble I have with the writing of this article is the characterization of scrupulosity. It’s not a concept originating from the rationalist/EA communities; it comes from psychiatry. I first heard about it as a mental health issue when I went to Catholic school, and then in the context of OCD (see https://iocdf.org/wp-content/uploads/2014/10/IOCDF-Scrupulosity-Fact-Sheet.pdf, and also the Wikipedia page, https://en.wikipedia.org/wiki/Scrupulosity). I’m pretty sure Ozy is talking about the same concept.

I probably have scrupulosity-themed OCD. I would not classify it as a personality trait or simply as having a strict conscience (an *overly* strict conscience is a better descriptor). I like the Wikipedia page’s description: scrupulosity is having pathological guilt. It’s an illness, and in fact makes me a worse person: it saps my energy uselessly, which makes me less able to be kind and caring to others around me without giving me any added benefit of being more moral or whatever. I would be careful to distinguish scrupulosity from simply having an optimization mindset when it comes to morality—the two groups of people do overlap a lot (it might be accurate to say that scrupulous people are a subgroup of people who have an optimization mindset about morality), but they’re not the same. Scrupulosity is a bad thing, as Ozy says in the link.

(I guess the thing is this article makes it sound like “scrupulosity causes people to care about optimizing morality” when it’s really the other way around: caring about morality a lot + being vulnerable to mental illness can lead to scrupulosity. Re OCD especially, it targets values its victims care about; if someone has scrupulosity-themed OCD, that means they care a lot about morality —> their illness makes them obsess about it excessively.)

I’m glad EA has made you think about charity! Thank you for writing this.

@kernsing #3,

I do not claim that scrupulosity originates with Rationalism or EA, merely that it is an extant concept.

There seem to be two adjacent meanings of “scrupulosity”, one referring to a pathological trait, and the other referring to a personality trait that exists on a spectrum, but which is not necessarily pathological. Based on your citation to Wikipedia, the pathological definition is more legitimate. On the other hand, what I’m trying to say here is that I do not merely lack the pathological trait of scrupulosity, but that I’m on the other end of the spectrum. So, I don’t know how you would prefer I communicate that information, but I have found that telling people I have low scrupulosity gets the idea across effectively.

@Siggy, Ah, you’re right that you never claim scrupulosity originates with Rationalism or EA, sorry about that. It just seems weird to me to talk about scrupulosity without also talking about the mental illness aspect.

I think “I am not a very scrupulous person” is a better way to communicate it. “Scrupulous” the adjective doesn’t have the same pathological connotations as “scrupulosity” the noun (to me at least). But if it already gets the idea across, I guess you don’t have to change how you speak.

Re my previous comment: “scrupulousness” is also not a word associated with mental illness, I think, and it’s also a noun like “scrupulosity.”

Addendum, for clarity: …without also talking about the mental illness aspect, when that is what Ozy’s post is about.

(Is there no way to edit comments on here? I know there’s a grammatical error in my first comment.)

@kernsing,

I want to explicitly admit error here, and thank you for the correction. I think what I said in the post is just wrong, and a case where I did not do the research.

I was inferring scrupulosity-as-personality-trait from statements like “EA is a very high-scrupulosity community”, which certainly seems to imply it as a personality trait. Whether that’s how it’s being used, or if it’s a misinterpretation on my part, I’m wary of appropriating mental illness terms to describe non-pathological personalities (e.g. using “OCD” to describe someone who just has high cleanliness standards). So I will avoid using it that way in the future.

@Siggy, I can definitely see how you could interpret such statements as referring to a personality trait, although I’d hope they’re not meant to be for the reason you listed (i.e., misusing mental illness terms). In any case, thank you for taking my comments into account! I appreciate it.

On the surface and at first, the ideas of the Rationalist community appealed to me immensely. Paying attention to how we think and make decisions and trying to be more rational and less biased about how we perceive the world? Yep, I like that. Count me in.

Except that when I started reading online conversations between these people and the stuff they published, I got disgusted pretty quickly. Here are some reasons why: pickup artistry, evolutionary psychology, belief that IQ test results are meaningful, sexism, arrogance.

For example, I found a discussion thread in which people tried to answer the following question: “Why are there so few women in the Rationalist community?” People speculated that fewer women than men have extremely high IQ, which was supposedly necessary for being able to appreciate Rationalist ideas. They also speculated about evolutionary psychology and how women evolved to like different things than men, basically, the old stereotype that women are more emotional and less rational.

I am merely AFAB; I don’t identify as a woman, but all that crap I read in said discussion thread felt like a personal insult to me. I got disgusted rather quickly and could only wonder how these people failed to realize that maybe women don’t like the Rationalist community because of the rampant sexism displayed in this very discussion thread.

I am skeptical of evolutionary psychology per se, but when it is used to justify sexist stereotypes… Ouch. Or pickup artistry… When I was 20, I once had the bad luck to spend an evening with a man who tried these techniques on me. On that day I decided that I wanted to date shy men who are happy with me making advances, because I hate being treated like a piece of meat.

And how these people tried to explain the fact that people of color have, on average, lower IQ test scores… Yuck.

And then there was this overwhelming arrogance I kept noticing. A bunch of people who imagine themselves to be better than everybody else and assume that they can never be wrong, because they are oh so rational.

Of course, in every community there are nice people and there are assholes. It is highly likely that, while randomly browsing texts written by people from this community, I accidentally found the shitty bits written by the occasional asshole you are bound to have in every community. Maybe my opinion about the community got unfairly spoiled due to a random chance that caused me to find the nasty bits.

Also, I couldn’t care less about some highly unlikely doomsday scenario involving artificial intelligence. Especially when mass famines caused by global warming are pretty much inevitable given our current greenhouse gas emissions.

Other than that, the nice parts about critical thinking did appeal to me. I have also enjoyed various rationalist novels, for example, Pokemon: The Origin of Species was really fun to read. I also mostly enjoyed HPMOR.

@Andreas Avester #10,

Yep, that sounds like the Rationalists. Certainly not all Rationalists are assholes, but as I said they have this community value of tolerating bad people in their spaces, so the assholes are basically everywhere. I try to accommodate people who disagree with me too, but they take it to an extreme, and now I always worry that if I tolerate trolls too much I’d fuck it up as badly as Rationalists do.

In contrast to Rationalists, I have a better impression of EA. They have more of an incentive to not be assholes, because getting more people in EA means more philanthropy. But again, I keep my distance, so it’s hard for me to judge.

> I kind of hate Rationalism. Some of my reasons are substantial, and some are more petty and personal. For example, I hate that half of their jargon was built from sci-fi references.

Wait, are they trying to show the validity of the post-modern critique of rationality – namely that it’s culturally determined?

You clearly have spent more time reading this stuff than I have, so I will ask: Is it just tolerating bad people in their spaces or is there bigotry and opposition to social justice deeply rooted in the generally accepted values of the community itself as a whole?

Consider, for example, this gem about eugenics:

https://web.archive.org/web/20130812211250/http://lesswrong.com/lw/f65/constructing_fictional_eugenics_lw_edition/

I think that’s hard to answer, or maybe it’s too poorly defined a question. I mean, I’m much more familiar with the atheist movement, but I still wouldn’t be able to answer such a question for the atheist movement. On the one hand bigotry was and is an enduring element of the atheist community; on the other hand a lot of us seem to have avoided being bigots without uprooting our entire value system or anything like that.

Although I think part of the problem is that they tolerate bad people in their spaces, it’s also true that there are major issues with typical and leading viewpoints. I couldn’t find concrete evidence that Yudkowsky supports eugenics, but a quick check showed that Scott Alexander (aka Yvain, and author of Slate Star Codex) does support eugenics.

I’m a pretty big advocate of effective altruism (though I’m not part of the online community). Though there’s a lot of interesting psychology research on why it’s not a generally appealing philosophy. I actually have an article coming out (hopefully) soon on this very topic. I’ll send it to you once it’s published.

@Alan,

I’d love to read your article. Don’t worry about notifying me, I have RSS.

Certainly. But that lack of emphasis is IMHO a strong indicator that their community does not include people from the demographics affected by those issues, or people who spend much time with people who are.

I also can’t help suspecting that it is not a coincidence that those people are missing. Many, many subcultures in the Western world, such as the atheist and SF and (from what Andreas Avester says) the Rationalist communities, have earned a reputation for being bigoted and hostile to people who are not Western, white, well-off, and male. Why would I assume that the EA community is an exception?

I also can’t help thinking that if these philosophies attract so many people of that ilk, maybe there’s something wrong with the philosophy.

@Allison #17,

One observation that came up when I linked to this on my social media is that EA is very clearly willing to help people who are demographically different from themselves, insofar as they are mostly people in the US working to help third world countries. They are fiercely global in their perspective, and I think that’s a good thing.

It was also observed that EA is willing to discuss, and has discussed, social and political issues as possible cause areas, for whatever it’s worth. But there seems to be a consensus that these are not top priorities (except mental health, apparently?). I also wouldn’t be surprised if they decided that the most efficient way to donate to anti-racist causes was some organization in, like, Africa or something. The preferred org for mental health seems to be StrongMinds, which operates in Africa.

I don’t find speculation about the hypothetical racism of a community to be useful. We could make that speculation about literally any community, and we’d be right most of the time too.

OK, you are right, that was a hard question to answer for any community.

In order to actually support eugenics in the real world, you’d have to be utterly bigoted, totally rotten, and have zero respect for human rights.

What bothered me in this discussion thread were the underlying attitudes that were seeping from the writings of these people who probably (or hopefully) don’t actually support eugenics in the real world due to the obvious human rights issues.

This was supposed to be an imaginary society that practices eugenics without being dystopian. Yet I perceived it as immensely dystopian. Yudkowsky probably imagines himself in such a society with a harem of women all of whom want his sperm. Under Rawls’s veil of ignorance, I imagine myself growing up as a child who is denied equal financial resources (and probably gets a worse education) only because my parents scored too poorly on an IQ test. I imagine myself being labelled as a less valuable citizen before my birth, long before I have taken the first IQ test of my own life.

There were numerous terrible premises in this discussion thread:

1) IQ is genetically hereditary (no, it isn’t).

2) Inborn intelligence can be reliably measured with an IQ test (false, people get better scores on IQ tests after having had access to good education).

3) Accomplishments like getting a Nobel are a reliable proxy and mean that a person has good genes and should donate their sperm or eggs so as to have lots of biological descendants (maybe instead they got their Nobel because they were lucky enough to have access to education and opportunities in life?).

4) It’s appropriate for arrogant assholes who claim to have gotten a high IQ test score to ponder how to prevent dumb people from breeding.

5) In the comment section there was a lot of patronizing talk about how poor people are dumb, cannot plan ahead, make unwise decisions, etc.

6) In the comment section there appeared the belief that you can eradicate poverty “by multiplying the ranks of those who can form productive enterprises” once all those dumb people stop having kids.

In my opinion, the kind of conversation that happened there could not happen in a community in which values like anti-bigotry and social justice are the norm. This is why I had gotten the impression that in this community problems could run much deeper than merely tolerating the presence of bigots.