I’ve read a few introductions to Mastodon in my time, and none of them hit the right notes. Here’s my attempt to play that tune. This song is pretty long, so I’ve split it in twain.

ActivityPub: The Coin of the Realm

The early days of the internet were defined by the afterglow of Cold War paranoia and opinionated programmers. Take Usenet: it was a decentralized messaging service that allowed anyone to talk to anyone else on a specific topic. In recent years it’s been reduced to a distributed file storage system, but for a while it was the place to chat. Its older cousin email is more targeted, but no less decentralized.

The rise of personal computers and the dot-com boom brought the internet to everyone. You didn’t need to use an institution’s terminals to access the internet, nor its bank account to pay for an expensive server to hold your messages. In theory, anyone could set up a Usenet or email server in the cloud and have their own personal fiefdom.

But the flood of cash also led to a rush of land claims. Facebook would be all too happy to run servers and manage messages on your behalf, provided you let them read at least some of your data and show you advertising. By adding useful features they could attract a critical mass of members, who would provide content that attracted more members (on their own dime, no less!), creating a feedback loop that turned platforms like Facebook into de facto monopolies. By deliberately not playing well with others, these monopolies also raised the cost of exit; you wouldn’t want to be cut off from your friends and family if you closed your account, now would you?

Those opinionated programmers spotted this problem ages ago, and have been coming up with solutions. Their primary weapon was a standardized protocol for decentralized socialization, and in the past decade and a half an abundance of weapons have been forged: OStatus, Diaspora, Zot!, ActivityPump, and Mim have been the bigger players in this space. ActivityPub is a relative newcomer, but nonetheless it has rapidly become the weapon of choice.
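
To make “standardized protocol” a little more concrete: ActivityPub messages are JSON documents written in the ActivityStreams 2.0 vocabulary, which one server POSTs to an account’s inbox on another server. Below is a minimal Python sketch of a “Follow” request. The account URLs and the `make_follow` helper are hypothetical, and real servers also sign each delivery (Mastodon uses HTTP Signatures), which this sketch skips entirely.

```python
import json

def make_follow(actor: str, target: str, activity_id: str) -> dict:
    """Build a minimal ActivityStreams 2.0 "Follow" activity (sketch only)."""
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "id": activity_id,   # unique URL identifying this activity
        "type": "Follow",
        "actor": actor,      # the account doing the following
        "object": target,    # the account being followed
    }

# Hypothetical accounts on hypothetical servers.
follow = make_follow(
    actor="https://example.social/users/alice",
    target="https://other.example/users/bob",
    activity_id="https://example.social/activities/1",
)
print(json.dumps(follow, indent=2))
```

Any software that can produce and understand documents like this one can join the conversation, which is the whole point.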

Consequence: Minimal Attention Economy

Open social media protocols have a huge advantage. Consider Tumblr, which announced it was going to add ActivityPub support to its media site. That’s great! It’ll add millions of new users to the ActivityPub community.

But this sword is double-edged. What incentive is there for me to stay on Tumblr, which is paid for by attention-grabbing ads, if I can substitute different software that allows me to view and interact with everyone on Tumblr but minus the ads? Tumblr’s developers may invent some killer feature to keep people using their platform anyway, but you can see how an open social media protocol kills the business of earning money via engagement. That’s a good thing, given that engagement-driven platforms have inadvertently encouraged the spread of hate speech online.

There are still bills to pay, of course, but the giant investments tech companies have poured into cloud infrastructure have cut them down to practically nothing. Elon Musk spent $44 billion to own his own social network, and needs about $6 billion per year to maintain it; I’ve spent about $35 to gain total control over a slice of ActivityPub, and currently it’s costing me about $120 per year. If you lack my technical knowledge, you can instead purchase your own hosted instance for as little as $72 per year. This dramatically shifts the funding model away from an attention economy towards a collectivist one, and in the process tosses cold water on the outrage machine.

Mastodon: The Door of the Realm

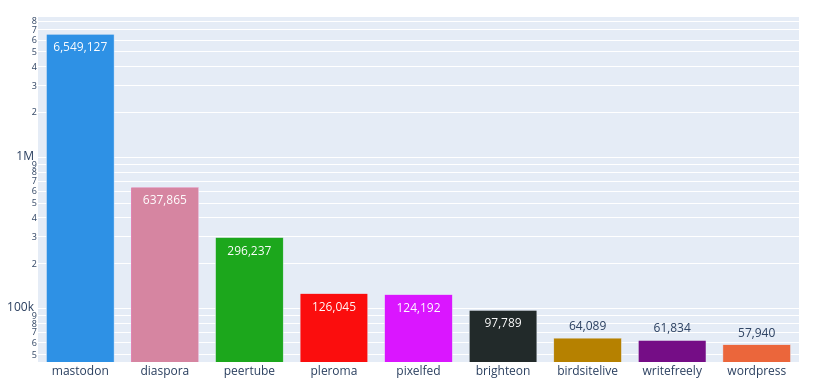

As implied above, we don’t have to wait for this mythical software package to be written. There are actually quite a few available right now, including PeerTube, Pixelfed, Writefreely, diaspora*, Matrix, Pleroma, Misskey, Friendica, Funkwhale, and even WordPress. You can launch these packages on your own server, creating an “instance” of that flavour of social media. Some of these support multiple social protocols, creating a “fediverse” of instances that communicate or “federate” with one another. The mammoth of this space, however, is the ActivityPub-exclusive Mastodon.

How has Mastodon become so dominant? I can float a few theories. Even techies will usually prefer the easiest path to their goal, and out of all the ActivityPub implementations I’ve tried, Mastodon was the easiest to set up. The network effect works equally well on decentralized social media, so a tiny user count advantage could easily snowball into total domination by one software package. As for migration, Mastodon makes it very easy to move from one instance to another, as well as archive all your personal data. I spotted someone with 25,000 followers on Mastodon marveling at how they moved instances without the vast majority of their followers noticing; within twenty-four hours, all but roughly three thousand had silently un-followed the old account and re-followed the new one, with no effort on their part.
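
That painless migration rests on a simple handshake, as I understand it. The new account first lists the old one as an alias (`alsoKnownAs`), then the old account broadcasts a `Move` activity to its followers, and their servers check the alias before swapping the follow. The Python sketch below models that follower-side check. The `Account` class, the helper functions, and the `accounts` lookup table are hypothetical stand-ins for fetching real actor documents over HTTP; this is not Mastodon’s actual code.

```python
from dataclasses import dataclass, field

@dataclass
class Account:
    uri: str
    # Other account URIs this account vouches for as its own ("alsoKnownAs").
    also_known_as: set[str] = field(default_factory=set)

def make_move(old: Account, new: Account) -> dict:
    """Sent by the old account to its followers: "I now live at target"."""
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "type": "Move",
        "actor": old.uri,
        "object": old.uri,
        "target": new.uri,
    }

def handle_move(move: dict, following: set[str],
                accounts: dict[str, Account]) -> set[str]:
    """Return a follower's updated follow list after receiving a Move."""
    old_uri, new_uri = move["object"], move["target"]
    new_account = accounts.get(new_uri)  # stand-in for an HTTP fetch
    # Only trust the move if the new account vouches for the old one;
    # otherwise anyone could claim to be your new home and steal followers.
    if new_account is None or old_uri not in new_account.also_known_as:
        return following
    if old_uri not in following:
        return following
    return (following - {old_uri}) | {new_uri}

old = Account("https://big.example/users/me")
new = Account("https://small.example/users/me", {old.uri})
before = {old.uri, "https://elsewhere.example/users/friend"}
after = handle_move(make_move(old, new), before, {new.uri: new})
print(sorted(after))
```

Because every follower’s server runs this check on its own, nobody has to click anything, which is why most followers never notice the move.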

Myth: There are No Nazis on Mastodon

One thread I’ve spotted suggests Mastodon brought something new: the ability to ban other instances easily. Whether true or not, instance banning has greatly contributed to the impression that Mastodon and the fediverse don’t have any nazis.

A moment’s thought suggests there should be tonnes of nazis. Mastodon has all of the usual moderation tools you’d expect, but of course there’s very little stopping a nazi from spinning up their own instance and becoming their own moderator. In addition to blocking individuals, though, Mastodon users can also block an entire instance, preventing any user of that instance from showing up on your feed. Moderators have finer tools available, and can choose between limiting visibility so users can only see people they follow on that “silenced” instance, or just outright suspending the offending instance and rendering it invisible from that vantage point.
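
Here’s a simplified model of how those layers stack when deciding whether a post shows up in your feed. It’s a Python sketch, not Mastodon’s actual code; the `Severity` values borrow the “silenced” and “suspended” terms above, and the function and parameter names are hypothetical.

```python
from enum import Enum

class Severity(Enum):
    NONE = "none"
    SILENCE = "silence"  # only people who follow an author there see their posts
    SUSPEND = "suspend"  # the whole instance is invisible

def domain_of(handle: str) -> str:
    # "alice@instance.example" -> "instance.example"
    return handle.rsplit("@", 1)[-1]

def is_visible(author: str, viewer_follows: set[str],
               viewer_blocked_domains: set[str],
               instance_policy: dict[str, Severity]) -> bool:
    domain = domain_of(author)
    severity = instance_policy.get(domain, Severity.NONE)
    if severity is Severity.SUSPEND:
        return False                    # moderator suspended the instance
    if domain in viewer_blocked_domains:
        return False                    # viewer personally blocked the instance
    if severity is Severity.SILENCE:
        return author in viewer_follows  # only existing follows get through
    return True

policy = {"silenced.example": Severity.SILENCE,
          "suspended.example": Severity.SUSPEND}
follows = {"friend@silenced.example"}
print(is_visible("friend@silenced.example", follows, set(), policy))    # True
print(is_visible("stranger@silenced.example", follows, set(), policy))  # False
print(is_visible("anyone@suspended.example", follows, set(), policy))   # False
```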

This creates a race to the bottom. Banning an entire instance is about as easy as banning a single user, so setting up an instance to dodge a ban is a waste of time. More importantly, there’s reason to think tolerance of bigotry encourages bigots while seeming innocuous to non-bigots, and this tolerance will help intolerance spread. Under this reasoning, instances that merely tolerate nazis will often find themselves banned and isolated from the sections of the fediverse that don’t. Non-bigoted users who are feeling cut off as a result will migrate away from the nazi-sympathetic instance. This concentrates the nazis into camps, where they can goose-step around with each other as much as they wish but can’t radicalize others. If you happen to create an account on a well-moderated instance, in contrast, you’ll be blissfully free of nazis.
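
The quarantine effect falls out of a very small rule: two instances only exchange posts if neither has blocked the other. The Python sketch below, with hypothetical instance names and helper functions, computes who each instance can still talk to once the well-moderated instances block both the nazi instance and the one that tolerates it.

```python
def federates(a: str, b: str, blocks: dict[str, set[str]]) -> bool:
    """Two instances exchange posts only if neither has blocked the other."""
    return b not in blocks.get(a, set()) and a not in blocks.get(b, set())

def audience(instance: str, instances: list[str],
             blocks: dict[str, set[str]]) -> set[str]:
    """Every other instance this one can still federate with."""
    return {other for other in instances
            if other != instance and federates(instance, other, blocks)}

instances = ["a.example", "b.example", "tolerant.example", "camp.example"]
blocks = {
    "a.example": {"camp.example", "tolerant.example"},
    "b.example": {"camp.example", "tolerant.example"},
}
for name in instances:
    print(name, sorted(audience(name, instances, blocks)))
```

With those blocks in place, `camp.example` can only reach `tolerant.example` and vice versa, while `a.example` and `b.example` carry on talking to each other.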

This isn’t a mere thought experiment. In 2019 Gab switched from using their own code to Mastodon’s, and as a side-effect they joined the fediverse. Tens if not hundreds of thousands of far-Right supporters flooded the social network of millions of normies overnight. The result was exactly as I outlined above: Gab’s instances were blocked by almost everyone, and the fediverse carried on as before. Eventually, Gab voluntarily exited the fediverse, citing the lack of federation as one reason.

Consequence: Distributed Moderation

Every social media platform needs moderation standards and ways to enforce them. The ideal approach is the “benevolent dictator” model, where an omniscient hyper-intelligence free of all bias lays out the commandments. On paper, centralized social media like Twitter come closest to this: a team of well-trained employees hash out the rules and talk among themselves to decide tricky cases.

In practice, the results are far from the ideal. It’s easy to point to the Elon Musk era of Twitter, where someone who believes women are property was reinstated to the platform, and even after he was arrested for suspected kidnapping and rape, Musk is tweeting out the perp’s memes. But a less extreme version of this was true well before Musk. Omniscient hyper-intelligences don’t exist, people do, and all people have their flaws and blind spots. Training and committees can help with this, but they only go so far, and even the best system is pretty useless if the moderation team is underfunded and/or understaffed.

The fediverse solves the moderation problem by going in the opposite direction. Anyone can become a moderator, and any space can be moderated however the mods choose. Want to allow nazis to roam? Go right ahead! Are you A-OK with questionable porn? Then let it flourish on your instance. Want to force all posts to use only the letter “E”? It’s your instance, you can do what you want to. In return, though, any other instance is free to block or limit yours. The end result is a tattered fabric of federation, where almost all moderation standards exist somewhere on the fediverse but most of them are invisible to you. Your choice of instance sets the baseline for how moderated your experience is, and you can use the mute/ban tools to lock it down further.

This approach scales extremely well. As complaints grow about the moderation of larger instances, some of the disgruntled spin up their own instances, become moderators themselves, and set their own moderation policies. If these new instances grow too large, the complaints against them pile up and more mods join the fediverse. The number of mods scales with the number of users, and is only limited by how hard it is to set up and maintain a new instance. Under-moderation isn’t a problem.

But if anyone can set up their own instance, and the typical person is a little sexist/racist/etc., then the typical mod on the typical instance is a little sexist/racist/etc. There’s no employee training to help minimize that, and the only committee that could push back is your fellow mods. It’s very easy to create a walled garden where casual bigotry flourishes, and an outsider might not discover that until they’re inside the walls. You could try browsing the public timeline of an instance to audit their moderation, but there are tens of thousands of instances federating, and some hide their public timeline.

This terrible thicket of instances is an awful experience for newcomers, and often leads to them stumbling onto some form of bigotry, contrary to what they were originally promised. I’ve read a number of solutions to this, and they’re all variations on the same idea: we should appoint a benevolent dictator, or more commonly a team of well-trained volunteer moderators. Presumably, they’d wall off the garden to prevent casual bigotry from leaking back in under their enlightened leadership. For my part, I’d rather not promise new users a nazi-free experience, and instead give them advice on how to use word of mouth and hashtags to find a suitable instance.

Consequence: Searching is Limited

Given this terrible thicket, how would you implement searching? I’ve studied distributed algorithms, so I know the answer is “not very well”: tens of thousands of instances searching one another would generate an overwhelming amount of traffic and introduce a tonne of latency. Adding a caching system would chew up tonnes of memory and CPU, and almost certainly lead to stale entries clogging up the results. Between the bandwidth and the hardware, running an instance would become too expensive for individuals, so they could no longer set up their own.
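
Some back-of-the-envelope arithmetic shows why. If every search is broadcast to every other instance, the request count grows with the square of the number of instances. The figures below (10,000 and 20,000 instances, one search per second each) are illustrative assumptions, not measurements, and the function name is hypothetical.

```python
def broadcast_requests_per_second(instances: int, searches_per_instance: int) -> int:
    # Each instance forwards every search to each of the other (instances - 1) servers.
    return instances * searches_per_instance * (instances - 1)

print(broadcast_requests_per_second(10_000, 1))  # 99990000
print(broadcast_requests_per_second(20_000, 1))  # 399980000
```

Doubling the number of instances roughly quadruples the traffic, and that’s before a single result comes back.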

Preventing all forms of search is a terrible alternative, but there’s some middle ground. First off, give up any notion of searching the entire fediverse. At best, your server can only index other servers it knows about and hasn’t blocked. Second, an instance already collects and caches ridiculous amounts of data during federation. By limiting search to locally-cached data and tolerating a few stale entries, we eliminate the need to flood other servers with queries. Third, usernames don’t take up a lot of room and persist for a long time. Aggressively caching those is very doable.
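
A minimal Python sketch of that middle ground: search only the accounts this server has already cached during federation, skip anything from a blocked server, and never send a query over the network. The `CachedAccount` type, the `search_accounts` helper, and the example handles are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CachedAccount:
    username: str
    domain: str
    display_name: str

def search_accounts(query: str, cache: list[CachedAccount],
                    blocked_domains: set[str]) -> list[str]:
    """Match a query against accounts this server already knows about."""
    q = query.lower()
    hits = []
    for acct in cache:
        if acct.domain in blocked_domains:
            continue  # blocked servers never show up in results
        if q in acct.username.lower() or q in acct.display_name.lower():
            hits.append(f"@{acct.username}@{acct.domain}")
    return hits

cache = [
    CachedAccount("angrycat", "a.example", "Angry Cat"),
    CachedAccount("calmdog", "b.example", "Calm Dog"),
    CachedAccount("angrytroll", "blocked.example", "Angry Troll"),
]
print(search_accounts("angry", cache, {"blocked.example"}))  # ['@angrycat@a.example']
```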

That’s still very limited. A case study: I typed “angry” into the search bar of my primary instance, and got back the users Imani Gandy (angryblacklady, who I follow), as well as Angry Staffer, Angry Waterman, and many more that I don’t follow. Every post returned, however, was one that I’d starred or boosted. And I’m lucky: keyword searching is an optional feature that adds quite a bit of server load, so many instances leave it disabled.
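
That “starred or boosted” pattern suggests a rule along these lines: a post is only keyword-searchable by people with a personal connection to it. The Python sketch below is my simplified model of that behaviour, assuming the connections are writing the post, being mentioned in it, starring (favouriting) it, or boosting it. The names are hypothetical, and Mastodon’s real rules may differ in the details.

```python
from dataclasses import dataclass, field

@dataclass
class Post:
    author: str
    text: str
    mentions: set[str] = field(default_factory=set)
    favourited_by: set[str] = field(default_factory=set)
    boosted_by: set[str] = field(default_factory=set)

def searchable_by(post: Post) -> set[str]:
    # Only people with a personal connection to the post can find it by keyword.
    return {post.author} | post.mentions | post.favourited_by | post.boosted_by

def keyword_search(user: str, query: str, posts: list[Post]) -> list[Post]:
    q = query.lower()
    return [p for p in posts if user in searchable_by(p) and q in p.text.lower()]

posts = [
    Post("a", "Angry about the weather", favourited_by={"me"}),
    Post("b", "So angry right now"),            # no connection to "me"
    Post("c", "Calm day", boosted_by={"me"}),   # connected, but no keyword match
]
print([p.author for p in keyword_search("me", "angry", posts)])  # ['a']
```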

So let’s add a fourth bullet point: hashtags. These take up about as much storage space as usernames, so we have the room to track their per-day usage. When federating, we can query the most active hashtags on our partner and archive the tagged posts locally. Hashtags that haven’t been used in a while can be safely pruned from our local database, as by definition few people are using them.
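
Here’s a rough Python sketch of that fourth bullet point: count hashtag uses per day, rank the busiest ones over a recent window, and prune tags that have sat idle. The class name, the 7-day window, and the 30-day idle cutoff are arbitrary assumptions for illustration, not Mastodon’s actual values, and the archiving of tagged posts is left out.

```python
from collections import defaultdict
from datetime import date, timedelta

class HashtagStats:
    """Per-day hashtag usage counts, with pruning of idle tags (sketch)."""

    def __init__(self) -> None:
        # tag -> {day -> number of uses seen that day}
        self.uses: dict[str, dict[date, int]] = defaultdict(lambda: defaultdict(int))

    def record(self, tag: str, day: date) -> None:
        self.uses[tag.lower()][day] += 1

    def trending(self, today: date, window_days: int = 7, top: int = 3) -> list[str]:
        # Sum each tag's uses over the last `window_days` days, including today.
        cutoff = today - timedelta(days=window_days)
        totals = {tag: sum(n for d, n in days.items() if d > cutoff)
                  for tag, days in self.uses.items()}
        active = [t for t, n in totals.items() if n > 0]
        return sorted(active, key=lambda t: (-totals[t], t))[:top]

    def prune(self, today: date, max_idle_days: int = 30) -> None:
        # Drop tags whose most recent use is older than the idle cutoff.
        cutoff = today - timedelta(days=max_idle_days)
        stale = [tag for tag, days in self.uses.items() if max(days) < cutoff]
        for tag in stale:
            del self.uses[tag]

stats = HashtagStats()
today = date(2023, 1, 10)
for _ in range(3):
    stats.record("Book", today)
stats.record("Trans", today)
stats.record("Trans", today - timedelta(days=1))
stats.record("Old", today - timedelta(days=60))
print(stats.trending(today))  # ['book', 'trans']
stats.prune(today)
print(sorted(stats.uses))     # ['book', 'trans']
```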

Back at our case study, by switching to the “Hashtag” tab of search and leaving the field blank I can see that “#Book”, “#TwitterExodus”, and “#Trans” have been popular topics in my slice of the fediverse recently. There’s also a handy graph next to each that shows none of them are going viral or fading out. Searching for “angry” shows a handful of hashtags that are currently sitting at zero uses. If I go back and select the “#News” hashtag, I’m greeted with posts about a storm hitting Northern California, news of potential explosions in multiple Russian cities, Brazilian indigenous communities using drones to monitor rain-forest destruction, and the latest interview transcripts to drop from the January 6th committee. I also see writers summarizing their biggest stories of 2022, a list of journalists to follow, and a post stating that Mastodon will never replace Twitter as a news source. None of that came from people I follow.

Finally, a search I can put to use! Suppose I’m a new user who doesn’t know which instance to pick, and nobody’s given me tips on where to start. My first step would be to sign up with the biggest instance that’s allowing open sign-ups, then start searching for hashtags relevant to my interests. If I search “#Trans”, for instance, I don’t see any recommendations for LGBT-hostile servers to join, nor any warnings of where to avoid. I do see a lot of transgender people, though, so I’ll keep track of which servers they’re on. Many are on larger servers like “mastodon.social” and “mastodon.lol”, but after some scrolling I see people on “tech.lgbt” and “glammr.us”. That last one has a nifty URL, and when I bring up their About page I discover they’re “for folks interested in galleries, libraries, archives, museums, memory work and records.” Just my kind of people, and best of all they’re a small instance open to new members. I check their public timeline, and get an HTTP error that says I need to be logged in. That limits my auditing options, but on the plus side suggests they’re interested in preserving my privacy. I set up an account there, then go into my preferences on my big-instance account and tell it to transition to the new account. Fabulous!

You’ll hear that Mastodon doesn’t allow full text search because it was a tool of harassment, and that’s half true. On Twitter it was common for bigots to fire off text searches, then mob any new result that came back. It’s led to an arms race of aliases for dodging around search, like typing “Melon Husk” instead of “Elon Musk”. On Mastodon, search is “opt-in”: the odds of being mobbed are minimal, unless you manually include a hashtag in the post. It doesn’t reduce the odds to zero, but existing moderation tools can handle those situations. In practice, the hate mobs that happen on Twitter just don’t happen on Mastodon, despite all the nazis marching around.

But the real truth is that full text search on decentralized networks is terrible, for all definitions of that word. The reduction in hate mobs is just a welcome side effect.

[part two will be linked here, once it goes live.]

[2023-01-21 HJH: I should have included a link to a server directory when I talked about finding a server. Now fixed!]