[I know, I’m a good three months late on this. It’s too good for the trash bin, though, and knowing CompSci it’ll be relevant again within the next year.]

LAURIE SEGALL: Computer science, it hasn’t always been dominated by men. It wasn’t until 1984 that the number of women studying computer science started falling. So how does that fit into your argument as to why there aren’t more women in tech?

JAMES DAMORE: So there are several reasons for why it was like that. Partly, women weren’t allowed to work other jobs so there was less freedom for people; and, also… it was simply different kinds of work. It was more like accounting rather than modern-day computer programming. And it wasn’t as lucrative, so part of the reason so many men go into tech is because it’s high paying. I know of many people at Google that- they weren’t necessarily passionate about it, but it was what would provide for their family, and so they still worked there.

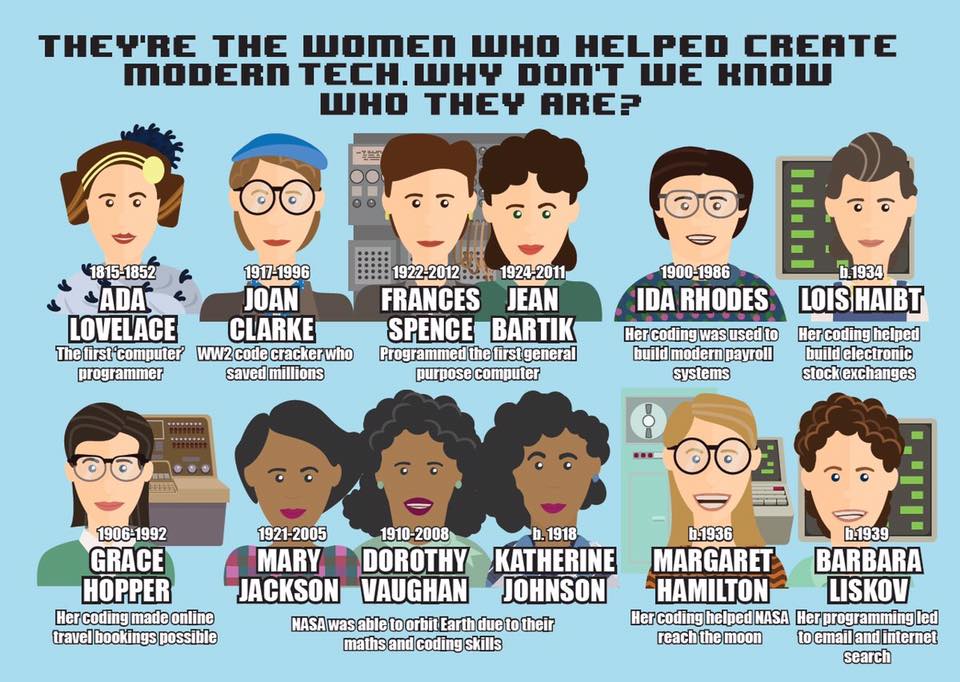

SEGALL: You say those jobs are more like accounting. I mean, look at Grace Hopper who pioneered computer programming; Margaret Hamilton, who created the first ever software which was responsible for landing humans on the moon; Katherine Johnson, Mary Jackson, Dorothy Vaughan, they were responsible for John Glenn accurately making his trajectory to the moon. Those aren’t accounting-type jobs?

DAMORE: Yeah, so, there were select positions that weren’t, and women are definitely capable of being confident programmers.

SEGALL: Do you believe those women were outliers?

DAMORE: … No, I’m just saying that there are confident women programmers. There are many at Google, and the women at Google aren’t any worse than the men at Google.

Segall deserves kudos for getting Damore to reverse himself. Even he admits there’s no evidence women are worse coders than men, in line with the current scientific evidence. I’m also fond of the people who make solid logical arguments against Damore’s views. We even have good research on why computing went from being dominated by women to dominated by men, and how occupations flip between male- and female-dominated as their social standing changes.

But there’s something lacking in Segall’s presentation of the history of women in computing. She isn’t alone; I’ve been reading a tonne of stories about the history of women in computing, and all of them suffer from the same omission: why did women dominate computing in the first place? We think of math and logic as being “a guy thing,” so this is terribly strange.

David Alan Grier’s book “When Computers Were Human” contains the answer. We have to head right back to the very first computers, all the way back to 1757. Alexis Claude Clairaut decided to take on a very ambitious task: predicting exactly when Halley’s comet would appear in 1758. This meant grinding through an immense number of iterations of Euler’s method. Clairaut enlisted two mathematical friends to help with the calculations, Jérôme Lalande and Nicole-Reine Lepaute. This was a very controversial move, but not because one of the team was a woman (Clairaut never recognized Lepaute’s contribution, so few knew a woman was involved). Instead, scientists of that era didn’t trust a calculation they didn’t perform themselves. During the ensuing debate, the term “computer” was coined to describe a person who specialized in doing others’ computations.

Women were involved in computing before the term “computer” existed! This story also points out that even if a society was deeply sexist, there were always progressives around who rejected that; in contrast to Clairaut, Jérôme Lalande did acknowledge Lepaute’s role in that computation. He also kept her employed doing scientific work for fifteen years, such as figuring out where Venus would be during a solar transit, plus the location and timing of a solar eclipse.

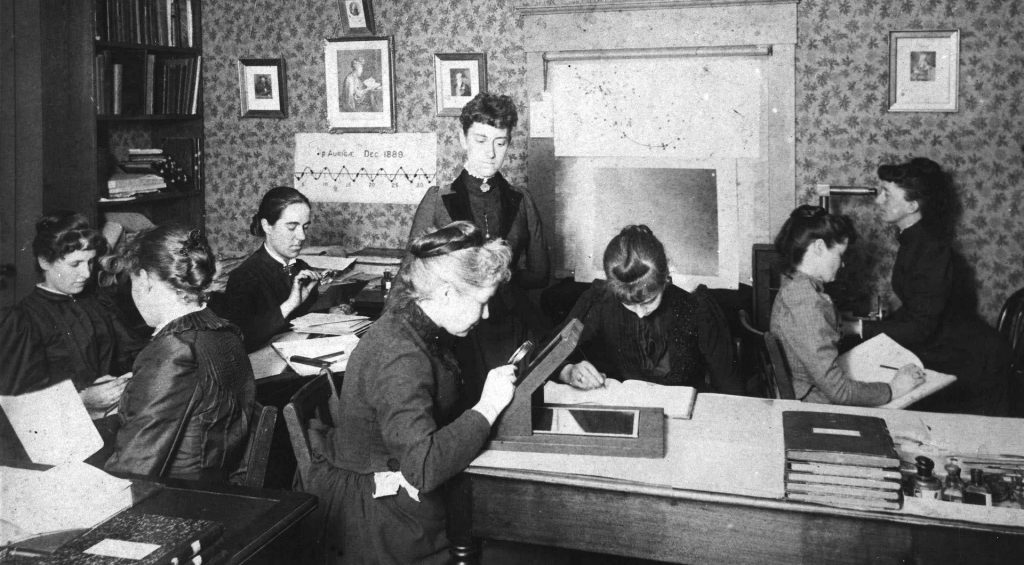

Still, this doesn’t explain women’s dominance in computing. Nearly a century later, for instance, Maria Mitchell was the sole woman in a group of five computers at the U.S. Nautical Almanac Office; in other words, the majority of computers were men. The key number here is “five,” however; there just wasn’t much demand for computation then, so jobs were sparse and settled via ad-hoc methods. That started to change around 1880, as astronomy embraced photography and started churning out huge datasets. As the story goes, Edward Pickering of Harvard was pissed off at his all-male computers over their shoddy work, so he decided to hire his maid to shame them. Williamina Fleming did that ably, by eventually discovering hundreds of variable stars and dozens of nebulae, contributing greatly to astronomy, in addition to grinding through reduction. Pickering was soon hiring women exclusively, and others followed his lead.

It’s not hard to figure out why. The main reason was price per computation: you could pay a woman half as much as a man for the same work, so you could hire twice as many computers for the same amount of funds and thus double your throughput. Secondarily, men had many mathematical jobs available to them at the time, in both academia and industry, and so the best and brightest chose those over toiling away as a faceless computer. Women did not have those options, thanks to pervasive sexism; the best they could hope for was a teaching gig. Thus the potential talent pool for computers consisted of dull men and brilliant women, neither of whom could move to something more prestigious, and one of whom was half as expensive per unit of work.
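To put rough numbers on that "price per computation" argument, here is a minimal sketch. Every figure in it (the budget, the wages, the daily output per computer) is made up for illustration; only the two-to-one wage ratio comes from the paragraph above.

```python
def computers_hired(budget: float, wage: float) -> int:
    """How many computers a fixed budget can pay for at a given wage."""
    return int(budget // wage)


def throughput(budget: float, wage: float, results_per_day: int) -> int:
    """Total results per day from everyone the budget can hire."""
    return computers_hired(budget, wage) * results_per_day


# Hypothetical figures, chosen only so the arithmetic is easy to follow.
BUDGET = 1200            # funds available per pay period
WAGE_MAN = 400           # assumed wage for a male computer
WAGE_WOMAN = 200         # half as much for the same work
RESULTS_PER_DAY = 100    # assumed output of one computer, regardless of gender

men_output = throughput(BUDGET, WAGE_MAN, RESULTS_PER_DAY)
women_output = throughput(BUDGET, WAGE_WOMAN, RESULTS_PER_DAY)
print(men_output, women_output, women_output / men_output)
```

With equal output per person, halving the wage doubles the headcount and therefore the throughput, whatever the actual budget happens to be.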

Even with those “advantages” in place, mind you, it took a desperate need for computation to flip the gender balance. Bigotry nullified that heavy economic bias for damn near a century.

At the time, the hiring of women computers was justified because it was thought that women were less emotional than men, or did better in school, or were more rational, or had greater attention to detail, and thus were perfect for computation. These beliefs persisted well into the 1960s.

“It’s just like planning a dinner,” explains Dr. Grace Hopper, now a staff scientist in systems programming for Univac. (She helped develop the first electronic digital computer, the Eniac, in 1946.) “You have to plan ahead and schedule everything so it’s ready when you need it. Programming requires patience and the ability to handle detail. Women are ‘naturals’ at computer programming.”

Those reasons are just as bullshit as modern excuses for why women aren’t cut out to be coders, but they helped people sleep at night. Again, sexism is a helluva drug.

It wasn’t just astronomy, either; the industrial revolution led to a rapid advancement of engineering and the mechanization of war. The military was in dire need of ballistics firing tables to aim their newfangled mortars and artillery, which are quite nontrivial to compute. Science at large was following in the footsteps of astronomy, demanding ever-greater computational power to keep up with ever-greater datasets, to compute numeric tables covering everything from trig functions to critical values for the Wilcoxon signed-rank test.

Speaking of which, I’d like you to get a feel for what it was like to be a computer. I haven’t been able to find any originals, but it isn’t hard for a programmer to whip up some plausible computer worksheets. The numbers are in “exponential” form, where a signed decimal number with absolute value between one and ten (the first nine digits) is multiplied by ten raised to a power (the last two, also signed). Computers sometimes did classified work, which was handled by simply not telling them what all the number crunching was for. In the same spirit, I’m not going to tell you what these numbers are for, either.
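If the notation is unfamiliar, here is a minimal sketch of it in Python. The function names and the single space between the nine digits and the two-digit exponent are my own assumptions about the layout, not a reproduction of any historical worksheet.

```python
def to_worksheet(x: float) -> str:
    """Format x as a signed nine-digit mantissa plus a signed two-digit power of ten."""
    # "+.8e" forces a sign, one digit before the point and eight after it.
    # Python pads the exponent to at least two digits; powers of 100 or more
    # would need three and fall outside this two-digit layout.
    mantissa, exponent = f"{x:+.8e}".split("e")
    return f"{mantissa} {exponent}"


def from_worksheet(entry: str) -> float:
    """Read a worksheet entry back into an ordinary float."""
    mantissa, exponent = entry.split()
    return float(f"{mantissa}e{exponent}")


for value in (3.141592653589793, -0.000123, 6.02214076e23):
    print(to_worksheet(value))
```

The first value comes out as `+3.14159265 +00` and the second as `-1.23000000 -04`: nine digits with a sign, then a signed power of ten.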

The experience should twig a memory, though.

JAMES DAMORE: … it was simply different kinds of work. It was more like accounting rather than modern-day computer programming.

The comparison to accounting doesn’t make much sense if he only knew as much about the history of computation as the bazillion think pieces I read. Their starting point was around 1940, a time when women like Betty Jean Bartik and Kathleen McNulty were working with and designing electronic computers, when women like Kathleen Booth, Betty Holberton, and Grace Hopper were creating compilers and programming languages. That’s more sophisticated than what the average programmer does today.

But it does make sense if he was aware of the Aberdeen Proving Ground or the Harvard computers under Pickering. Human computers may have toiled away on complex math problems, but these were broken down into many simpler ones that could be done, after a little training, by someone who failed high school. The analogy to accounting is a lot more apt here.

But even this isn’t complete. The typical computer ground away at simple math, and the typical computer was a woman, but it doesn’t follow that women were less capable of math than men. Some women, like Gertrude Blanch, earned the equivalent of a math PhD because it gave them the skills to design their own computations. As they gained seniority, they would become managers of the computer pool and in many cases that meant they would be responsible for translating the complex math into simpler stuff. They were also adding in redundancy checks, to ensure the fallible humans carrying out the computation didn’t muck up the final results.

They were programmers, in other words. It’s telling that when electronic computers arrived, these women became the first people to program those machines; the skills used to manage a pool of human computers translated well into managing electronic ones. Betty Jean Bartik, Kathleen McNulty, and four other women pulled an all-nighter to get the ENIAC computer running for its first demo, because no one else knew it as well as they did. And yet, all six of them were human computers before they were wrangling electronics.
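To make the "manager as programmer" point concrete, here is a rough sketch of the two jobs described above: breaking a formula into one-operation steps, and adding a redundancy check. The specifics are my own illustration, not something from Grier’s book. I’m assuming a small polynomial, Horner’s rule for the breakdown, and a duplicate-and-compare check where two computers work every row independently.

```python
def make_worksheet(coeffs):
    """Break a polynomial (highest power first) into single-operation steps."""
    steps = []
    for coeff in coeffs[1:]:
        steps.append(("multiply by x", None))
        steps.append(("add", coeff))
    return coeffs[0], steps


def careful_computer(start, steps, x):
    """Follow the worksheet one step at a time, without knowing what it is for."""
    value = start
    for op, operand in steps:
        value = value * x if op == "multiply by x" else value + operand
    return value


def sloppy_computer(start, steps, x):
    """Same worksheet, but with a deliberate slip on one row (hypothetical)."""
    result = careful_computer(start, steps, x)
    return result + 10 if x == 3 else result


def cross_check(xs, computer_a, computer_b, start, steps):
    """Accept a row only when two independent computers agree on it."""
    table, suspect = {}, []
    for x in xs:
        first = computer_a(start, steps, x)
        second = computer_b(start, steps, x)
        if first == second:
            table[x] = first
        else:
            suspect.append(x)  # send this row back to be redone
    return table, suspect


start, steps = make_worksheet([2, 3, 1])  # 2x^2 + 3x + 1
table, suspect = cross_check(range(5), careful_computer, sloppy_computer, start, steps)
print(table, suspect)
```

Nobody following the worksheet needs to know what a polynomial is; they only multiply and add. The row where the two computers disagree gets flagged instead of silently ending up in the final table.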

Unlike the think-pieces he inspired, Damore seems aware of the era where women dominated computation. He has a bullshit dismissal of this, but this pattern keeps popping up again and again in his manifesto; he knows his history, he just omits parts of it that contradict his arguments. Hence why he can seem so well-sourced and articulate, yet contradict himself within the span of a short interview. Damore almost certainly knows his arguments are shit, but he’s also aware that if he garnishes them with a faux appeal to science a fair number of people will swallow them without question.